Introduction to RADAR Camera Fusion

Today, I'd like to tell you a bit about RADAR Camera Fusion. This type of fusion has been Tesla's go-to for years, helping them skip the LiDAR altogether and rely solely on these two sensors to get rich scene understanding (from the camera) and accurate 3D information (from the RADAR).

If you've followed my Mini-Course on Visual Fusion (or my big one), you probably know that there are two main types of fusion:

- Early Fusion - fusing the raw data as soon as it gets out of the sensor

- Late Fusion - fusing the objects that each sensor has detected independently

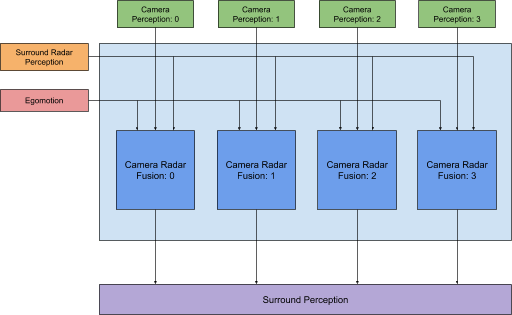

The RADAR Camera Fusion I'm going to talk about comes from NVIDIA, and it's a Late Fusion approach.

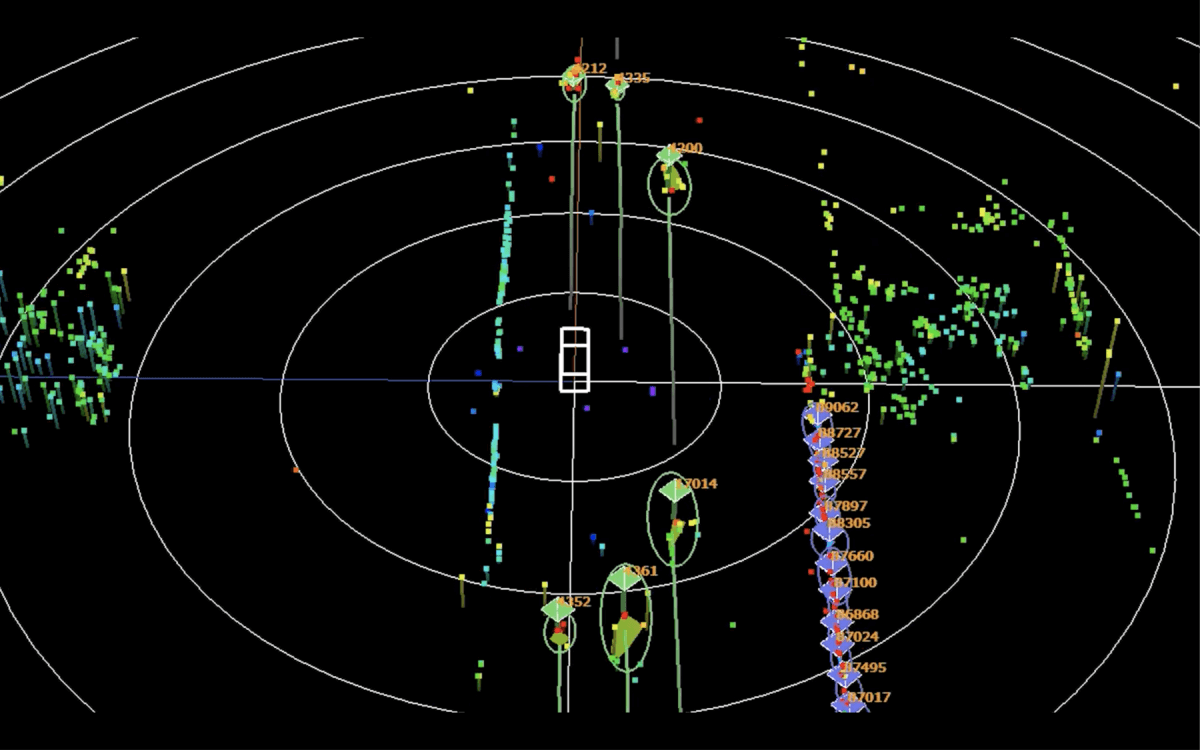

What the RADAR sees

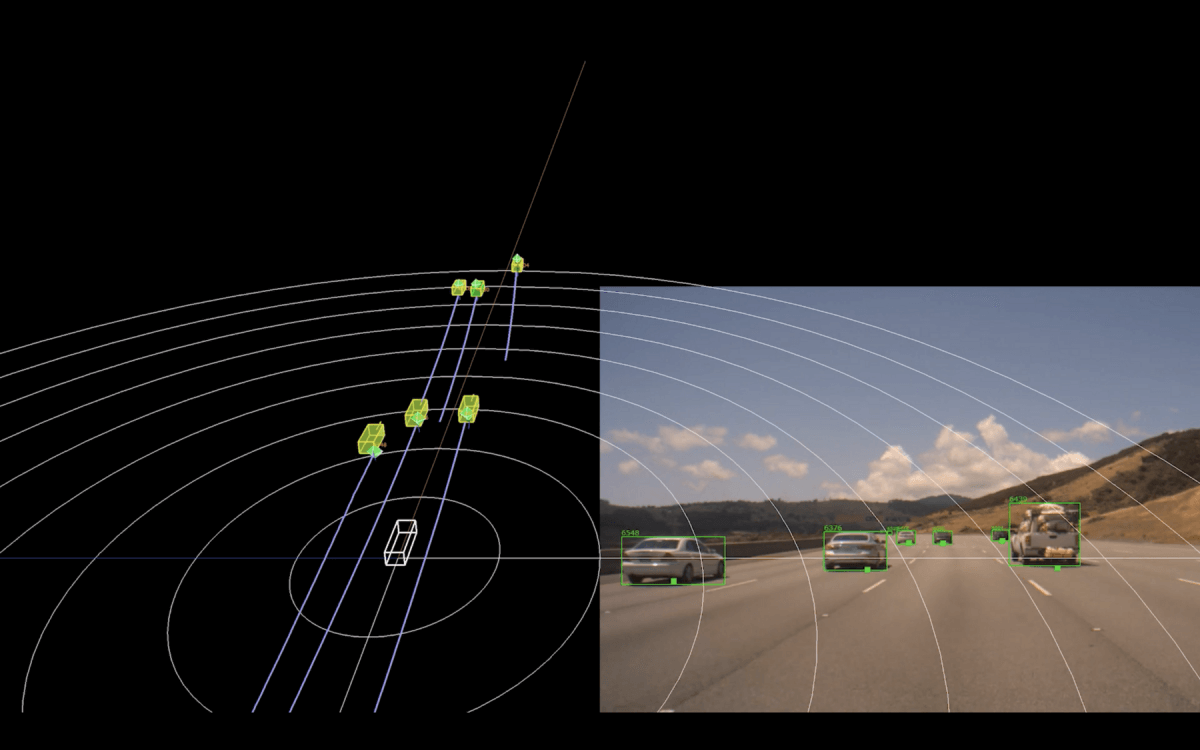

Here's a picture of what a RADAR sees. As you can see, it's not just the raw data: object detection and tracking have already been performed on it.

A few words about it...

- In white, the ego car (our car).

- In purple, all static objects.

- In green, all moving objects.

We can also see all the RADAR signatures (the little dots and green squares everywhere on the image), and the tracking IDs in orange.

As you can see, it's noisy. One object is defined by dozens of RADAR signatures. By the way, if you're wondering how to turn dozens of really close points into an object, clustering could be a good way to start!
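To make the clustering idea concrete, here's a minimal sketch in plain Python. It groups points that sit within a distance threshold of each other (single-linkage clustering) and returns one centroid per group. The function names, the `max_gap` value, and the sample detections are all made up for illustration; this is not what the NVIDIA pipeline actually runs, and a real system would more likely reach for something like DBSCAN.

```python
from math import hypot


def cluster_points(points, max_gap=1.5):
    """Group 2D points that are chained together by gaps <= max_gap (metres).

    Returns a sorted list of clusters, each a sorted list of point indices.
    max_gap is an assumed tuning value, not a number from the article.
    """
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        stack, members = [seed], [seed]
        while stack:
            i = stack.pop()
            # Collect every unvisited point close enough to point i.
            near = [
                j for j in unvisited
                if hypot(points[i][0] - points[j][0],
                         points[i][1] - points[j][1]) <= max_gap
            ]
            for j in near:
                unvisited.remove(j)
            stack.extend(near)
            members.extend(near)
        clusters.append(sorted(members))
    return sorted(clusters)


def centroid(points, members):
    """Average position of the points in one cluster."""
    xs = [points[i][0] for i in members]
    ys = [points[i][1] for i in members]
    return (sum(xs) / len(xs), sum(ys) / len(ys))


# Hypothetical RADAR signatures (x forward, y left, in metres).
detections = [(10.0, 0.0), (10.5, 0.3), (11.0, -0.2), (30.0, 5.0), (30.4, 5.5)]
clusters = cluster_points(detections)
objects = [centroid(detections, c) for c in clusters]
```

With these sample points, the three signatures around 10 m end up in one object and the two around 30 m in another.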

You may also notice that with RADAR, we distinguish moving from stationary objects. We're doing it because RADARs work that way!

👉 They use the Doppler effect to tell if objects are moving away from or towards you, so they're extremely good at measuring movement.
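Here's a rough sketch of how that works, using the standard Doppler relation for a RADAR: the frequency shift is `2 * v * f_carrier / c`, so you can solve for the radial speed `v`. The 77 GHz carrier (a common automotive band), the sign convention, the 0.5 m/s threshold, and the function names are my assumptions for illustration, not details from the system shown here.

```python
from math import cos

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def range_rate(doppler_shift_hz, carrier_hz=77e9):
    """Radial velocity in m/s from a Doppler shift.

    Assumed convention: a positive shift means the target is approaching,
    so the range rate (change of distance over time) is negative.
    """
    return -doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)


def is_moving(measured_range_rate, ego_speed, azimuth_rad, threshold=0.5):
    """Decide whether a detection is moving relative to the ground.

    A stationary object seen from a car driving forward at ego_speed appears
    to approach at ego_speed * cos(azimuth). Anything that deviates from that
    by more than the (assumed) threshold is labelled as moving.
    """
    expected_if_static = -ego_speed * cos(azimuth_rad)
    return abs(measured_range_rate - expected_if_static) > threshold


# Hypothetical case: ego car at 20 m/s, two detections straight ahead.
parked_car = is_moving(-20.0, ego_speed=20.0, azimuth_rad=0.0)
lead_car = is_moving(-5.0, ego_speed=20.0, azimuth_rad=0.0)
```

The parked car closes in at exactly our own speed, so it's labelled static; the lead car closes in more slowly than that, so it must be moving.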

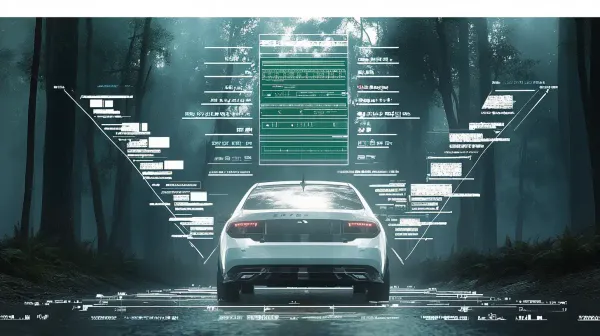

You already know what's on the camera image, so let's skip directly to this quick architecture showing what we want to accomplish:

The idea: project the RADAR signatures and detections onto the camera image, and match the RADAR output with the camera output (this is what I call late fusion).
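The projection step can be sketched with a simple pinhole camera model. To keep it short, I'm assuming the RADAR and camera axes are aligned (only an axis swap and a translation, no rotation), and the intrinsics `fx`, `fy`, `cx`, `cy` plus the mounting offset are invented numbers. A real setup would use a full calibrated extrinsic matrix.

```python
def project_radar_point(p_radar, t_cam, fx, fy, cx, cy):
    """Project a RADAR point into pixel coordinates with a pinhole model.

    p_radar: (x forward, y left, z up) in metres, RADAR frame.
    t_cam:   RADAR origin expressed in the camera frame
             (x right, y down, z forward), in metres.
    Returns (u, v) in pixels, or None if the point is behind the camera.
    """
    x_fwd, y_left, z_up = p_radar
    # Axis swap from RADAR convention to camera convention, then translate.
    xc = -y_left + t_cam[0]
    yc = -z_up + t_cam[1]
    zc = x_fwd + t_cam[2]
    if zc <= 0:
        return None
    u = fx * xc / zc + cx
    v = fy * yc / zc + cy
    return (u, v)


# Hypothetical setup: camera mounted 1 m above the RADAR, 1280x720 image.
pixel = project_radar_point(
    p_radar=(20.0, 2.0, 0.0),   # 20 m ahead, 2 m to the left
    t_cam=(0.0, 1.0, 0.0),
    fx=1000.0, fy=1000.0, cx=640.0, cy=360.0,
)
```

The point lands left of the image centre and slightly below it, which is what you'd expect for an object ahead on the left that sits lower than the camera.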

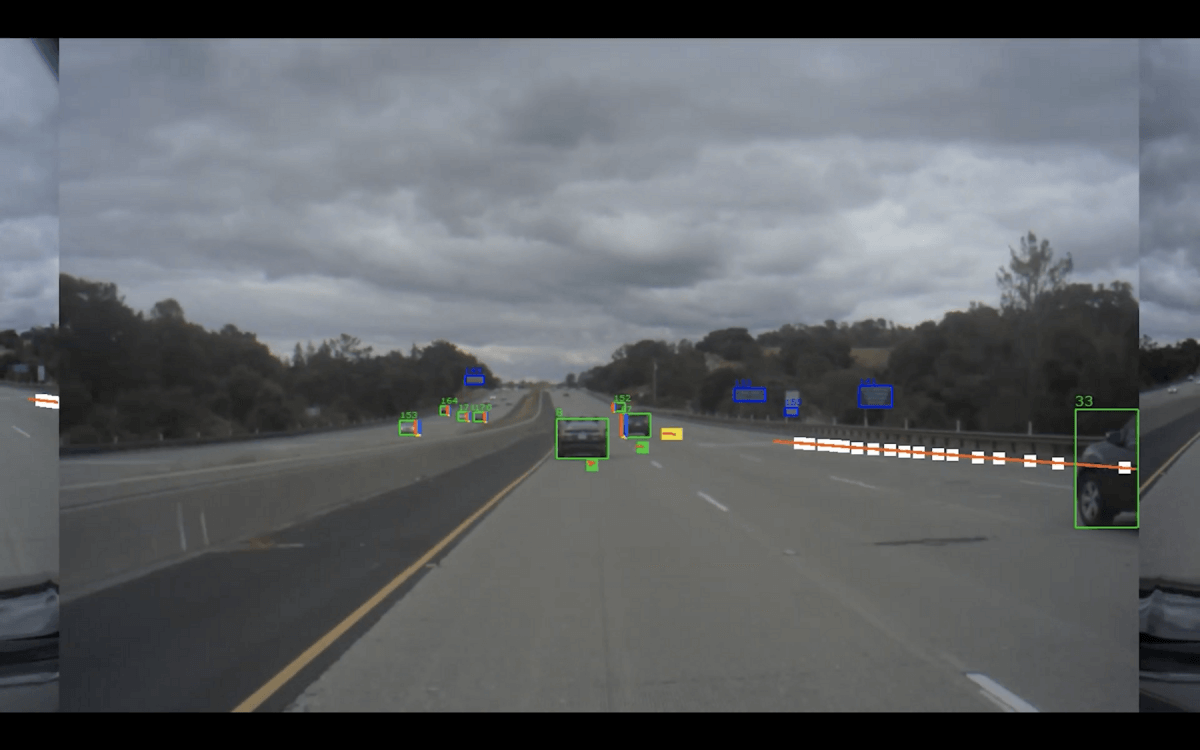

And here's the resulting projection on the camera image:

The white squares and orange tracks represent the RADAR tracking. Once an object has been tracked, you can see a small green square on the bounding box.

To do the fusion, we're fusing the camera bounding boxes with the RADAR "bounding boxes".

The fusion is achieved EXACTLY as I teach in the "Late Fusion" part of my course VISUAL FUSION: Expert Techniques for LiDAR Camera Fusion in Self-Driving Cars.
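If you've never seen box-level late fusion, here's one common way to do the matching: compute the IoU (Intersection over Union) between every camera box and every projected RADAR box, then greedily pair the best overlaps. I'm assuming IoU with greedy assignment and a 0.3 threshold purely for illustration; the system shown here may use a different cost or an optimal assignment such as the Hungarian algorithm. The boxes are made-up values in `(x1, y1, x2, y2)` pixel format.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_boxes(camera_boxes, radar_boxes, min_iou=0.3):
    """Greedy one-to-one matching, best IoU first.

    Returns a list of (camera_index, radar_index, iou) tuples.
    min_iou is an assumed threshold, not a value from the article.
    """
    candidates = sorted(
        ((iou(c, r), i, j)
         for i, c in enumerate(camera_boxes)
         for j, r in enumerate(radar_boxes)),
        reverse=True,
    )
    used_cam, used_radar, matches = set(), set(), []
    for score, i, j in candidates:
        if score < min_iou:
            break  # everything after this overlaps even less
        if i in used_cam or j in used_radar:
            continue
        used_cam.add(i)
        used_radar.add(j)
        matches.append((i, j, score))
    return matches


# Hypothetical detections in pixel coordinates.
camera_boxes = [(100, 100, 200, 200), (400, 120, 480, 220)]
radar_boxes = [(410, 130, 470, 210), (110, 90, 210, 190), (700, 300, 750, 350)]
matches = match_boxes(camera_boxes, radar_boxes)
```

Each matched pair can then carry the camera's class label together with the RADAR's range and velocity. The third RADAR box overlaps no camera box, so it stays unmatched.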

And here's the final result!

As you can see, RADAR Camera fusion is doable, easy to learn, and accurate.

📩 Thanks for reading this far! If you want, I invite you to join the Think Autonomous mailing list to receive daily content about Computer Vision for Self-driving cars and learn how to become a cutting-edge engineer!