LOXO: How to certify End-To-End algorithms in production with Jonathan Péclat

On June 4, 1996, the Ariane 5 rocket was ready to launch after years of work, public funding, and political pressure. The stress was at its peak, but just 40 seconds after liftoff, the rocket exploded, causing a loss of over $370M.

This event is one of the best known in software engineering, largely because of what caused the crash: a float-to-int conversion. The engineers reused code from Ariane 4 for Ariane 5, but overlooked that a float64 storing the horizontal velocity would be converted to a signed int16. About 40 seconds into the launch, the value no longer fit in 16 bits, the conversion failed, and the rocket was lost.
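To make the failure mode concrete, here is a minimal Python sketch of a narrowing conversion. It is an analogy only: the function names and sample values are hypothetical, and this is not the Ariane flight software or its data.

```python
import struct

INT16_MIN, INT16_MAX = -32768, 32767


def unchecked_to_int16(value: float) -> int:
    """Narrow a float to a signed 16-bit integer with no range protection.

    struct.pack raises struct.error when the truncated value does not fit,
    which stands in here for an unhandled conversion error.
    """
    packed = struct.pack("<h", int(value))
    return struct.unpack("<h", packed)[0]


def saturating_to_int16(value: float) -> int:
    """Same narrowing, but clamp out-of-range values instead of failing."""
    return max(INT16_MIN, min(INT16_MAX, int(value)))


small, large = 1234.5, 40000.0  # illustrative values, not flight data

print(unchecked_to_int16(small))   # fits in 16 bits, so it converts fine
print(saturating_to_int16(large))  # too large, so it is clamped to INT16_MAX

try:
    unchecked_to_int16(large)      # too large and unprotected: raises
except struct.error as exc:
    print("conversion failed:", exc)
```

The point is not which strategy is right (clamping can hide a problem too), but that the out-of-range case has to be decided on purpose rather than inherited from code written for a different vehicle.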

I think this story is a perfect introduction to autonomous vehicle safety, which we'll cover today with our guest Jonathan Péclat from Loxo.

A quick intro:

Loxo is a Swiss-based company started in 2022, when they built a first prototype of an autonomous shuttle. Since then, it has evolved into this vehicle, which now operates in Germany and Switzerland.

These robots navigate real streets, interact with real traffic, and do so using an architecture powered by End-to-End Deep Learning.

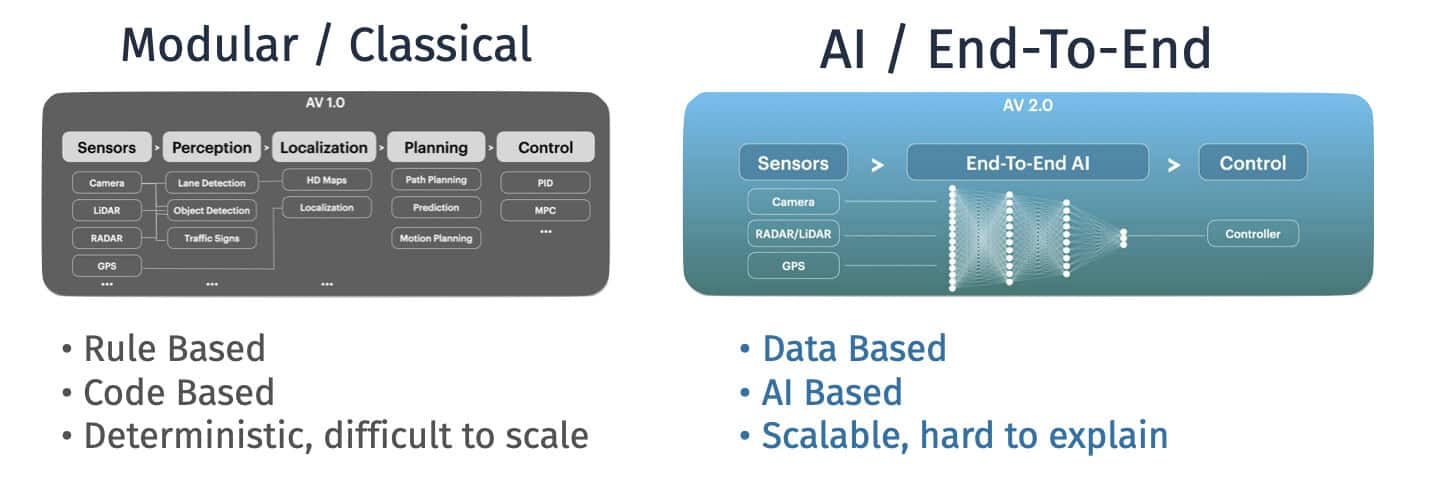

I find this incredible, because End-To-End Learning is purely AI-based. It's data-driven: you don't explicitly program the vehicle to stop at a red light, you show it how through examples from the dataset.

While a modular approach is pretty straightforward, and certification is about evaluating each individual block (is the lane detection safe? is the obstacle detection safe?)...

... End-To-End approaches are much more complex to evaluate, because they only output the final driving decision. I have an entire article covering the differences here.

So I asked Jonathan:

"How do you make End-To-End Learning safe?"

Here is what he explained:

LOXO uses End-To-End Learning in Production

As Jonathan pointed out:

“You cannot really prove that AI is safe, not today. So we run our AI system in parallel with another component that verifies the trajectory. If the AI violates any predefined rule, we switch to a deterministic safe path.”

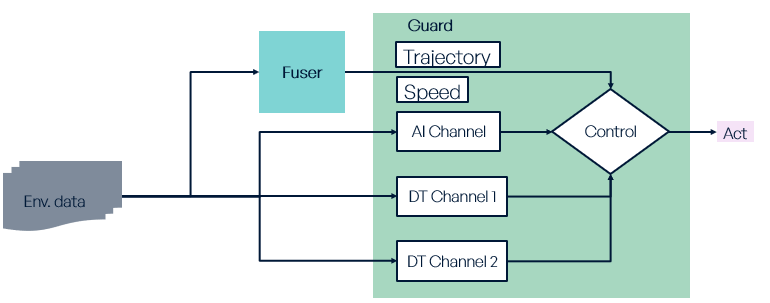

This point is crucial, because several self-driving car companies use exactly the same approach. LOXO does not rely on a single neural network but on four independent channels (two AI channels and two deterministic channels) running in parallel, each serving a different role in verifying, supervising, or backing up the End-to-End planner.
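Here is a minimal sketch of the verify-then-fall-back pattern Jonathan describes, under stated assumptions: the trajectory format, the two rules, and every name and threshold below are hypothetical and are not LOXO's implementation.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Hypothetical trajectory point: (x in m, lateral offset y in m, speed in m/s).
Point = Tuple[float, float, float]
Trajectory = Sequence[Point]


@dataclass(frozen=True)
class Rule:
    name: str
    is_satisfied: Callable[[Trajectory], bool]  # True when the rule holds


def max_speed(limit_mps: float) -> Rule:
    """Every point of the trajectory must stay at or below the speed limit."""
    return Rule("max_speed", lambda traj: all(p[2] <= limit_mps for p in traj))


def stay_in_corridor(half_width_m: float) -> Rule:
    """Every point must stay within the allowed lateral offset."""
    return Rule("corridor", lambda traj: all(abs(p[1]) <= half_width_m for p in traj))


def select_trajectory(
    ai_traj: Trajectory, safe_traj: Trajectory, rules: Sequence[Rule]
) -> Tuple[Trajectory, List[str]]:
    """Return the AI trajectory if it passes every rule, else the safe path."""
    violated = [rule.name for rule in rules if not rule.is_satisfied(ai_traj)]
    return (safe_traj if violated else ai_traj), violated


RULES = [max_speed(8.0), stay_in_corridor(1.5)]   # assumed thresholds
SAFE_STOP = [(0.0, 0.0, 2.0), (2.0, 0.0, 0.0)]    # assumed deterministic fallback
ai_plan = [(0.0, 0.0, 6.0), (5.0, 0.4, 9.5)]      # second point is too fast

chosen, violated = select_trajectory(ai_plan, SAFE_STOP, RULES)
print(violated)             # -> ['max_speed']
print(chosen is SAFE_STOP)  # -> True
```

The checker never tries to understand why the network chose its plan. It only tests the output against explicit rules, which is what makes this part of the system deterministic and easier to argue about.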

LOXO’s architecture is a clear illustration of the principle of redundancy: multiple algorithms and points of view, and, instead of a single point of failure, a structure that catches, compensates for, and, if needed, overrides failures. This is how an End-to-End system becomes safer and certifiable.

The key point to understand is that companies relying on End-To-End do not use just that one approach; they run multiple algorithms in parallel that verify and contradict each other. I have a complete breakdown of how Mobileye does it with their own End-To-End approach here, if you're interested.

Still, a question remains:

What exactly do you make redundant?

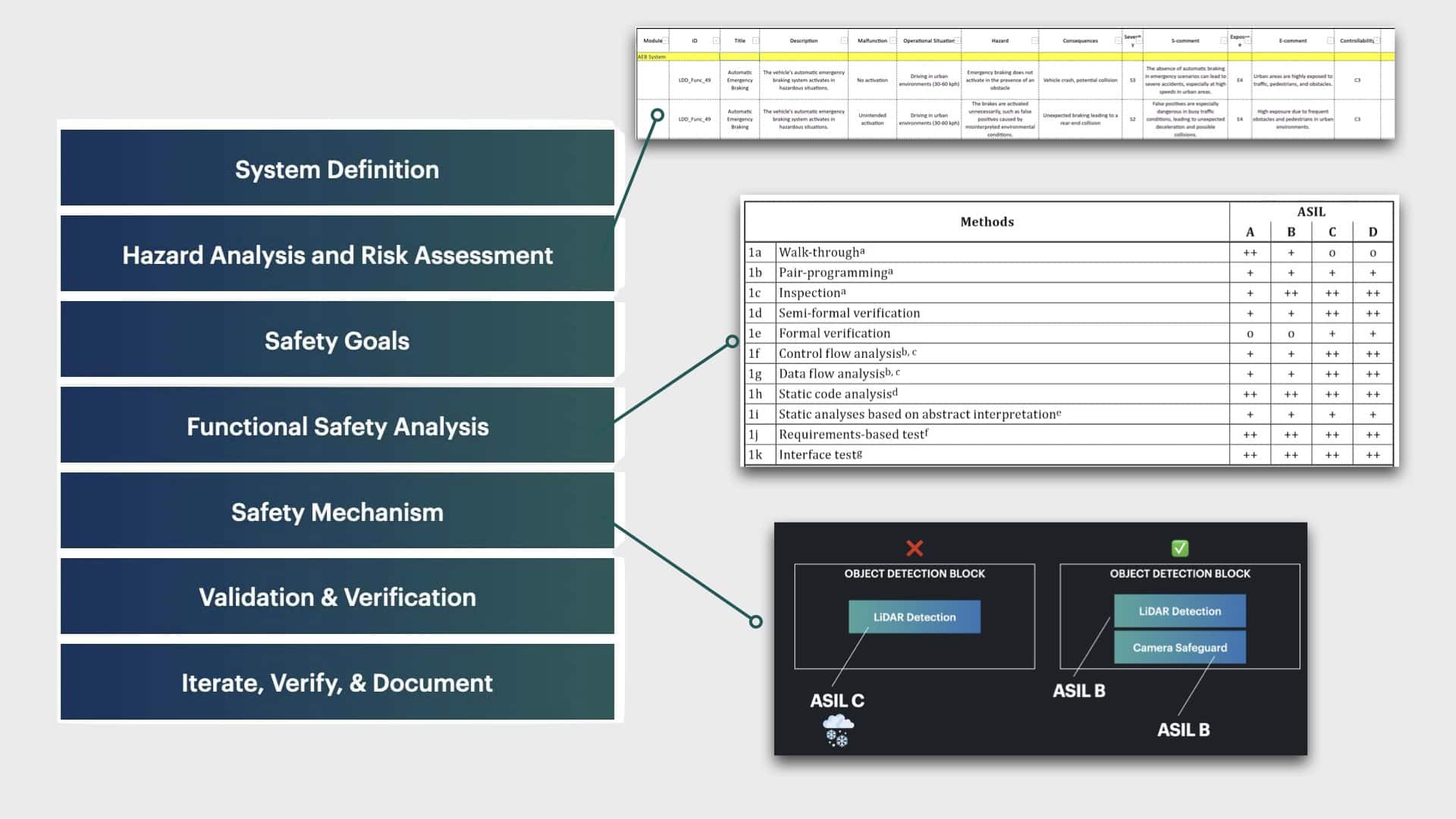

The sensors? The algorithms? What even is redundancy? This was my next question for Jonathan, who then explained the safety fundamentals of ASIL scoring and decomposition, using among other things a grading from A (lowest risk) to D (highest risk):

The entire principle relies on the concept of Functional Safety with ASIL Decomposition. This is a job of its own, one that often involves ISO standards, but if you'd like to explore it, I have a complete article covering how it works here.
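For orientation, here is a small sketch of ASIL classification and decomposition as they are commonly summarized from ISO 26262. The sum shortcut reproduces the standard's classification table but is only a mnemonic, the function and variable names are hypothetical, and none of this is LOXO's internal grading process.

```python
# Classes assumed from ISO 26262: Severity S1-S3, Exposure E1-E4,
# Controllability C1-C3. QM means "quality management" (no ASIL required).
ASIL_BY_SUM = {7: "A", 8: "B", 9: "C", 10: "D"}


def classify_asil(severity: int, exposure: int, controllability: int) -> str:
    """Map (S, E, C) class indices to QM or ASIL A-D using the sum shortcut."""
    if not (1 <= severity <= 3 and 1 <= exposure <= 4 and 1 <= controllability <= 3):
        raise ValueError("expected S in 1..3, E in 1..4, C in 1..3")
    return ASIL_BY_SUM.get(severity + exposure + controllability, "QM")


# Permitted ways to split one requirement across two redundant elements.
DECOMPOSITIONS = {
    "D": [("C", "A"), ("B", "B"), ("D", "QM")],
    "C": [("B", "A"), ("C", "QM")],
    "B": [("A", "A"), ("B", "QM")],
    "A": [("A", "QM")],
}

level = classify_asil(severity=3, exposure=4, controllability=3)
print(level)                  # -> D
print(DECOMPOSITIONS[level])  # -> [('C', 'A'), ('B', 'B'), ('D', 'QM')]
```

A decomposition only counts if the two elements are sufficiently independent, which is one reason to pair an AI channel with a deterministic one rather than with a second copy of the same network.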

And it doesn't stop there. In my interview, Jonathan explains the step-by-step framework Loxo implements, along with the internal documents they use to grade a function, evaluate its risk, and decide whether or not to make it redundant.

It's a complete masterclass we have inside The Edgeneer's Land, our community membership experience.

But for now, let's do a brief summary:

Summary

- When a self-driving car company uses End-To-End Learning, a single machine-learning model directly maps raw sensor data to driving actions or trajectories, without any manually written rules.

- While this can simplify system design and improve performance, it also makes the system harder to interpret, verify, and certify, especially in safety-critical and regulated environments.

- Companies like LOXO often use redundant channels that are the opposite of End-To-End channels, relying on point cloud processing, clustering, extraction, and other highly deterministic approaches to validate what the AI says.

- Functional safety methods like ASIL Decomposition still apply to End-To-End, and there are many processes used to certify self-driving car algorithms.

Next Steps?

If you want to go deeper into how safety is formally addressed in the autonomous driving industry (how risks are identified, graded, reduced, and documented), I detail the full process in this blog post about functional safety.

If you'd like to get the complete masterclass from Jonathan, I recommend checking out The Edgeneer's Land.