RNNs in Computer Vision

Deep Learning is generally segmented into three big fields: Traditional Neural Networks, Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs).

While the first is a general-purpose structure that can work on Big Data, CNNs are specialized in images and RNNs in sequences such as text or sound.

Here is the curriculum most courses (online and offline) follow.

We generally start by learning about the basic structure of neural networks and how to use regularization and hyperparameter tuning to prevent overfitting and other common problems.

Then, we dive into how to use these neural networks in the context of images and sequences such as text or sound.

This four-step process is very common and widely used. Some people then add Reinforcement Learning, autoencoders, or even GANs to this curriculum.

Many people then specialize in either Computer Vision through CNNs or Natural Language Processing through RNNs.

Generally, people go towards what they liked or understood the most.

The applications are usually quite separate.

I started learning about CNNs through self-driving cars and didn’t take a single RNN class, because I didn’t need NLP.

Some people work in chatbot companies and skip CNNs to focus on RNNs, which are much more useful to them.

Today, I’ll discuss whether learning both is a good idea or just a waste of time.

Learning CNNs

Let’s start with Computer Vision applications.

A very popular field of Deep Learning is Computer Vision, or the ability to work with images.

From image classification to image segmentation, a lot can be done.

The main neural network used for this task is called a CNN, or Convolutional Neural Network.

Convolutions take advantage of spatial information: they learn specific features and detect them regardless of their position in the image.
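To make this concrete, here is a minimal sketch in plain Python (no deep learning library). Like most frameworks, it actually computes a cross-correlation, which is what CNNs call a "convolution". The 2x2 vertical-edge kernel and the tiny images are hypothetical values chosen for illustration; in a real CNN, the kernel weights are learned.

```python
def conv2d(image, kernel):
    """Valid 2D convolution, stride 1 (a cross-correlation, as in most DL libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # The same kernel weights are reused at every position of the image.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Hypothetical vertical-edge kernel: responds where a dark pixel (0) meets a bright one (1).
edge_kernel = [[-1, 1],
               [-1, 1]]

# The same dark-to-bright edge at two different horizontal positions.
image_a = [[0, 1, 1, 1],
           [0, 1, 1, 1],
           [0, 1, 1, 1]]
image_b = [[0, 0, 0, 1],
           [0, 0, 0, 1],
           [0, 0, 0, 1]]

print(conv2d(image_a, edge_kernel))  # [[2.0, 0.0, 0.0], [2.0, 0.0, 0.0]]
print(conv2d(image_b, edge_kernel))  # [[0.0, 0.0, 2.0], [0.0, 0.0, 2.0]]
```

The same kernel fires on the edge wherever it sits; only the position of the response changes.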

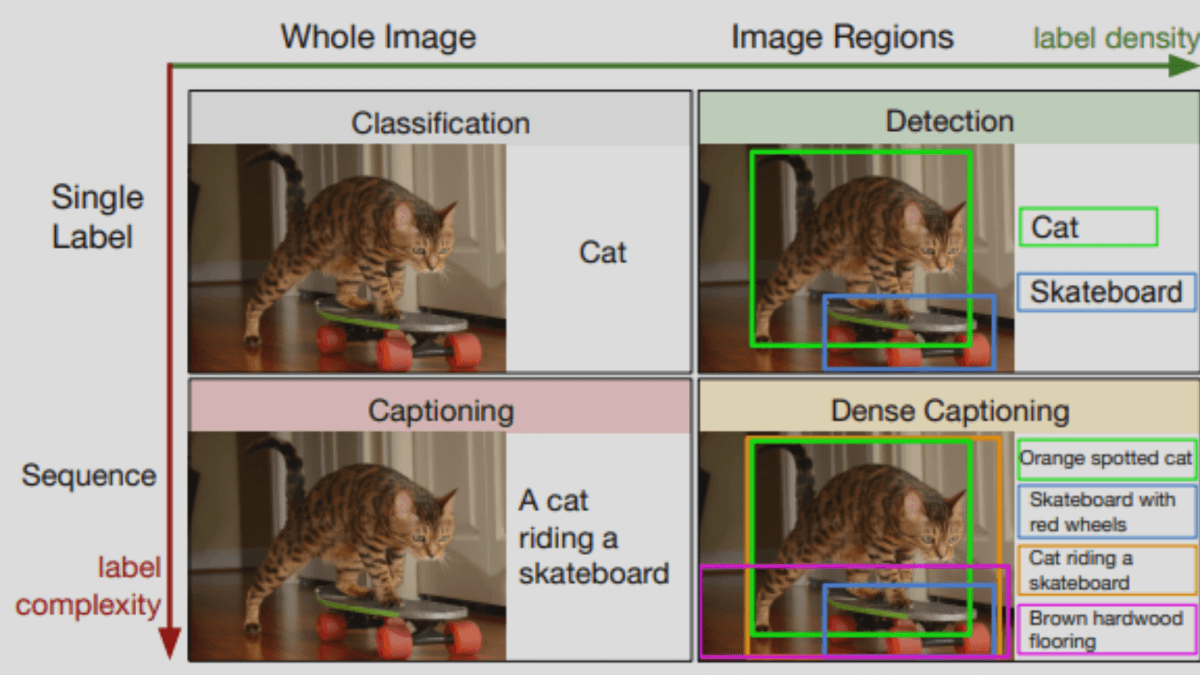

Let’s not get into the technical details here; let’s just understand what CNNs are used for.

Image Classification

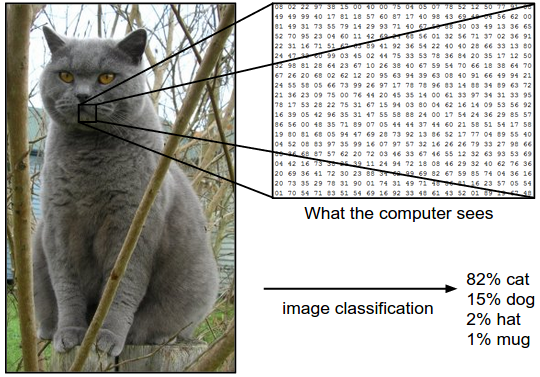

Image classification is the first task we can tackle with CNNs: assigning a label to an image.

The input is an image like the one on the left, and the output of the neural network is supposed to be a probability for each class (cat, dog, hat, mug, …).

If the neural network is trained correctly, it should be able to pick the correct label for each image with high accuracy.

Input images can be pictures of dogs and cats, but the same approach extends to identifying a dog’s breed, classifying satellite images, or even classifying medical cells.

Object Detection
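As a rough sketch of that last step, assuming the CNN has already produced one raw score (logit) per class, a softmax turns the scores into probabilities, and the predicted label is the most probable class. The logits below are made-up numbers; the class list reuses the example above.

```python
import math

CLASSES = ["cat", "dog", "hat", "mug"]

def softmax(logits):
    # Subtracting the max avoids overflow in exp(); the result sums to 1.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict(logits):
    probs = softmax(logits)
    # The predicted label is the class with the highest probability.
    best = max(range(len(probs)), key=lambda i: probs[i])
    return CLASSES[best], probs

# Hypothetical logits produced by a CNN's last layer for one image.
label, probs = predict([3.2, 1.1, -0.5, 0.3])
print(label)  # cat
print([round(p, 3) for p in probs])
```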

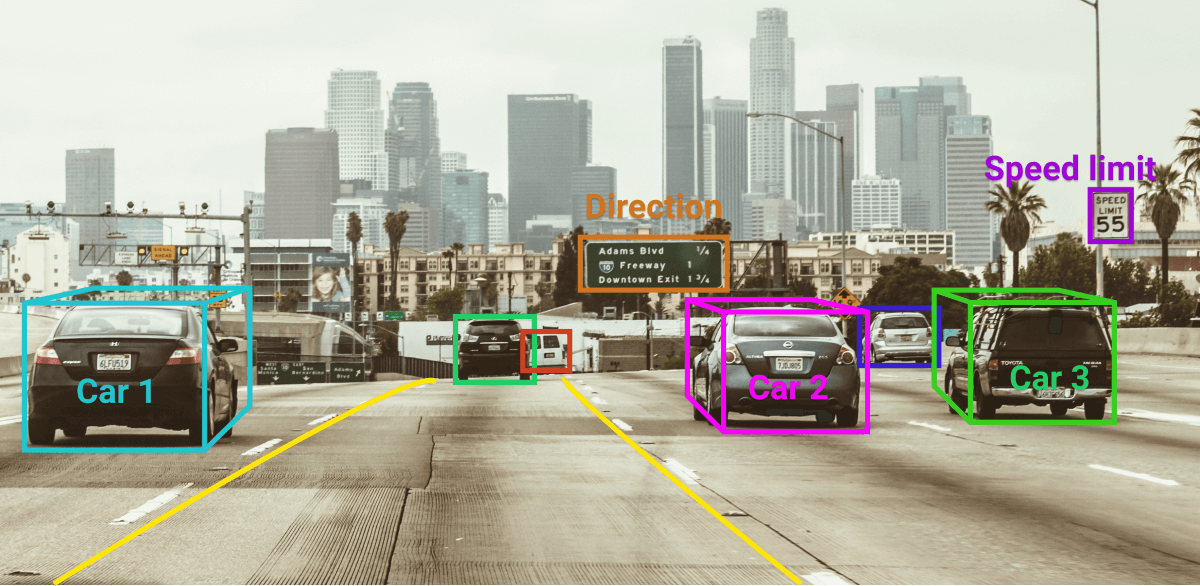

The second most used application of CNNs is object detection.

The goal is to output a set of bounding box coordinates from a picture. Here, we don’t output a class index (0, 1, 2, …) as in the first case, but, for each object, a list of 4 coordinates representing the bounding box and a probability score for the class.

The detection of the bounding box coordinates is actually a regression task in this case.
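Here is a minimal sketch of what one detection could look like, assuming boxes are stored as (x_min, y_min, x_max, y_max) in pixels. Real detectors use various box encodings and regression losses (for example, smooth L1); the plain L1 loss and the numbers here are illustrative assumptions, meant only to show that the box is a regression target.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    box: tuple    # assumed format: (x_min, y_min, x_max, y_max) in pixels
    label: str    # predicted class
    score: float  # probability for that class

def l1_box_loss(pred_box, target_box):
    # Regression loss: mean absolute error over the 4 coordinates.
    return sum(abs(p - t) for p, t in zip(pred_box, target_box)) / 4

# Hypothetical prediction and ground-truth box.
pred = Detection(box=(48.0, 30.0, 210.0, 160.0), label="car", score=0.91)
target = (50.0, 32.0, 205.0, 158.0)
print(l1_box_loss(pred.box, target))  # 2.75
```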

Object detection also performs classification, since it must tell which object it found: it can, for example, recognize a car among 80 or more object classes.

Image Segmentation

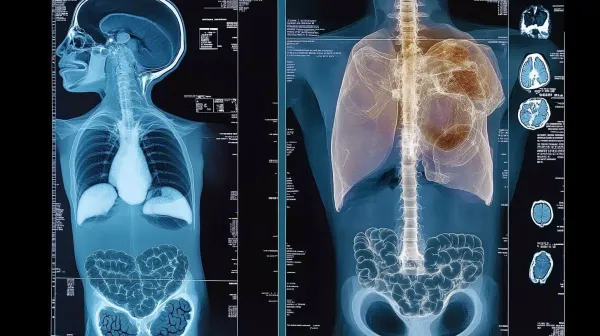

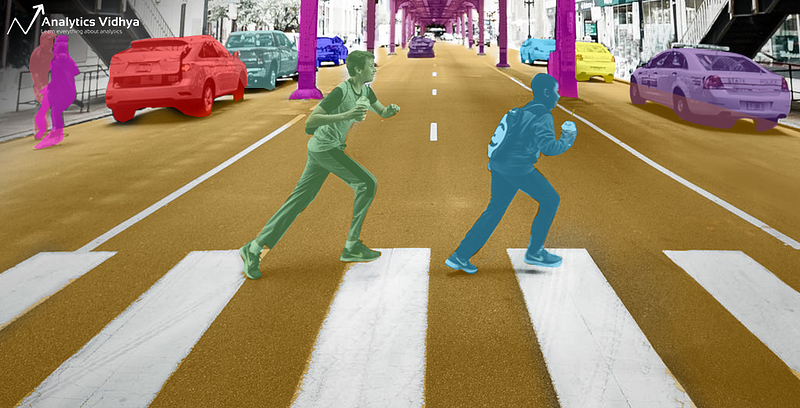

If we continue our overview of CNNs, we reach a neural network that can classify each pixel of an image into a class (road, pedestrian, car, …). Unlike object detection, which draws a box around each object, segmentation outlines the exact pixels of each object, and in instance segmentation each object is also identified individually. This is why the pedestrian on the left is not colored the same way as the one on the right.
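Per-pixel classification can be sketched as an argmax at every pixel, assuming the network outputs one score map per class. The score values are hypothetical. This only gives semantic labels; telling two pedestrians apart (instance segmentation) needs additional machinery not shown here.

```python
CLASSES = ["road", "pedestrian", "car"]

def segment(score_maps):
    """score_maps[c][i][j] is the network's score for class c at pixel (i, j)."""
    height, width = len(score_maps[0]), len(score_maps[0][0])
    label_map = []
    for i in range(height):
        row = []
        for j in range(width):
            # Each pixel gets the class with the highest score at that location.
            best = max(range(len(CLASSES)), key=lambda c: score_maps[c][i][j])
            row.append(CLASSES[best])
        label_map.append(row)
    return label_map

# Hypothetical 2x3 score maps, one per class.
scores = [
    [[0.9, 0.8, 0.1], [0.7, 0.2, 0.1]],   # road
    [[0.05, 0.1, 0.8], [0.2, 0.1, 0.1]],  # pedestrian
    [[0.05, 0.1, 0.1], [0.1, 0.7, 0.8]],  # car
]
for row in segment(scores):
    print(row)
# ['road', 'road', 'pedestrian']
# ['road', 'car', 'car']
```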

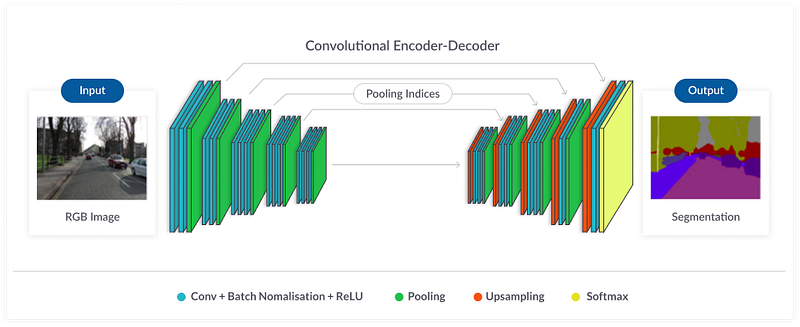

The output is an image. To get an image as an output (rather than a class or a number), the neural network has two parts: an encoder that learns the desired features, and a decoder that recreates the image from them. Techniques such as transposed convolutions are used in the decoder to upsample the features back to the input resolution.

The use cases are varied: from image segmentation for self-driving cars (drivable area detection) to satellite image segmentation or cancer detection.

Generally, learning CNNs alone gives you a very wide range of applications to work on. These 3 use cases cover most of the Computer Vision field.
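Here is a naive transposed convolution in plain Python, a sketch assuming a single channel and no padding. Each input value "paints" a scaled copy of the kernel into a larger output, so a stride of 2 doubles the spatial size. With a kernel of ones, it reduces to nearest-neighbor upsampling; in a real decoder, the kernel weights are learned.

```python
def transposed_conv2d(x, kernel, stride):
    """Naive single-channel 2D transposed convolution without padding."""
    h, w = len(x), len(x[0])
    kh, kw = len(kernel), len(kernel[0])
    out_h = (h - 1) * stride + kh
    out_w = (w - 1) * stride + kw
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(h):
        for j in range(w):
            # Each input value adds a scaled copy of the kernel at a strided offset.
            for di in range(kh):
                for dj in range(kw):
                    out[i * stride + di][j * stride + dj] += x[i][j] * kernel[di][dj]
    return out

features = [[1, 2],
            [3, 4]]
ones = [[1, 1],
        [1, 1]]
for row in transposed_conv2d(features, ones, stride=2):
    print(row)
# [1.0, 1.0, 2.0, 2.0]
# [1.0, 1.0, 2.0, 2.0]
# [3.0, 3.0, 4.0, 4.0]
# [3.0, 3.0, 4.0, 4.0]
```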

Learning RNNs

Since I started with self-driving cars, I learned CNNs first. Maybe that’s your case too.

For many years, I was quite unfamiliar with RNNs and the way they process text.

Let’s try and review a few applications of RNNs.

A Recurrent Neural Network is a network that can understand sequences and time.

In a Convolutional Neural Network, we process a single image at a time.

If we work on a video, we split it into images and treat each image independently. In a Recurrent Neural Network, we work on sequences: sets of time-related data where the order matters.

We can predict the next word in a sentence, which requires understanding all the previous words. We can generate music, which requires generating something that makes sense: each note must be coherent with the previous ones and can’t be treated independently.
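The core idea can be sketched with a scalar Elman-style RNN, where the hidden state h_t = tanh(w_x * x_t + w_h * h_{t-1} + b) carries a memory of everything seen so far. The weights are arbitrary illustrative values; a trained network would learn them, usually with vectors and matrices instead of scalars.

```python
import math

def rnn_forward(inputs, w_x, w_h, b=0.0, h0=0.0):
    """Scalar Elman-style RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = h0
    states = []
    for x in inputs:
        # The new state mixes the current input with the memory of the previous ones.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

sequence = [1.0, 0.0, -1.0]
forward = rnn_forward(sequence, w_x=1.0, w_h=0.8)
backward = rnn_forward(sequence[::-1], w_x=1.0, w_h=0.8)
print(round(forward[-1], 3), round(backward[-1], 3))  # different final states
```

Feeding the same values in reverse order gives a different final state, which a model treating each element independently could not capture.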

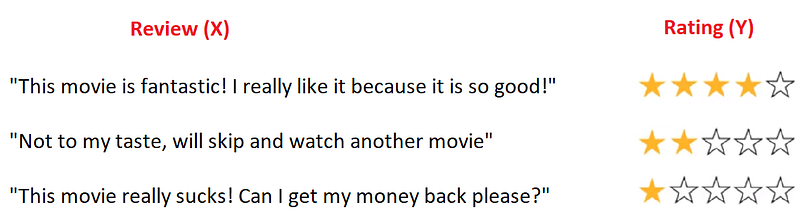

Let’s analyze the various use cases.

Classification (Many-To-One)

Let’s start with classification, to compare with image classification. We can take a series of words and feed it to an RNN so that it outputs a class.

For example, we can analyze a movie review and classify the text into positive or negative.
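Here is a toy many-to-one sketch for the movie review example, assuming each word is reduced to a single hypothetical "sentiment" number instead of a learned embedding, with hand-picked weights. The RNN reads the words one by one, and only its final hidden state is turned into a probability that the review is positive.

```python
import math

# Hypothetical one-number "embeddings": +1 for positive words, -1 for negative ones.
SENTIMENT = {"good": 1.0, "great": 1.0, "bad": -1.0, "boring": -1.0}

def classify_review(words, w_x=1.0, w_h=0.5):
    h = 0.0
    for word in words:
        x = SENTIMENT.get(word, 0.0)  # unknown words contribute nothing
        h = math.tanh(w_x * x + w_h * h)
    # Only the final hidden state is used: many inputs, one output.
    p_positive = 1.0 / (1.0 + math.exp(-h))
    return ("positive" if p_positive >= 0.5 else "negative"), p_positive

print(classify_review("a good movie".split()))
print(classify_review("a bad movie".split()))
```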

We talk about Many-To-One because we take many inputs and output a single element.

Word Prediction or Machine Translation (Many-To-Many)

If we wish to understand word meanings and predict the rest of a sentence from its first few words, we are in the context of a Many-To-Many prediction.

We take many words as inputs (the quick brown fox) and, after each word, try to predict the next one, taking into account all the previous ones.

Sometimes, the number of outputs differs from the number of inputs, as in language translation.

Sequence Generation (One-To-Many)

The previous case predicted a series of outputs by choosing, at each step, a word from a vocabulary.
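A minimal many-to-many sketch: the same scalar RNN emits an output after every input. The weights and inputs are hypothetical, and a real next-word model would output a probability distribution over a vocabulary rather than a single number.

```python
import math

def rnn_many_to_many(inputs, w_x=1.0, w_h=0.5, w_y=2.0):
    """Scalar RNN that emits y_t = w_y * h_t after every input step."""
    h = 0.0
    outputs = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h)
        outputs.append(w_y * h)  # one prediction per step, based on steps 1..t only
    return outputs

a = rnn_many_to_many([0.2, -0.4, 0.9, 0.1])
b = rnn_many_to_many([0.2, -0.4, 0.9, -3.0])  # only the last input differs
print(len(a))          # 4: as many outputs as inputs
print(a[:3] == b[:3])  # True: earlier outputs cannot see later inputs
```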

A very popular use-case we can deal with is sequence generation.

In this case, we feed a first element (a word or a note), and the neural network outputs a coherent sequence that follows it, such as the next notes of a melody.

If you learn RNNs, you gain access to a wide variety of fascinating jobs in almost every domain.
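One-to-many generation can be sketched as a loop that feeds each generated note back as the next input. Everything here is a hypothetical toy: a five-note vocabulary, one-hot inputs, and hand-picked weights that push each note towards the next one in the scale (a trained network would learn such weights from music). The choice is greedy (always the highest score); real generators often sample instead.

```python
import math

NOTES = ["C", "D", "E", "F", "G"]
N = len(NOTES)

# Hypothetical hand-picked weights: W_x maps note i to note i+1, W_h adds a small memory.
W_x = [[1.0 if row == (col + 1) % N else 0.0 for col in range(N)] for row in range(N)]
W_h = [[0.1 if row == col else 0.0 for col in range(N)] for row in range(N)]

def matvec(m, v):
    return [sum(m[r][c] * v[c] for c in range(len(v))) for r in range(len(m))]

def one_hot(i):
    return [1.0 if k == i else 0.0 for k in range(N)]

def generate(first_note, steps):
    sequence = [first_note]
    h = [0.0] * N
    x = one_hot(NOTES.index(first_note))
    for _ in range(steps):
        pre = [a + b for a, b in zip(matvec(W_x, x), matvec(W_h, h))]
        h = [math.tanh(p) for p in pre]
        nxt = max(range(N), key=lambda k: h[k])  # greedy pick; output layer = identity
        sequence.append(NOTES[nxt])
        x = one_hot(nxt)  # feed the generated note back as the next input
    return sequence

print(generate("C", 5))  # ['C', 'D', 'E', 'F', 'G', 'C']
```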

Learning Both: The next step?

Is learning (and mastering) CNNs and RNNs the next step if you know only one of them?

For a long time, I didn’t consider RNNs at all. For most of my Deep Learning career, I was a bad RNN practitioner. At best, I followed general courses such as the Deep Learning Specialization or a Nanodegree.

These are very good courses, but the examples are often far from real-life.

Mastering even one of them takes a long time, and you have better chances if you focus on a single one.

However, a couple of months ago, I needed to work on vehicle tracking.

When I want to learn a topic, I often write a Medium article about it.

Since I want the article to be good, writing it forces me to learn the topic very well.

When writing the article, I realized that vehicle tracking, although possible with Kalman Filters and the Hungarian Algorithm as explained in my article, was also possible using RNNs, because RNNs take advantage of sequences.
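For readers curious about the non-RNN approach, here is a sketch of the matching step only, assuming boxes as (x_min, y_min, x_max, y_max) and a cost of 1 - IoU (Intersection over Union). Instead of the Hungarian Algorithm, it brute-forces every assignment, which finds the same optimal matching but is only practical for a handful of boxes. There is no Kalman Filter here, so no motion prediction.

```python
from itertools import permutations

def iou(a, b):
    """Intersection over Union of two boxes (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)

def match(tracks, detections):
    """Assign each track to a distinct detection, minimizing the total (1 - IoU).

    Brute force over permutations: same optimum as the Hungarian algorithm,
    but exponential in the number of boxes. Assumes len(tracks) <= len(detections).
    """
    best_cost, best_assignment = float("inf"), None
    for perm in permutations(range(len(detections)), len(tracks)):
        cost = sum(1.0 - iou(tracks[t], detections[d]) for t, d in enumerate(perm))
        if cost < best_cost:
            best_cost, best_assignment = cost, perm
    return dict(enumerate(best_assignment))

# Two vehicles in frame t, and their detections in frame t+1 (listed in another order).
tracks = [(10, 10, 50, 40), (100, 20, 140, 60)]
detections = [(104, 22, 144, 62), (13, 11, 53, 41)]
print(match(tracks, detections))  # {0: 1, 1: 0}
```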

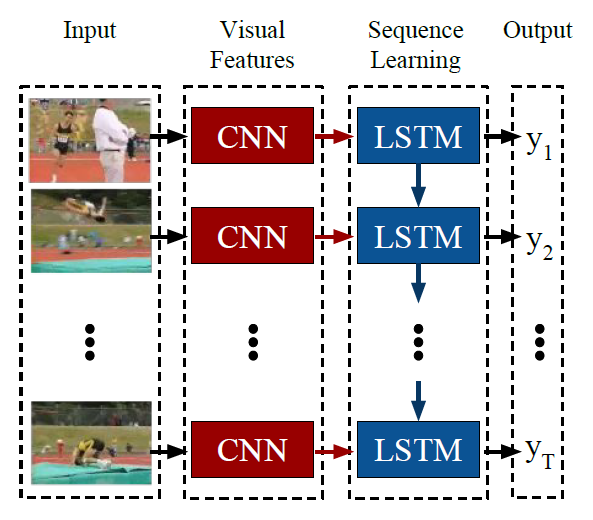

Sequences are not only text or music; they can also be videos (sequences of images).

It means that using RNNs and CNNs together is possible, and in fact, it could be one of the most advanced uses of Computer Vision we have.

For action classification or movie generation, the next step is to use RNNs.

To understand actions in videos, we must analyze multiple images, not just one. We can then use RNNs to track the convolutional features across frames.
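Here is a toy sketch of the CNN + RNN pipeline, under heavy simplifying assumptions: the "CNN" is a single averaging convolution followed by global average pooling, frames are tiny constant images, and the task (is the video getting brighter?) and weights are hypothetical. Real systems use deep pretrained CNNs and LSTM or GRU cells, but the structure is the same: one feature per frame, then a recurrent pass over the features.

```python
import math

def conv2d(image, kernel):
    # Valid 2D convolution (cross-correlation), stride 1.
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
             for j in range(len(image[0]) - kw + 1)]
            for i in range(len(image) - kh + 1)]

def frame_feature(frame):
    # Stand-in for a CNN: one convolution followed by global average pooling.
    fmap = conv2d(frame, [[0.25, 0.25], [0.25, 0.25]])
    values = [v for row in fmap for v in row]
    return sum(values) / len(values)

def video_score(frames, w_x=2.0, w_h=-1.0):
    h = 0.0
    for frame in frames:
        # The RNN consumes the per-frame CNN features in temporal order.
        h = math.tanh(w_x * frame_feature(frame) + w_h * h)
    return 1.0 / (1.0 + math.exp(-h))  # hypothetical "getting brighter" probability

def constant_frame(v, size=3):
    return [[v] * size for _ in range(size)]

brightening = [constant_frame(v) for v in (0.0, 0.5, 1.0)]
darkening = list(reversed(brightening))
print(video_score(brightening), video_score(darkening))
```

Both videos contain exactly the same frames; only their order differs, and only the recurrent part can tell them apart.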

A use case often used to teach this combination is image captioning.

Going further, video analysis also relies on techniques such as optical flow and even 3D CNNs. Most people learn about only one of the two fields; learning both puts you among the few who work with videos. A lot can be done: preventing shoplifting, replacing the Kinect, predicting cars’ future behaviors, understanding a football match…

Being one step ahead means doing something others aren’t, and learning both CNNs and RNNs is one way to do it.

📩 If you’d like to receive daily emails like this one to go further, I invite you to join the Think Autonomous’s Daily emails. This is the most efficient way to understand autonomous tech in depth and join the industry faster than anyone else.