Understanding BDD 100K — A Self-Driving Car Dataset

Building Self-Driving Car projects is nothing but easy. Over the years, a lot has been done in order to provide relevant help to Deep Learning Engineers who want to train models. The Data problem has been, for a long time, a huge one. Custom datasets, online annotation tools, everything was developed to help with Computer Vision.

When Berkeley Deep Drive Dataset was released, most of the self-driving car problems simply vanished.

Suddenly, everyone got access to 100,000 images and labels of segmentation, detection, tracking, and lane lines for free.

For beginners, examples often show a set of images, and one unique label being the class of the object.

Thus, we are used to the Image > Label association.

Computer Vision has evolved quite a lot…

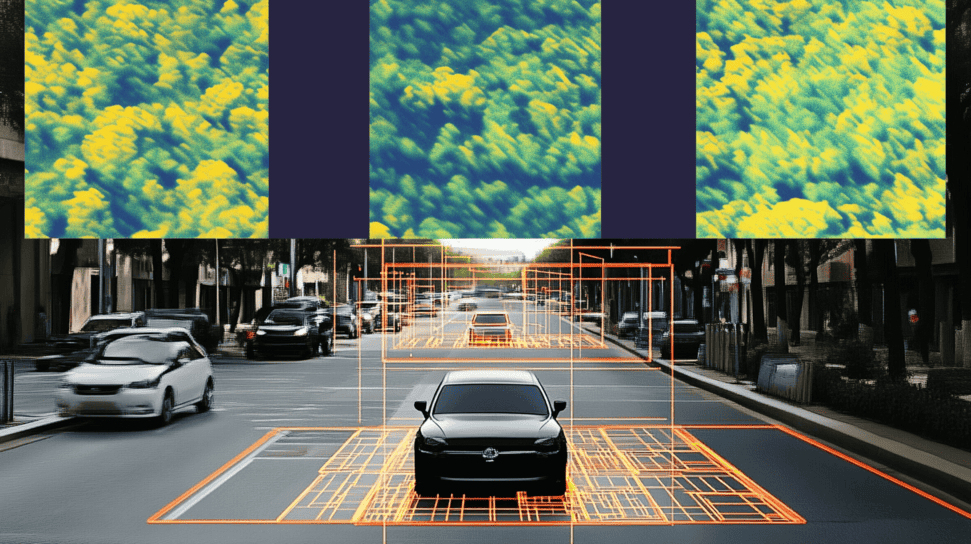

Obstacle detection, segmentation, scene understanding, pose estimation…

For sure, one image does not have one label anymore.

There can be dozens of labels for a single image.

If you aspire to train your own model on this dataset, it might not be easy to understand it for the first time.

This is why I made this article, I hope it can help you!

Images and… Labels?

When you log in to the BDD 100K portal , you can access a page that looks like this.

If you click on a button, you download the associated data.

That was the easy part.

Now, what does the data look like?

When clicking on every button, or almost, we get multiple folders. Images, Segmentation, Driveable Maps, Videos, Tracking, Labels, …

What is all of this?

We will start with image labels, and then consider the rest.

The first 3 folders, Images, Segmentation, and Driveable Maps, contain images and segmentation images.

Segmentation is a type of algorithm where the goal is to assign a class to each pixel by creating a map.

Thus, the label is an image where each pixel is made of one color representing a class.

Here, Image>Label becomes Image>Image.

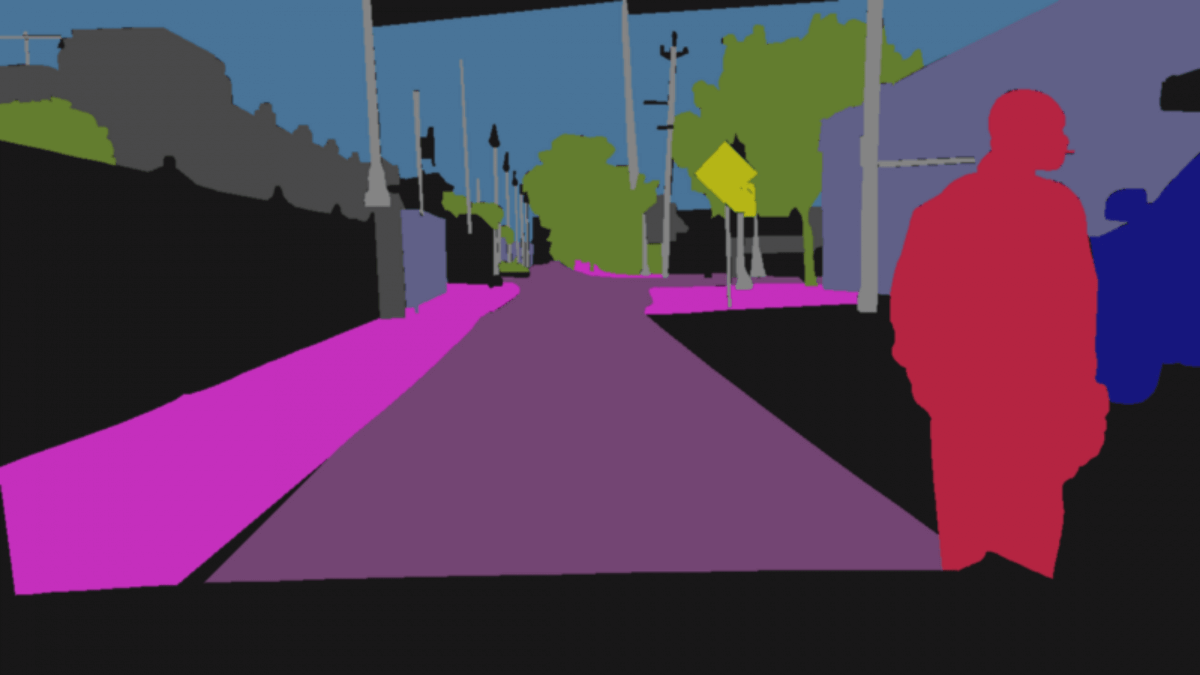

Image, Segmentation, and Driveable Area labels from BDD100K

The image is a 1280x720 RGB image.

The name of the image file is a specific number that can be found in every label image.

In semantic segmentation, each pixel is one object.

Here, the type of segmentation is full-frame segmentation.Thus you can see the road in purple, the sky in blue, the cars in marine, …

Finally, Driveable Maps represent the lanes.

Red pixels represent the ego lane, where it’s okay to drive. Blue pixels represent adjacent lanes.

For the semantic segmentation problem, we have a lot of classes.

Here is the distribution (number of examples/class).

How to use?

To use this type of data, you will need semantic segmentation algorithms. This is generally done by an encoder/decoder architecture.

What other types of labels do we have?

The next labels are not images.

Segmentation maps give us a lot of information, but probably not enough.

In particular, it would be nice to have the bounding boxes of each object.

With this, we will be able to run obstacle detection algorithms such as YOLO, FASTER RCNN, …

Understanding a JSON File

When you click the label button, you will download two JSON files: one for training, and one for validation.

A JSON file is a file where you can access information in a specific format.

To be a real Computer Vision Engineer, you’ll need to be able to access information in a JSON file.

Don’t worry, it’s one of the easiest things you will do in your programming career.

I will comment a bit, but this is straightforward.

Let’s study the JSON for our image.

“attributes”: {

“weather”: “clear”,

“scene”: “highway”,

“timeofday”: “daytime” >

},For the first part, we can see that the information is just about the scene in general.

Here is the dataset distribution for these elements.

Next, we will access obstacles labels.

Obstacles

“timestamp”: 10000,

“labels”: [

{

“category”: “car”,

“attributes”: {

“occluded”: true,

“truncated”: false,

“trafficLightColor”: “none”

},

“manualShape”: true,

“manualAttributes”: true,

“box2d”: {

“x1”: 555.647397,

“y1”: 304.228432,

“x2”: 574.015906,

“y2”: 316.474104

},

“id”: 109344

},The second part will help a lot with obstacle detection.

Each obstacle is fully-described by a bounding box.

Every obstacle has the same information.

A labeling tool was used to manually draw the bounding box and add the information to a file.

As you can see, every information on the JSON can be given using the labeling tool.

Here is the obstacles distribution for this dataset.

How to use?

To use this type of information, you will need an obstacle detection algorithm such as YOLO, SSD, or FASTER RCNN.

The goal of the algorithm will be to predict bounding box coordinates.

Next, let’s see the driveable areas.

Driveable Areas

{ “category”: “drivable area”,

“attributes”: {

“areaType”: “direct”

},

“manualShape”: true,

“manualAttributes”: true,

“poly2d”: [

{

“vertices”: [

[

344.484243,

615.550512

],

… (similar content) …

[

344.484243,

615.550512

]

],

“types”: “LLLLCCC”, > Type of the lane

“closed”: true

}

],

“id”: 109351

},For each lane, one paragraph like this exists in the file.

Here again, we click on lane points to have an accurate representation of the road.

Each point you see on the JSON file is a point at the edge of a lane.

This is used for driveable area detection, but not for full-frame semantic segmentation.

If you want the labels for full-frame semantic segmentation, you need to refer to the image I showed at the beginning.

How to use?

For driveable area, the best way is through semantic segmentation, as showed before. If you use this JSON information, you can try to predict the lane curve equations, but this will need a strong model definition of your type of data.

Finally, let’s see the lane markings.

Lane Markings

“category”: “lane”,

“attributes”: {

“laneDirection”: “parallel”,

“laneStyle”: “dashed”,

“laneType”: “single white”

},

“manualShape”: true,

“manualAttributes”: true,

“poly2d”: [

{

“vertices”: [

[

492.879546,

331.939543

],

[

0,

471.076658

]

],

“types”: “LL”,

“closed”: false

}

],

“id”: 109356

},This is similar to the driveable area label, but for one single line.

The vertices represent a lane line equation of type y = mx+p (or higher degree).

Here is the dataset distribution.

How to use?

These last indications are relevant and can be used. For example, if a lane marking is parallel to the passing car, it may serve to guide cars and separate lanes; if it is vertical, it can be treated as a sign of deceleration or stop.

To use the 2D values, you will need a neural network that can predict lane line equations. The output will be a set of numbers that you try to regress. The numbers are those in the JSON file.

Lane lines can also be predicted through semantic segmentation.

The BDD 100K Dataset is immensely rich. It contains a lot of images, and labels for everything that we might want to do in self-driving cars.

One thing I didn’t see when studying the JSON files are 3D Bounding Boxes which are more and more relevant today.

You can access the full description on this page.

Training on a subset of these images will give you a very strong representation of the world. It decreases the need for data augmentation techniques such as image flipping, cropping, rotating, …

The same exists for videos, where you can this time access tracking for consecutive frames.

If you have a self-driving car project, I highly recommend this dataset.

Other datasets like CityScape or Kitty are not half as populated.

In my next course, we will use this dataset to train cutting-edge models!

📩To go further, I invite you to join my daily emails and learn how to become a self-driving car engineer by receiving daily career tips, learning the stories from the field, and get more technical content!