Tesla's HydraNet - How Tesla's Autopilot Works

Elon Musk's company Tesla is one of the self-driving car giants, leading the industry with incredible technology, bold choices, and a gigantic fleet of customers.

In 2020, I wrote an article to explain how Tesla's Computer Vision system worked. In his 2021 keynote at the Computer Vision and Pattern Recognition (CVPR) conference, Andrej Karpathy, Tesla's head of AI, gave a lot of new information on Tesla's system.

In this article, I'd like to explain how Tesla builds their Neural Networks, collects data, and trains their models, and, more importantly, what is inside their Neural Networks.

Let's take a look at Tesla in 2021.

Tesla's Computer Vision system in 2020

Last year, I explained how Tesla's system evolved from using Mobileye's Computer Vision pack to designing their own chips and building their own vision system. At the time, their Neural Net looked like this:

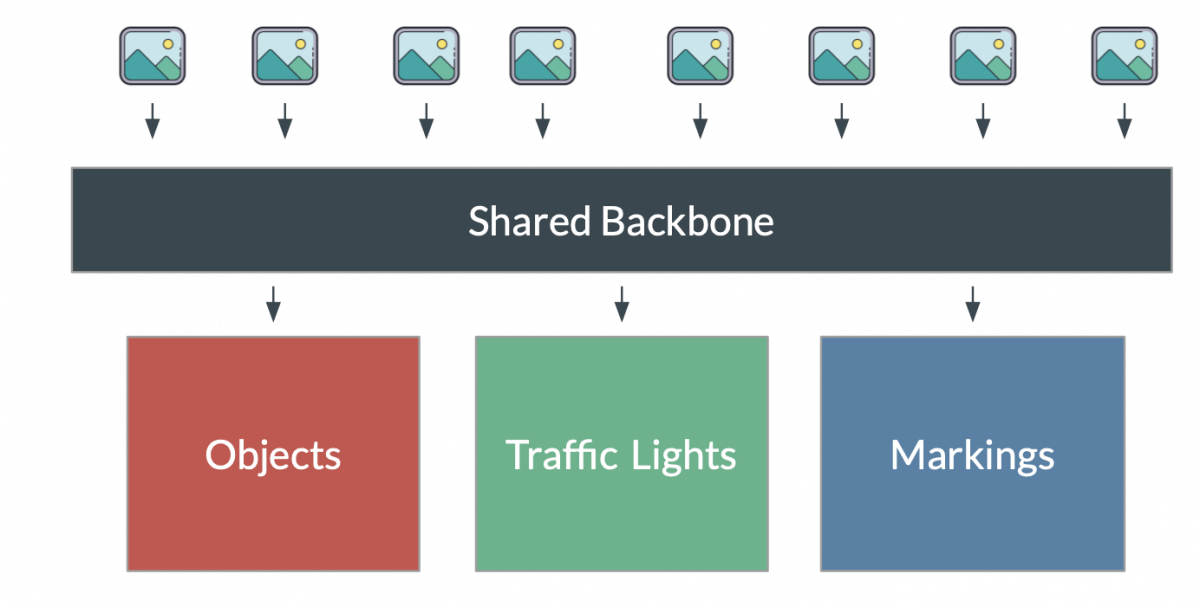

The 8 images are all synchronized and fused using a shared backbone. Then, several "Heads" are used. That structure is what Tesla calls a HydraNet: several heads, one body. The advantage of this structure is that you can fine-tune the neural network for specific use cases, such as object detection, without messing up the other tasks (lane lines, ...).

We also saw that Tesla doesn't use LiDARs, because of the cost and size of these sensors. Their system was made of cameras and RADARs. In that article, I go into more detail about why this choice makes sense for them, but might not for other companies such as Waymo.
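To make the "several heads, one body" idea concrete, here is a toy sketch in plain Python. Everything in it (the `ToyHydraNet` class, the layer sizes, the `objects` and `lanes` task names, the single gradient step) is a hypothetical illustration, not Tesla's code. The only point it shows is that when the shared backbone is frozen, fine-tuning one head cannot change another head's output.

```python
import random


def linear(weights, x):
    """Multiply a weight matrix (a list of rows) by the vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(v):
    return [max(0.0, a) for a in v]


class ToyHydraNet:
    """Hypothetical toy model: one shared backbone, several task heads."""

    def __init__(self, in_dim, feat_dim, head_dims, seed=0):
        rng = random.Random(seed)

        def rand_matrix(rows, cols):
            return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

        # Shared body: computed once per input and reused by every head.
        self.backbone = rand_matrix(feat_dim, in_dim)
        # One small linear head per task.
        self.heads = {name: rand_matrix(d, feat_dim) for name, d in head_dims.items()}

    def features(self, x):
        return relu(linear(self.backbone, x))

    def forward(self, x):
        feats = self.features(x)  # a single backbone pass feeds all heads
        return {name: linear(w, feats) for name, w in self.heads.items()}

    def finetune_head(self, name, x, target, lr=0.01):
        """One gradient step on 0.5 * squared error, for ONE head only.

        The backbone and the other heads are frozen (never modified)."""
        feats = self.features(x)
        pred = linear(self.heads[name], feats)
        for i, row in enumerate(self.heads[name]):
            err = pred[i] - target[i]  # derivative of 0.5 * err**2 w.r.t. pred[i]
            for j in range(len(row)):
                row[j] -= lr * err * feats[j]


def squared_error(pred, target):
    return sum((p - t) ** 2 for p, t in zip(pred, target))


net = ToyHydraNet(in_dim=4, feat_dim=8, head_dims={"objects": 3, "lanes": 2})
x = [0.5, -1.0, 0.25, 2.0]
target = [1.0, 0.0, 0.0]
before = net.forward(x)
net.finetune_head("objects", x, target)
after = net.forward(x)

print(after["lanes"] == before["lanes"])  # True: the lane head is untouched
print(squared_error(before["objects"], target), squared_error(after["objects"], target))
```

In a real network the heads would be trained with a deep-learning framework, but the design choice is the same: the expensive backbone is shared, and each task only owns the small part of the network it needs.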

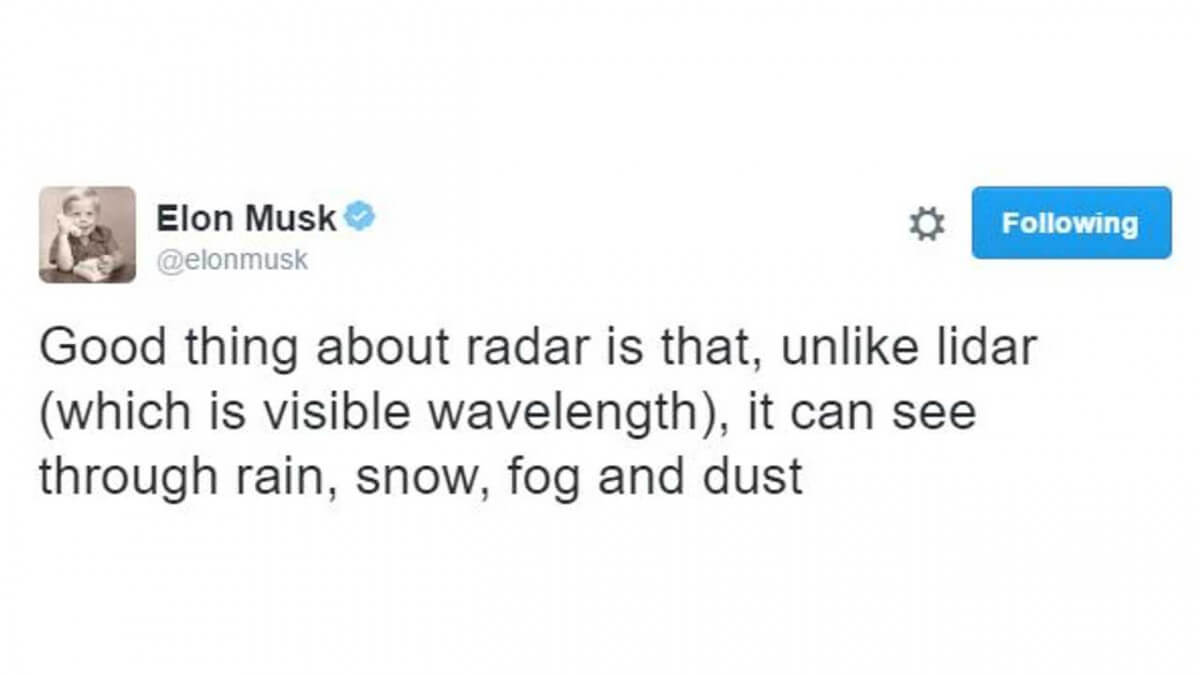

According to Elon Musk and the Tesla team, RADARs and cameras are enough for full self-driving.

RADAR is the sensor that helped Tesla sell cars without any LiDAR. As a reminder, Tesla's goal is to sell full self-driving cars for $25,000. As long as LiDARs cost $10,000 each, they're not an option.

In 2021, Elon Musk announced something else: The Vision system is now powerful enough to be used alone, without the RADAR.

A bit after this tweet, Tesla indeed removed the RADAR from their new cars and doubled down on a Vision-only approach, just like humans do!

In the rest of the article, I'd like to take a look at the new Vision-only system: how it has evolved since the original HydraNet, and how it might continue growing over the next few years.

The Vision Only Autopilot (2021)

To double down on Vision, Tesla had to move the entire RADAR team into a giant Computer Vision team. Well, not that giant: about 20 people. Take a minute and picture this: Tesla's entire Autopilot team is just about 20 people. I'm personally impressed by how small the team is, and how effective they are!

In the new configuration, Tesla works with 8 cameras only, which together provide a 360° view of the environment. Ultrasonic sensors also assist with parking.

The result of these 8 cameras is as follows:

According to Karpathy, cameras are 100 times better than RADAR, so the RADAR doesn't bring much to the fusion, and can even make it worse.

This is why they removed it.

In this article, I'd like to answer 2 main questions:

- How could they possibly remove the RADAR? 🤯 What was the exact process to transition from one system to another? Are the results better with cameras alone, or just similar?

- Is the new HydraNet better than the previous one?

Removing the RADAR

When asked about the RADAR, here's Tesla's answer.

"The reason people are squeamish about using Vision is [...] people are not certain if neural networks are able to do range finding or depth estimation of these objects. So obviously humans drive around with vision, so obviously our neural net is able to process the inputs to understand depth and velocity of all the objects around us." Andrej Karpathy

Data Labeling

Thousands of people drive a Tesla every day. Unfortunately, I'm not one of them. To train a Neural Network to predict depth, they need a HUGE dataset of millions of videos, with labels. How do they collect this? Simple! Thousands of people drive a Tesla every day!

👉 The data is coming from the fleet.

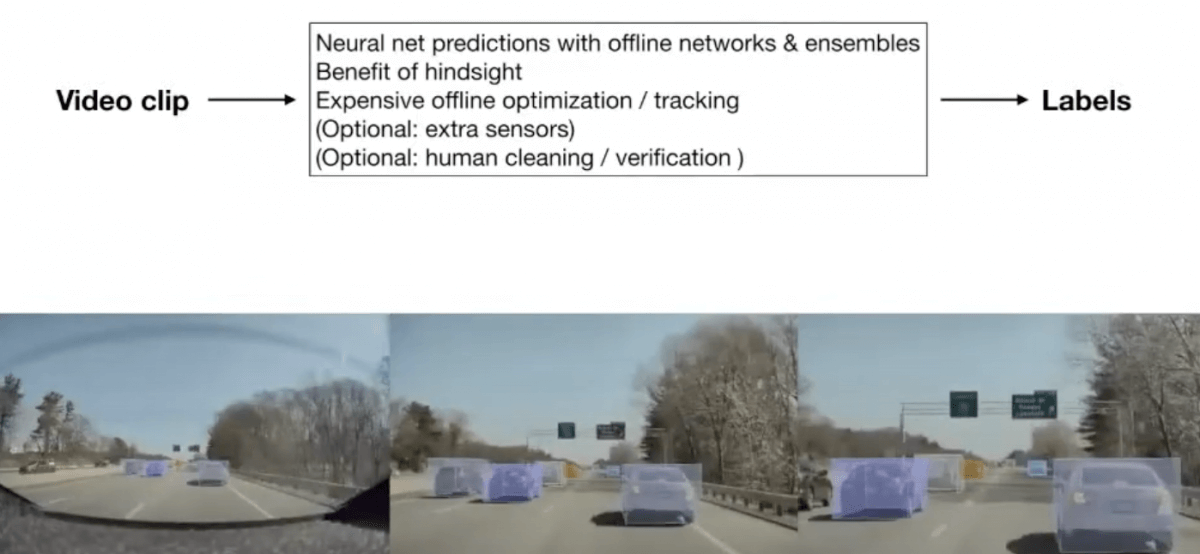

But how do you label thousands of hours of video? They use Neural Networks, and the labeling is somewhat semi-supervised.

They do the labeling offline. This has several advantages: since the labeling network doesn't have to run in real time inside the car, it can be super heavy, and it can even combine RADAR or LiDAR inputs. This is only for labeling, so anything that helps get the ground-truth label is a good option!
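Here is a minimal sketch of why offline labeling is so convenient: once a clip is recorded, the labeler can look at frames both before and after each moment, which the in-car network never can. The functions below (`smooth_centered`, `velocity_labels`, `auto_label`), the centered moving average, and the finite-difference velocity are hypothetical stand-ins for Tesla's much heavier labeling networks. The sketch assumes we already have a noisy per-frame distance estimate for one tracked car.

```python
def smooth_centered(values, half_window):
    """Average each sample with neighbors on BOTH sides (past and future).

    An in-car network can't do this: at time t, the future isn't available yet."""
    out = []
    n = len(values)
    for i in range(n):
        lo = max(0, i - half_window)
        hi = min(n, i + half_window + 1)
        window = values[lo:hi]
        out.append(sum(window) / len(window))
    return out


def velocity_labels(distances, fps):
    """Velocity in m/s: central differences inside, one-sided at the ends."""
    if len(distances) < 2:
        raise ValueError("need at least 2 frames to estimate velocity")
    dt = 1.0 / fps
    n = len(distances)
    vel = []
    for i in range(n):
        if i == 0:
            vel.append((distances[1] - distances[0]) / dt)
        elif i == n - 1:
            vel.append((distances[-1] - distances[-2]) / dt)
        else:
            vel.append((distances[i + 1] - distances[i - 1]) / (2 * dt))
    return vel


def auto_label(noisy_distances, fps, half_window=2):
    """Turn a noisy per-frame distance track into (distance, velocity) labels."""
    distance = smooth_centered(noisy_distances, half_window)
    return {"distance_m": distance, "velocity_mps": velocity_labels(distance, fps)}


# Example: a lead car closing in at 5 m/s, seen at 10 frames per second,
# with +/-0.2 m of alternating measurement noise.
fps = 10
true_dist = [30.0 - 0.5 * i for i in range(10)]
noisy = [d + (0.2 if i % 2 else -0.2) for i, d in enumerate(true_dist)]
labels = auto_label(noisy, fps)
print([round(v, 2) for v in labels["velocity_mps"][3:7]])  # [-5.0, -5.0, -5.0, -5.0]
```

In the interior of the clip, the smoothed distances land within about 0.04 m of the truth instead of 0.2 m, because each label is built from future frames as well as past ones.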

Once the Dataset is labeled, they can use it to train their Neural Network: the HydraNet.

🐸🐸🐸 The New HydraNet 🐸🐸🐸

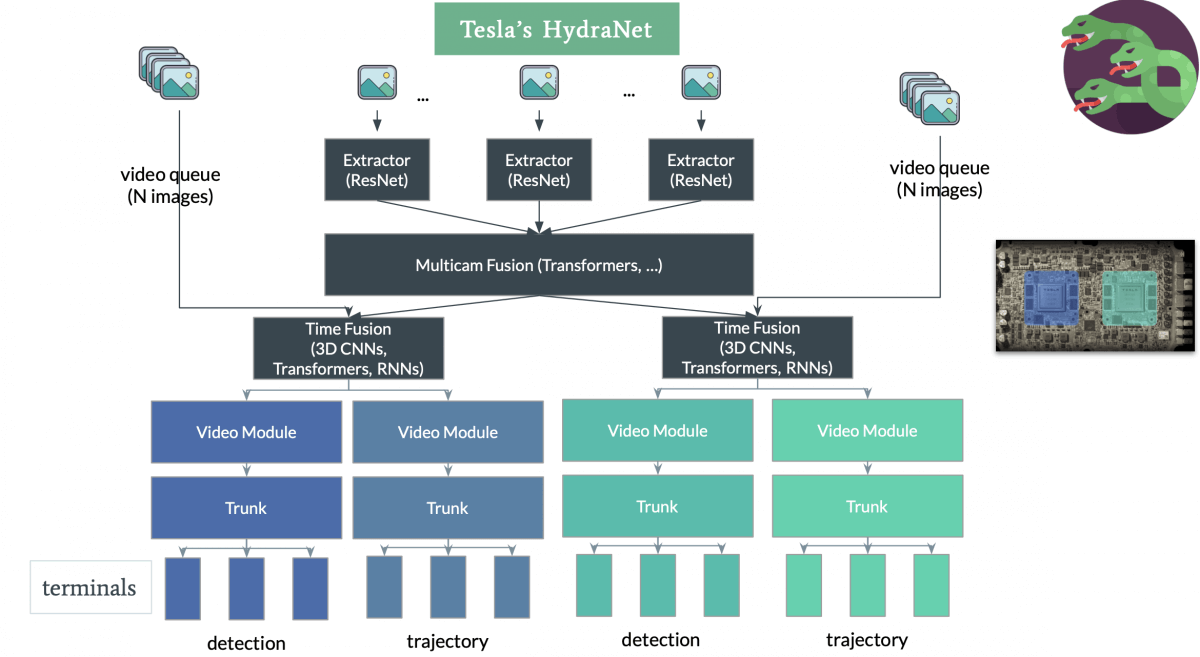

Here's the new HydraNet!

To detail what's going on:

- All 8 images are first processed by image feature extractors. To do so, architectures similar to ResNets are used.

- Then, there is a multicam fusion. The idea is to combine all 8 images into a single "super-image". For that, the HydraNet uses a transformer-like architecture.

- Then, there is a time fusion. The idea is to bring time into the equation and fuse the current super-image with the previous ones. For that, there is a video queue of N frames. For example, to fuse the last 2 seconds, given that the cameras run at 36 frames per second, N would be 72. The time fusion itself is done with 3D CNNs, RNNs, and/or Transformers.

- Finally, the output is split into HEADS. Each head is responsible for a specific use case, and can be fine-tuned on its own!
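The two fusion steps and the queue size can be sketched in a few lines of plain Python. This is a toy under stated assumptions: the single-query dot-product attention in `multicam_fuse` stands in for Tesla's transformer-like fusion, the plain average in `time_fuse` stands in for the 3D CNN / RNN / Transformer, and all names and feature sizes are hypothetical. Only the 36 FPS × 2 s = 72 frames arithmetic comes from the keynote.

```python
import math
from collections import deque

FPS = 36            # camera frame rate
SECONDS = 2         # how much history we want to fuse
N = FPS * SECONDS   # queue length: 72 frames


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multicam_fuse(camera_feats, query):
    """Fuse the per-camera feature vectors into one 'super-image' vector
    using single-query dot-product attention (a transformer-like step)."""
    dim = len(query)
    scores = [sum(q * f for q, f in zip(query, feat)) / math.sqrt(dim)
              for feat in camera_feats]
    weights = softmax(scores)
    return [sum(w * feat[k] for w, feat in zip(weights, camera_feats))
            for k in range(dim)]


class VideoQueue:
    """Keeps the last `maxlen` super-images; older ones drop off automatically."""

    def __init__(self, maxlen):
        self.frames = deque(maxlen=maxlen)

    def push(self, super_image):
        self.frames.append(super_image)

    def time_fuse(self):
        # Placeholder for the 3D CNN / RNN / Transformer: a plain average over time.
        if not self.frames:
            raise ValueError("queue is empty")
        n = len(self.frames)
        return [sum(col) / n for col in zip(*self.frames)]


queue = VideoQueue(N)
query = [1.0, 0.0, 0.0, 0.0]
for t in range(100):  # 100 frames arrive; only the last 72 are kept
    cams = [[float(t), float(c), 0.0, 1.0] for c in range(8)]  # 8 cameras
    queue.push(multicam_fuse(cams, query))
print(N, len(queue.frames))  # 72 72
```

Using a bounded `deque` means the memory cost of the time fusion stays fixed no matter how long the car drives.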

Where the heads used to be simple heads, they now have trunks and terminals. This is a way to go deeper and more specific for each type of use case: each terminal deals with its own task (pedestrian detection, traffic lights, ...).
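Here is a toy sketch of the trunk/terminal idea: each trunk refines the shared features once, and all of its terminals reuse that result. The task names, the tree layout, and the scale-and-shift "layers" are made up for illustration; in the real network, trunks and terminals are neural network layers.

```python
def make_layer(scale, bias):
    """Stand-in for a neural network layer: scale and shift every feature."""
    return lambda v: [scale * a + bias for a in v]


# Hypothetical head tree: head -> one trunk + several terminals.
HEADS = {
    "objects": {
        "trunk": make_layer(2.0, 0.0),
        "terminals": {
            "pedestrians": make_layer(1.0, 0.1),
            "vehicles": make_layer(1.0, -0.1),
        },
    },
    "traffic_controls": {
        "trunk": make_layer(0.5, 0.0),
        "terminals": {"traffic_lights": make_layer(1.0, 0.0)},
    },
}


def run_heads(features, heads):
    """Run every trunk once, then feed its output to each of its terminals."""
    outputs = {}
    trunk_calls = 0
    for head_name, head in heads.items():
        trunk_out = head["trunk"](features)  # shared by this head's terminals
        trunk_calls += 1
        for term_name, terminal in head["terminals"].items():
            outputs[f"{head_name}/{term_name}"] = terminal(trunk_out)
    return outputs, trunk_calls


outputs, trunk_calls = run_heads([1.0, 2.0], HEADS)
print(sorted(outputs))  # ['objects/pedestrians', 'objects/vehicles', 'traffic_controls/traffic_lights']
print(trunk_calls)      # 2 trunk passes for 3 terminal outputs
```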

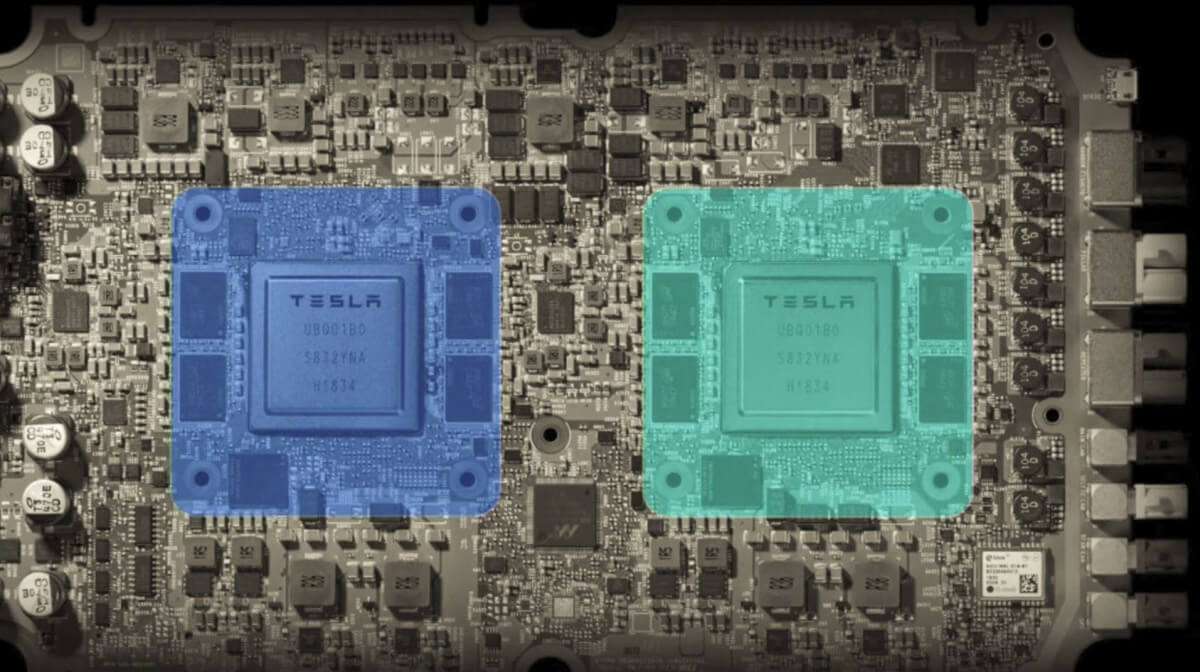

Something to note: as you can see, everything is split in 2: we have 2 time-fusion processes, 2 blue heads, and 2 green heads. This is actually a way to show that the neural network runs on the 2 chips in parallel.

My guess from the keynote is that the entire network runs on both chips in parallel to add redundancy. Finally, this Neural Network is secretly tested in every Tesla on the road; it's just not connected to the controls. Its output is compared to the output of the legacy RADAR/camera fusion. The network is improved over months, until one day it's ready to be the only system used!
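The testing loop described above (often called "shadow mode") can be sketched as follows. The `Frame` fields, the 2 m disagreement threshold, and the function names are hypothetical; the only idea taken from the keynote is that the new network's output is computed and compared against the legacy RADAR/camera fusion, but never sent to the controls.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    t: float                # timestamp (s)
    legacy_distance: float  # legacy RADAR/camera fusion estimate (m): drives the car
    vision_distance: float  # new vision-only network estimate (m): shadow only


def control_input(frame):
    """Only the legacy output ever reaches the controls."""
    return frame.legacy_distance


def shadow_disagreements(frames, threshold_m=2.0):
    """Return (timestamp, vision - legacy) for frames where the two estimates
    differ by more than threshold_m; these clips could be reviewed later."""
    flagged = []
    for f in frames:
        diff = f.vision_distance - f.legacy_distance
        if abs(diff) > threshold_m:
            flagged.append((f.t, diff))
    return flagged


frames = [
    Frame(0.0, 30.0, 30.4),
    Frame(0.1, 29.5, 26.0),  # large disagreement: worth a closer look
    Frame(0.2, 29.0, 28.8),
]
print([control_input(f) for f in frames])  # [30.0, 29.5, 29.0]
print(shadow_disagreements(frames))        # [(0.1, -3.5)]
```

Flagged disagreements are exactly the cases where one of the two systems is wrong, which makes them a natural source of new clips to label and train on.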

The Results

This entire article is based on Andrej Karpathy's keynote, which I recommend watching if you'd like to go deeper into the topic.

The results are simple: it works! In many cases, the RADAR had trouble figuring out what was going on, for example when the car in front of you brakes very hard. In those cases, they found that the HydraNet does a better job at predicting the real speed and distance.

In the video, Andrej Karpathy also goes further into the supercomputer used to train the neural network, the 5th most powerful computer on Earth!

Vision alone is definitely happening at Tesla this year. New cars are already shipping without RADAR! The work is astonishing, and also very encouraging for this approach!