6 Perception Engineer skills to get started (and 6 bonus skills to get advanced)

In a recent interview I did on YouTube, Sina, my interviewer, asked about my past as a Perception Engineer, and about how to make it in the self-driving car world:

"The majority of my audience, they are either students or just fresh graduates. They have a degree in engineering and they're trying to become a self-driving car engineer. So how would you suggest someone with a bachelor's in engineering go about becoming a self-driving car engineer?"

I rarely talk about "how to get started".

This is just not my topic.

I prefer to write and build courses for intermediate levels. Because I know that they need my help the most, and that the beginner level is somehow "covered".

In this article, I'm going to explore the beginner stage of Perception. And for that, I'm going to share with you 6 skills that are fundamental to learn. These 6 skills can take you from "I don't even know how to code" to "I can detect and track objects in mini-robots."

We'll then explore 6 Skills to move from an Intermediate Level to an Advanced Level.

This should be fun:

👉🏼 Before that, if you're looking for some kind of checklist, you can download my "Self-Driving Car Engineer Checklist" here.

Let's begin with:

6 Skills from Beginner to Intermediate Perception Engineer

Let's begin with 6 skills to learn from scratch.

#1. Maths, Python, & Linux: The Master's Degree

I expect most engineers reading this blog post to be either Master's students or already graduated. If you're not at this stage, then simply note that the "prerequisites" needed for this kind of job (Perception Engineer) are everything we learn at school. Including:

- Linear Algebra

- Calculus

- Probabilities

- Linux Command Lines

- Python

- Computers

- ...

Again, if you have a Master's Degree, you probably have all of it already.

#2. Machine Learning, Deep learning, & Computer Vision

Here is number 2: all the fundamentals about Machine Learning, Deep Learning and Computer Vision.

I sometimes call these boring, but I remember being fascinated when I first learned about all of this.

You can learn these skills from my friends at PyImageSearch, or via Andrew Ng's Courses.

To make it more interesting, I recommend moving away from the basic projects and datasets, and trying to do everything with either a custom dataset, or a dataset you don't see everywhere.

If you are able to build and train models with PyTorch or TensorFlow, handle OpenCV, classify images, and detect objects, you should be good.

This second point alone can take you an entire year. Be patient with it, and enjoy the process.

A note: I put object detection at the same level. Most engineers spend a lot of time trying to understand object detection, and it's a great thing. But I would actually avoid spending too much time focusing on the different versions of YOLO and which one is currently better.

In the end, it's just returning bounding boxes. And these rectangles in pixel coordinates are totally useless if you don't learn to postprocess them.

Which brings me to:

#3. Object Tracking: Making sense of your Skills.

Tracking means that instead of just detecting an object on every frame, you are able to follow it across multiple frames, attribute an id and color to its box, and even predict its next moves.

There is an interesting process when you learn tracking: you start trying to "use" your skills. It's no longer about detecting pedestrians at 20 FPS, but about predicting what the pedestrians are doing, whether they're running or walking, ...

Put differently, we're moving away from just detecting objects and toward interpreting a scene, which is much more powerful and useful to companies.
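To make the "id" part concrete, here's a minimal sketch of about the simplest tracker possible: match each new box to the previous box it overlaps the most (IoU), and start a new id when nothing overlaps enough. The class name `GreedyIouTracker` and the `0.3` threshold are made up for this illustration; real trackers usually add a motion model and a proper assignment step on top.

```python
def iou(a, b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


class GreedyIouTracker:
    """Hypothetical toy tracker: keeps one id per box across frames."""

    def __init__(self, iou_threshold=0.3):
        self.iou_threshold = iou_threshold  # assumed value, tune per use case
        self.tracks = {}                    # track id -> last known box
        self.next_id = 0

    def update(self, detections):
        """Match this frame's boxes to existing tracks; return {id: box}."""
        # Score every (track, detection) pair, best overlaps first.
        pairs = sorted(
            ((iou(box, det), tid, j)
             for tid, box in self.tracks.items()
             for j, det in enumerate(detections)),
            reverse=True,
        )
        assigned, used_tracks, used_dets = {}, set(), set()
        for score, tid, j in pairs:
            if score < self.iou_threshold:
                break  # every remaining pair overlaps even less
            if tid in used_tracks or j in used_dets:
                continue
            assigned[tid] = detections[j]
            used_tracks.add(tid)
            used_dets.add(j)
        # Detections that matched nothing start new tracks.
        for j, det in enumerate(detections):
            if j not in used_dets:
                assigned[self.next_id] = det
                self.next_id += 1
        # Tracks without a match are simply dropped in this sketch.
        self.tracks = assigned
        return assigned


tracker = GreedyIouTracker()
frame_1 = tracker.update([(10, 10, 50, 50), (100, 100, 150, 150)])
# Same two objects, slightly moved, and listed in the opposite order.
frame_2 = tracker.update([(102, 101, 152, 151), (12, 11, 52, 51)])
```

Even though the detector returns the boxes in a different order on the second frame, each object keeps its id, and that id is what lets you reason about one pedestrian over time.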

With that, learning tracking opens new fields such as Kalman Filters, Sensor Fusion, or even SLAM.

👉🏼 If you're interested in learning object tracking, you can check my dedicated (and revamped) course here.

#4. ROS: The skill everybody runs away from

A client of mine recently shared how he applied the content from my Portfolio and Resume courses to get an interview for an "Autonomous Driving Engineer" position.

Everything was going great. But at some point, he mentioned how the recruiters said the only blocking point was ROS.

ROS stands for Robot Operating System, and every self-driving car or Perception Engineer is either using ROS, or using a modified version of it.

There is no avoiding ROS.

👉🏼 The good news is, if you find it difficult and daunting, you can check this link and learn it in a super easy and rewarding way.

#5. Segmentation: The Skill Everybody Wants to Learn (But Nobody Really Knows Why...)

Something I find fascinating about image segmentation is that even though almost every professional Computer Vision Engineer knows how it works, a good 90% of them wouldn't know what to do with it for applications such as Robotics.

Yet, image segmentation is used in Perception, and there are even interesting ways to combine image segmentation with point clouds or other systems and make it 3D.

For example, in my course on HydraNets, I have a lesson about 3D Segmentation:

This kind of cutting-edge application of Perception wouldn't be possible without learning and understanding the fundamentals of image segmentation first, meaning:

- Convolutions

- Encoder-Decoder Architectures

- Upsampling

- Deep Learning Blocks (Residual Blocks, Inverted Blocks, ...)

- Reading Research Papers

- ...

Once you know about it, you can start exploring more advanced applications of Deep Learning (we'll go back to that in the second part of this article).

👉🏼 By the way, my course on Image Segmentation can probably help.

#6. Point Clouds: The Skill More Enjoyable to Show Than A 6 Pack

Finally, Point Clouds!

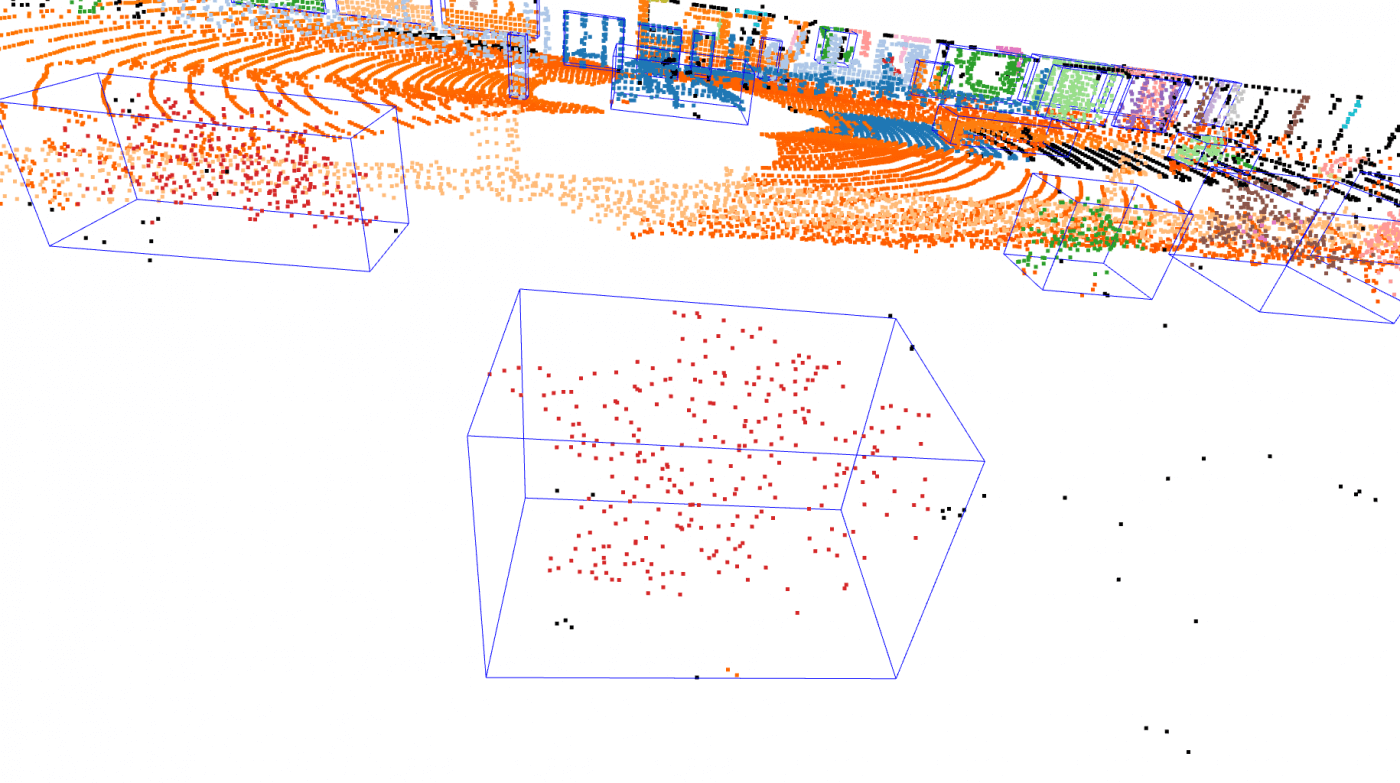

So far, we've been talking a lot about Computer Vision & Deep Learning. But Perception is actually also 50% about LiDARs & 3D Data.

If you don't know what a Point Cloud is, you can get introduced through this article.

Working on Point Clouds also, and most of all, means working on 3D Machine Learning techniques, such as:

- Outlier Removal

- Downsampling

- Clustering

- Principal Component Analysis

- ...

The result (taken from my Point Clouds Fast Course) looks like this:
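Separately from that result, here's a small NumPy sketch of two items from the list above: voxel downsampling and Principal Component Analysis. The function names, the synthetic "ground plane" and the voxel size are all assumptions made up for the example; dedicated point cloud libraries provide production versions of these steps.

```python
import numpy as np


def voxel_downsample(points, voxel_size):
    """Keep one point (the centroid) per occupied voxel of side `voxel_size`."""
    voxel_idx = np.floor(points / voxel_size).astype(np.int64)
    # `inverse` maps every point to the row of its voxel in the unique list.
    _, inverse = np.unique(voxel_idx, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)
    n_voxels = inverse.max() + 1
    sums = np.zeros((n_voxels, 3))
    np.add.at(sums, inverse, points)          # sum the points of each voxel
    counts = np.bincount(inverse, minlength=n_voxels)
    return sums / counts[:, None]


def pca_axes(points):
    """Return eigenvalues (descending) and matching principal axes (columns)."""
    centered = points - points.mean(axis=0)
    cov = centered.T @ centered / (len(points) - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)    # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]
    return eigvals[order], eigvecs[:, order]


# Synthetic, nearly flat "ground": 10 m x 10 m with 2 cm of vertical noise.
rng = np.random.default_rng(0)
ground = np.column_stack([
    rng.uniform(0, 10, 2000),
    rng.uniform(0, 10, 2000),
    rng.normal(0, 0.02, 2000),
])
small = voxel_downsample(ground, voxel_size=1.0)
_, axes = pca_axes(small)
normal = axes[:, -1]  # direction of least variance, i.e. the plane normal
```

On a flat patch like this one, the axis with the smallest variance ends up pointing along z, which is one simple way to estimate a surface normal from raw points.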

We just saw 6 Skills to go from 0 to 1.

Learn them (in that order works) and you'll have the foundations of the job title "Perception Engineer".

Now, what if you already have that, or want to go a little further and push it from 1 to 100? From Intermediate to Advanced?

6 Perception Engineer Skills from Intermediate to Advanced

As a reminder, these are skills for a role in Perception. Since my initial list had 6 skills, I kept this one to 6 as well, but there are indeed more, and you can check them out in my checklist.

#1 — Kalman Filters

I don't know any Perception Engineer who doesn't know about Kalman Filters.

This is just how it is.

Kalman Filters are everywhere there are sensors. And in a self-driving car, they are in almost every module.

There are lots of good resources to get started with Kalman Filters. I also have my own course on the topic, but I'd recommend getting started through my article on Sensor Fusion.

There are actually 3 types of Kalman Filters:

- Linear Kalman Filters

- Extended Kalman Filters

- Unscented Kalman Filters

The first type is used to track linear motion, and the second type is used for non-linear applications (for example, the sensor fusion case I'm mentioning in the article quoted earlier). The third also handles non-linear systems, using sampled sigma points instead of linearization.
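To give a feel for the first type, here's a minimal sketch of a Linear Kalman Filter tracking an object that moves at constant velocity, when only its noisy position is measured. The noise values `Q` and `R`, the time step and the 2 m/s scenario are assumptions picked for the example, not recommended settings.

```python
import numpy as np


def kalman_step(x, P, z, F, H, Q, R):
    """One predict + update cycle of a linear Kalman filter."""
    # Predict: push the state and its uncertainty through the motion model.
    x = F @ x
    P = F @ P @ F.T + Q
    # Update: blend the prediction with the measurement z.
    y = z - H @ x                        # innovation
    S = H @ P @ H.T + R                  # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)       # Kalman gain
    x = x + K @ y
    P = (np.eye(len(x)) - K @ H) @ P
    return x, P


dt = 0.1
F = np.array([[1.0, dt], [0.0, 1.0]])    # state = [position, velocity]
H = np.array([[1.0, 0.0]])               # we only measure position
Q = 1e-4 * np.eye(2)                     # process noise (assumed value)
R = np.array([[0.25]])                   # measurement noise (assumed value)

rng = np.random.default_rng(0)
x, P = np.zeros(2), 10.0 * np.eye(2)     # vague initial guess
for k in range(1, 201):
    true_position = 2.0 * k * dt         # object moving at 2 m/s
    z = np.array([true_position + rng.normal(0, 0.5)])
    x, P = kalman_step(x, P, z, F, H, Q, R)

print(f"estimated position={x[0]:.2f} m, velocity={x[1]:.2f} m/s")
```

The interesting part is that velocity is never measured: the filter infers it from how the position evolves. The Extended and Unscented variants keep the same predict/update loop and change how the non-linear models are handled.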

#2 — 3D Computer Vision

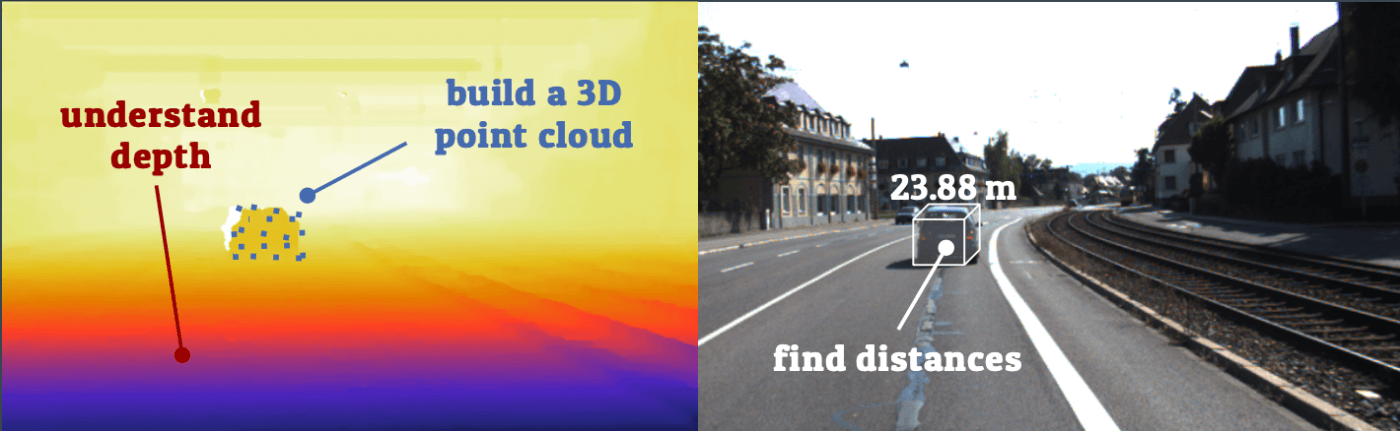

One of the best things you can learn when you feel "good" at Computer Vision is to explore 3D. The world is in 3D, and it just doesn't make any sense to remain at the pixel level.

If you look at Robotics companies, they all use 3D sensors, or 3D algorithms at some point. For example, they're going to use an RGB-D Camera, or they're going to use a LiDAR, or Stereo Cameras.

Computer Vision makes the most sense when it's used in 3D.

Here is an example of things you can do in 3D:

I took it from my course on Pseudo-LiDARs & 3D Computer Vision.
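As a separate illustration of leaving the pixel level, here's a minimal sketch (mine, written for this post) of two classic steps: turning a stereo disparity map into depth with Z = f * B / d, then back-projecting every pixel into a 3D point with the pinhole camera model. The intrinsics (`fx`, `fy`, `cx`, `cy`), the baseline and the constant disparity are made-up values.

```python
import numpy as np


def stereo_depth(disparity, focal_px, baseline_m):
    """Depth from stereo disparity: Z = f * B / d (zero where d <= 0)."""
    depth = np.zeros_like(disparity, dtype=float)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth


def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map (H, W), in metres, to an (N, 3) point cloud."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))   # pixel coordinates
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]                  # drop invalid pixels


# Toy 4 x 6 disparity map where every pixel has a disparity of 35 px.
disparity = np.full((4, 6), 35.0)
depth = stereo_depth(disparity, focal_px=700.0, baseline_m=0.5)
points = depth_to_points(depth, fx=700.0, fy=700.0, cx=3.0, cy=2.0)
```

With these numbers every pixel sits 10 m away, and the pixel at the principal point maps to the 3D point (0, 0, 10). The same back-projection applies to the depth channel of an RGB-D camera.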

#3 — 3D Deep Learning

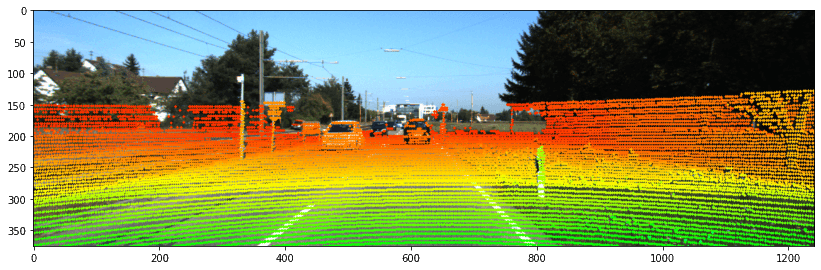

Still on the 3D topic, you can learn about 3D Deep Learning. It's used a lot when we have meshes, point clouds, or voxels. In an autonomous robot, you'll find 3D Deep Learning Algorithms for Point Clouds Segmentation & Object Detection in Point Clouds.

Here is an example:

I personally love this topic, and many of my clients who have been through my 3D Deep Learning course could find jobs on LiDARs after.

#4 — Video Processing & Prediction

If you can learn about 3D, you can also learn about 4D. Which means you can start to include time in the process. We've seen in other blog posts how sensors are now becoming 4D themselves, so consider that software must also be 4D.

As a Perception Engineer, a good skill to learn is Optical Flow and Object Prediction.

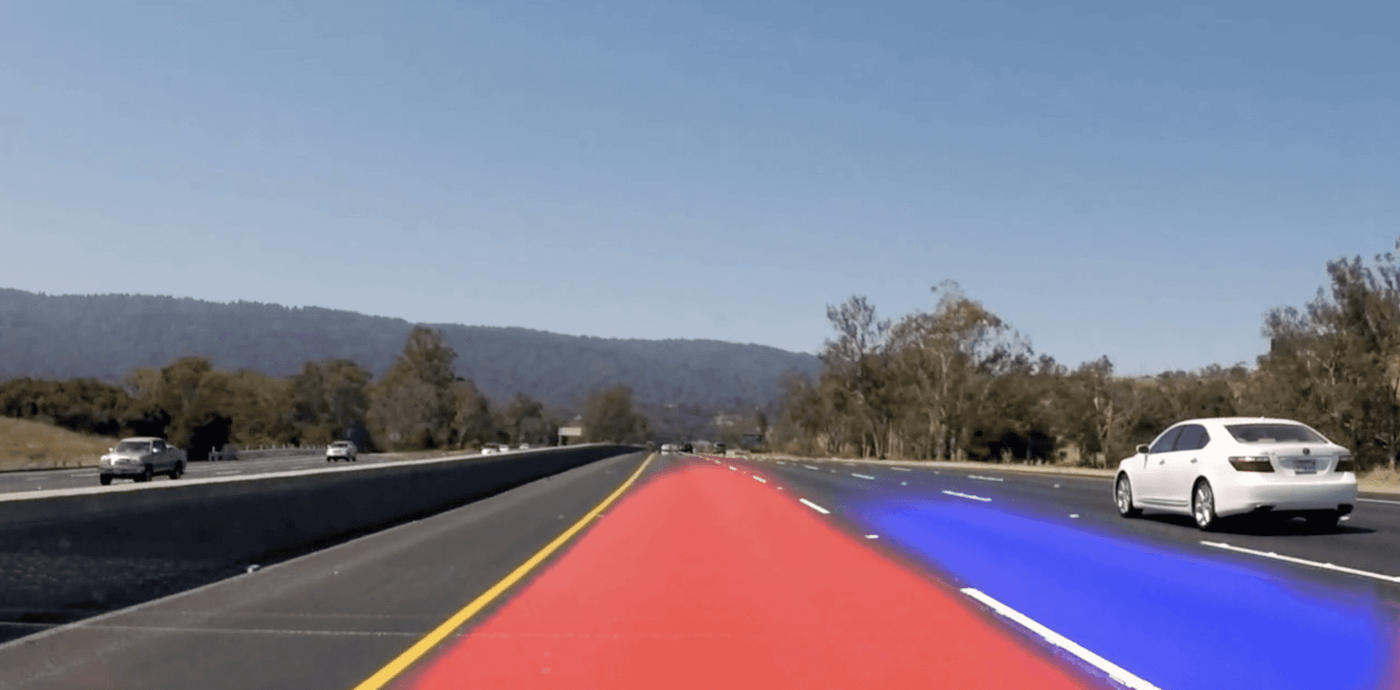

Let me show you an example from my course on Motion Prediction & Optical Flow:

In this example, we can assign a color to the motion of each person. If it's red, it goes up; if it's blue, it goes down.

The skills behind this are Computer Vision, but also Deep Learning, and in particular:

- Recurrent Neural Networks

- Deep Learning for 6D Inputs

- Deep Fusion — Correlation Blocks, Fused Convolutions, ...

- 3D Convolutions

So, this is all hardcore Deep Learning, and I happen to teach it here.
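The coloring step itself is the easy part. Here's a minimal sketch, assuming you already have a dense optical flow field of shape (H, W, 2) holding (dx, dy) in pixels per frame, for example from OpenCV's `calcOpticalFlowFarneback` or from a Deep Learning model. The function name and the `min_speed` threshold are hypothetical.

```python
import numpy as np


def color_vertical_motion(flow, min_speed=1.0):
    """Map a dense flow field (H, W, 2) of (dx, dy) to an RGB image."""
    dy = flow[..., 1]
    rgb = np.zeros(flow.shape[:2] + (3,), dtype=np.uint8)
    # Image rows grow downward, so a negative dy means the pixel moved up.
    rgb[dy <= -min_speed] = (255, 0, 0)   # red: moving up
    rgb[dy >= min_speed] = (0, 0, 255)    # blue: moving down
    return rgb


# Toy flow: the top row moves up by 3 px, the bottom row moves down by 2 px.
flow = np.zeros((4, 4, 2), dtype=np.float32)
flow[0, :, 1] = -3.0
flow[3, :, 1] = 2.0
colored = color_vertical_motion(flow)
```

Pixels slower than `min_speed` stay black, which keeps small jitter in the flow from lighting up the whole image.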

#5 — Sensor Fusion

Another essential topic for Perception Engineers: Sensor Fusion. We have several types of sensors. For the case of autonomous cars, we mainly have:

- LiDARs

- Cameras

- RADARs

Understanding how each of these works is the first part; understanding how to fuse them is the second.

You can learn more about it in my post 9 Types of Sensor Fusion Algorithms.

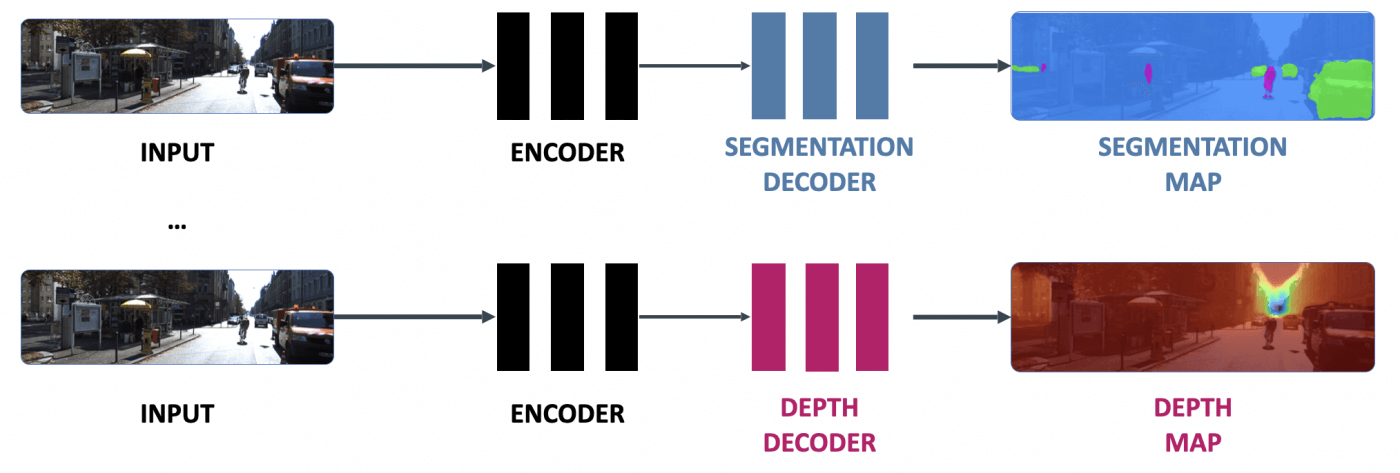

#6 — HydraNets

Finally, one of my favourites is HydraNets. I talked about it before in the Segmentation part, because Image Segmentation was one of the "heads".

HydraNets are Multi-Task Learning Architectures where a Neural Network can do more than one task. It's highly efficient in the case of self-driving cars, where we have dozens of tasks and can't afford dozens of neural networks, especially if the encoders repeat themselves.

Instead of:

We have:

You can learn more about this in my dedicated course.
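To show the shape of the idea, here's a toy sketch of a shared encoder feeding two heads. It uses NumPy with random, untrained weights only to stay dependency-light; a real HydraNet would be built from convolutional blocks in PyTorch or TensorFlow. The class name `TinyHydraNet`, the layer sizes and the two tasks are assumptions for the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)


def dense(n_in, n_out):
    """Random weights for one fully connected layer (untrained, shapes only)."""
    return rng.normal(0, 0.1, (n_in, n_out))


class TinyHydraNet:
    """Hypothetical two-headed network: one shared encoder, two task heads."""

    def __init__(self, n_in=64, n_feat=32, n_classes=5):
        self.encoder = dense(n_in, n_feat)         # shared by both tasks
        self.seg_head = dense(n_feat, n_classes)   # head 1: class scores
        self.depth_head = dense(n_feat, 1)         # head 2: one depth value

    def forward(self, x):
        # The encoder runs once (linear layer + ReLU); both heads reuse it.
        features = np.maximum(x @ self.encoder, 0.0)
        return {
            "segmentation": features @ self.seg_head,
            "depth": features @ self.depth_head,
        }

    def n_params(self):
        return self.encoder.size + self.seg_head.size + self.depth_head.size


net = TinyHydraNet()
outputs = net.forward(rng.normal(size=(8, 64)))   # batch of 8 inputs
shared = net.n_params()                           # 2048 + 160 + 32 = 2240
separate = 2 * net.encoder.size + net.seg_head.size + net.depth_head.size  # 4288
```

With two separate networks the encoder would be stored and run twice. Sharing it nearly halves the parameter count in this toy case, and the gap grows with every extra head.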

Okay, we just saw how to go from 0 to "Hero" in Perception.

As a reminder:

From 0 to 1:

- Linux, Python, Maths

- Machine Learning, Computer Vision, & Deep Learning

- Object Tracking

- ROS

- Image Segmentation

- Point Clouds

From 1 to More:

- Kalman Filters

- 3D Computer Vision

- 3D Deep Learning

- Video Processing & Prediction

- Sensor Fusion

- HydraNets

If you look at a Perception Engineer job description, you'll see this set of skills, sometimes written under different names. For example, 3D Computer Vision can be referred to by its more geometrical terms, such as "epipolar geometry". Video Processing can be referred to as "Optical Flow". But you now have somewhere to dig and begin your adventure.

I communicate about these topics daily in my emails, and if you're not subscribed yet, you might miss tomorrow's email about one of them. You can subscribe by downloading the Self-Driving Car Engineer Checklist, which goes even more in-depth on the exact set of skills you should learn.