LiDAR vs RADAR: How 4D Imaging RADARs and FMCW LiDARs disrupt the Autonomous Tech Industry

Back in 2020, a company contacted me because they needed my opinion on a robotic sensor stack they were working on. They had 2 days to finalize the sensor suite that would equip their autonomous delivery pods. Like many, they were considering a combination of all sensors: cameras, LiDARs, RADARs, and even ultrasonic sensors. But they also had concerns, and were wondering whether something better was available.

But in 2020, the combination of a LiDAR, a camera, and a RADAR was what made the most sense. "These sensors are complementary," I would reply. "The LiDAR is the most accurate sensor to measure distance, the camera is best for scene understanding, and the RADAR can see through objects and directly estimate velocities."

Is this still true? Don't we have camera-only systems today? Don't we have LiDAR-only systems that bypass RADARs? And don't we have RADARs that are getting as good as, if not better than, LiDARs? I think the idea of "complementarity" is changing. Today, sensors are getting more capable: FMCW LiDARs can measure speed, and Imaging RADARs can create accurate point cloud representations.

So, what is true and what isn't?

Let's take a look in 3 points:

- The Traditional LiDAR vs RADAR comparison

- The new LiDAR and RADAR sensors in self-driving cars

- LiDARs vs RADARs: The Modern Comparison


Traditional LiDAR and RADAR technology comparison

I believe the following no longer makes sense, but I am going to show it to you anyway, because this is what you'll see in 99% of other posts about the topic. Here is the idea in 3 points:

1 - LiDARs are great for distance estimation

LiDAR (Light Detection and Ranging) is a technology that uses laser light to measure distances and create detailed 3D maps of objects and environments. When distance estimators are evaluated today, LiDAR is often used as the "ground truth". LiDAR systems work by emitting laser pulses and measuring the time it takes for the light to come back. This idea is called "Time of Flight", and although there are multiple types of LiDARs, this is the overall idea.
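Time of Flight boils down to one line of arithmetic: the pulse travels to the obstacle and back, so the distance is half of the round-trip path, d = c·t/2. Here is a minimal sketch (the function name is illustrative, not taken from any LiDAR SDK):

```python
# Speed of light in vacuum, in meters per second (exact by definition of the meter).
SPEED_OF_LIGHT_MPS = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to a target from a laser pulse's round-trip time.

    The pulse goes to the obstacle and comes back, so the one-way
    distance is half of the total path length c * t.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_MPS * round_trip_time_s / 2.0


if __name__ == "__main__":
    # A return received 200 nanoseconds after emission: the target is roughly 30 m away.
    print(f"{tof_distance_m(200e-9):.2f} m")
```

It also shows why LiDAR timing electronics must be so precise: 1 nanosecond of timing error already means about 15 cm of range error.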

Here is an example:

Now, what does it produce? The answer is a point cloud of the environment. But not all point clouds look the same.

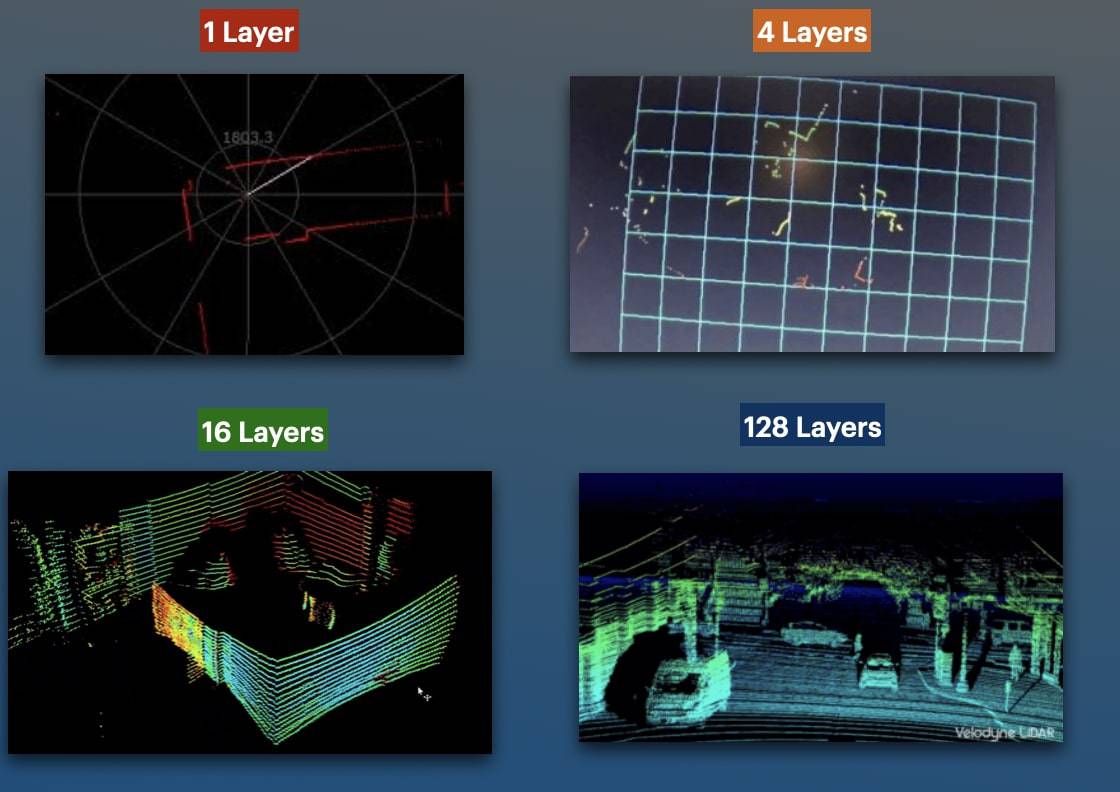

2D vs 3D LiDARs

Because I'm going to talk about 4D LiDARs, I first have to explain the idea of 2D and 3D LiDARs. It's explained in detail in my post "2D LiDARs: Too Weak for Self-Driving Cars?", in which I show that LiDARs use vertical "channels" (or layers), and that the more channels you have, the finer the 3D resolution.
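To see why channels matter, here is a minimal sketch of how a single LiDAR return (a range, a horizontal azimuth angle, and the fixed vertical angle of the channel that fired) becomes a 3D point. The channel elevation angles are made-up values for illustration, not the spec of any real sensor. A 2D LiDAR has a single channel at 0° elevation, so every point lands on the z = 0 plane.

```python
import math

# Hypothetical vertical angles (degrees) for a 4-channel LiDAR; real sensors
# have 16, 32, 64, 128+ channels with their own sensor-specific angles.
CHANNEL_ELEVATIONS_DEG = [-15.0, -5.0, 5.0, 15.0]


def return_to_xyz(range_m, azimuth_deg, elevation_deg):
    """Spherical-to-Cartesian conversion for one LiDAR return."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection of the range onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


def scan_column(ranges_m, azimuth_deg, elevations_deg):
    """One firing per channel at the same azimuth: more channels give more rows of points."""
    if len(ranges_m) != len(elevations_deg):
        raise ValueError("need exactly one range per channel")
    return [return_to_xyz(r, azimuth_deg, el) for r, el in zip(ranges_m, elevations_deg)]


if __name__ == "__main__":
    # 2D LiDAR: one channel at 0 degrees, so z is always 0.
    print(scan_column([10.0], 30.0, [0.0]))
    # Multi-channel LiDAR: the same azimuth now yields points at several heights.
    for point in scan_column([10.0, 10.0, 10.0, 10.0], 30.0, CHANNEL_ELEVATIONS_DEG):
        print(tuple(round(c, 2) for c in point))
```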

What more channels bring

LiDAR uses laser pulses to measure distances and create detailed 3D maps. The drawback is that if you want to measure a velocity, you need to compute the difference between 2 consecutive frames: how has the point cloud moved since the last scan? At low speed, this is good enough, but at high speed, waiting for the difference between 2 frames can mean several meters traveled before braking.
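Here is a minimal sketch of that frame-differencing approach. It assumes an object has already been segmented in both point clouds, and it uses the cluster centroid as the object's position, which is a simplification. The 10 Hz frame rate is an assumed value for illustration.

```python
def centroid(points):
    """Mean position of a cluster of (x, y, z) points."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def velocity_from_frames(cluster_prev, cluster_curr, dt_s):
    """Velocity estimate (m/s) from the centroid displacement between two frames."""
    c_prev = centroid(cluster_prev)
    c_curr = centroid(cluster_curr)
    return tuple((b - a) / dt_s for a, b in zip(c_prev, c_curr))


def distance_while_waiting(ego_speed_mps, frames_needed, frame_rate_hz):
    """Distance the ego vehicle travels before enough frames exist to estimate velocity."""
    return ego_speed_mps * frames_needed / frame_rate_hz


if __name__ == "__main__":
    frame_rate_hz = 10.0  # assumed LiDAR frame rate
    dt = 1.0 / frame_rate_hz
    prev = [(20.0, 0.0, 0.0), (20.0, 1.0, 0.0)]
    curr = [(19.0, 0.0, 0.0), (19.0, 1.0, 0.0)]
    # The object moved 1 m closer along x in one frame, i.e. it closes in at about 10 m/s.
    print(velocity_from_frames(prev, curr, dt))
    # At highway speed (30 m/s, about 108 km/h), each extra frame costs 3 m of travel.
    print(distance_while_waiting(30.0, 1, frame_rate_hz))
```

Real point clouds are noisy, so a single difference is rarely trusted: the estimate is usually smoothed over several frames, which makes the delay even longer.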

This is why we also like to combine it with a RADAR. Let's see it:

2 - RADARs are great velocity estimators

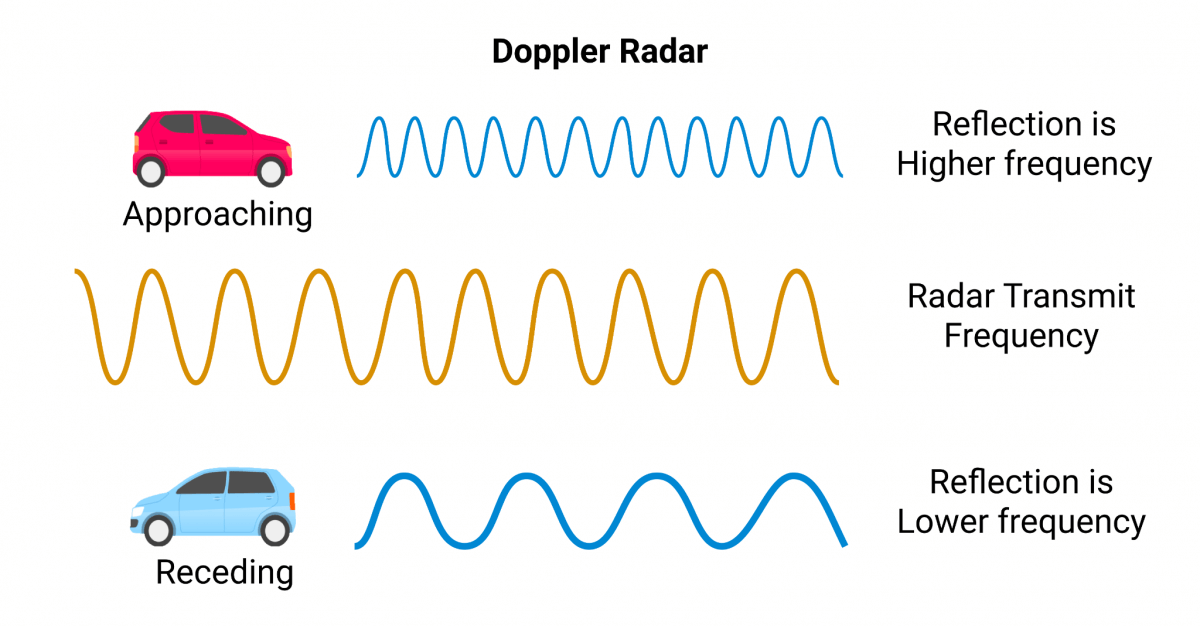

RADAR stands for Radio Detection And Ranging. It works by emitting electromagnetic waves that reflect when they meet an obstacle. Unlike cameras or LiDARs, RADAR relies on radio waves, which work in almost any weather condition and can even see underneath obstacles. It uses the "Doppler Effect" to measure the velocity of obstacles.
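The Doppler relation itself is compact: a target closing in at radial speed v shifts the frequency of the echo by f_D = 2v/λ, where λ is the carrier wavelength (the factor 2 comes from the round trip). A minimal sketch, assuming a 77 GHz carrier (the band most automotive RADARs use) and a convention where a positive closing speed means the target approaches:

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0


def wavelength_m(carrier_hz: float) -> float:
    """Carrier wavelength: lambda = c / f."""
    return SPEED_OF_LIGHT_MPS / carrier_hz


def doppler_shift_hz(closing_speed_mps: float, carrier_hz: float) -> float:
    """Echo frequency shift f_D = 2v / lambda (positive when the target approaches)."""
    return 2.0 * closing_speed_mps / wavelength_m(carrier_hz)


def closing_speed_from_shift(doppler_hz: float, carrier_hz: float) -> float:
    """Invert the Doppler relation: v = f_D * lambda / 2."""
    return doppler_hz * wavelength_m(carrier_hz) / 2.0


if __name__ == "__main__":
    carrier = 77e9  # assumed 77 GHz automotive RADAR
    shift = doppler_shift_hz(10.0, carrier)
    print(f"10 m/s closing speed -> {shift:.0f} Hz shift")
    print(f"back to speed: {closing_speed_from_shift(shift, carrier):.2f} m/s")
```

Note that this only captures the radial component of the velocity, along the line of sight: a car crossing perpendicular to the beam produces almost no shift.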

RADAR technology is very mature (>100 years old), and is used in many industries: aviation (where it is crucial for air traffic control), cars, missile detection, and even weather forecasting. However, most RADARs work in 2D. Haaaaa, yes, this is what we get: X and Y, but no Z, exactly like a one-channel LiDAR.

Should I show you the sample point cloud from a RADAR?

Output from a RADAR system

But let me show you the real output from a RADAR sensor:

I mean, can you tell where there is a vehicle? Whether we should stop or not? It's complete garbag... wait, if people use it, it's gotta be useful, right? And yes, it is, because while we only have a noisy 2D point cloud, each of these points also provides 1D velocity information. RADARs tell us whether the points are moving away from us or towards us, and how fast.
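Concretely, a classical 2D RADAR detection can be described by a range, an azimuth angle, and a radial velocity (range rate). Here is a minimal sketch of turning such detections into the "2D points with a 1D velocity" described above. The `RadarDetection` type, its field names, and the 0.5 m/s threshold are hypothetical, not any vendor's output format.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarDetection:
    range_m: float
    azimuth_deg: float
    radial_velocity_mps: float  # range rate: negative = coming towards us, positive = going away


def to_xy(det: RadarDetection) -> tuple[float, float]:
    """2D position only: a classical RADAR gives no elevation, so there is no z."""
    az = math.radians(det.azimuth_deg)
    return (det.range_m * math.cos(az), det.range_m * math.sin(az))


def motion_label(det: RadarDetection, threshold_mps: float = 0.5) -> str:
    """Direction of motion relative to the sensor, from the sign of the range rate."""
    if det.radial_velocity_mps < -threshold_mps:
        return "approaching"
    if det.radial_velocity_mps > threshold_mps:
        return "receding"
    return "no radial motion"


if __name__ == "__main__":
    detections = [
        RadarDetection(35.0, -4.0, -8.2),
        RadarDetection(12.5, 20.0, 0.1),
        RadarDetection(60.0, 2.0, 3.4),
    ]
    for d in detections:
        x, y = to_xy(d)
        print(f"x={x:6.2f} m  y={y:6.2f} m  {motion_label(d)} ({d.radial_velocity_mps:+.1f} m/s)")
```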

Using point cloud processing, Deep Learning (often trained on LiDAR data), or even RADAR/Camera fusion, we can get a result like this:

Notice how the yellow dot turns green as soon as the car moves, and how each static object is orange, while moving objects get a different color. This is because the RADAR is really good at measuring velocities.
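How can a RADAR tell static from moving objects when the car itself is driving? Its measurements are relative to the car, so even a parked car appears to "approach". A common idea, sketched below under simplifying assumptions (sensor at the vehicle origin facing forward, ego vehicle driving straight along x with no rotation), is to remove the range rate a static object would produce, and flag whatever is left as moving. The function names and the 0.5 m/s threshold are illustrative choices, not the method used in the demo above.

```python
import math


def expected_static_range_rate(ego_speed_mps: float, azimuth_deg: float) -> float:
    """Range rate a perfectly static object shows when the ego car drives straight ahead.

    Assumes the sensor sits at the vehicle origin, looks along +x, and the car does not rotate.
    """
    return -ego_speed_mps * math.cos(math.radians(azimuth_deg))


def is_moving(measured_range_rate_mps: float, azimuth_deg: float,
              ego_speed_mps: float, threshold_mps: float = 0.5) -> bool:
    """Flag a detection as moving if ego motion alone cannot explain its range rate."""
    residual = measured_range_rate_mps - expected_static_range_rate(ego_speed_mps, azimuth_deg)
    return abs(residual) > threshold_mps


if __name__ == "__main__":
    ego = 15.0  # ego vehicle speed in m/s
    # A parked car 10 degrees to the left "approaches" at ~14.8 m/s purely due to ego motion.
    print(is_moving(-15.0 * math.cos(math.radians(10.0)), 10.0, ego))  # False
    # A car straight ahead driving at 10 m/s in our direction closes in at only 5 m/s.
    print(is_moving(-5.0, 0.0, ego))  # True
```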

3 - LiDARs and RADARs are complementary and still need each other

As a little summary, I'd say that LiDARs are good, but most of the time they need cameras for context, and at high speed, they need RADARs. RADARs are great, but can NOT work as a standalone system. So let's do a quick overview:

If you want to be green everywhere, you need to combine all 3. Use the camera for scene understanding, use the RADAR for weather conditions and velocity measurement, and the LiDAR for distance estimation.

This brings a problem. A random startup must invest in 3 sensors, co-calibrate 3 sensors, and train its team on all these sensor types; and the more sensors we use, the more confusion we risk bringing. You may wonder... can't we use just one? Or two?

Let’s see how:

FMCW LiDARs & Imaging RADARs: The Future of Perception

Back in January 2023, I was at CES in Las Vegas for the first time. It was a big show, really incredible, and while walking around, I met a startup named Aeva. Aeva is a LiDAR startup specialized in 4D technology. "What's 4D?" I asked. It turns out, 4D meant that their LiDARs could directly estimate velocity.

The next day, I walked to a different area and stumbled across a Korean startup called bitsensing. "Bitsensing is creating a 4D Imaging RADAR," said the presenter. I was in shock. It was a normal RADAR, but providing incredible resolution, with Z-elevation, an accurate 3D view, almost no noise, and still the Doppler velocity measurement.

It sounded like these startups were working on fixing the weaknesses of classical technologies.

Let me introduce them to you.

1 - FMCW LiDAR (Frequency Modulated Continuous Wave LiDAR): 4D LiDAR

An FMCW LiDAR (or 4D LiDAR, or Doppler LiDAR) is a LiDAR that returns depth information, but can also directly measure the speed of an object. What happens behind the scenes is that they steal the RADAR Doppler technology and adapt it to a light sensor.

Here's what the startup Aurora is doing on LiDARs... notice how moving objects are colored while others aren't:

An FMCW LiDAR uses the Doppler Effect, similarly to RADAR technology, to get this 4D view. The main idea can be seen in this image, where we play with the frequency of the returned wave to measure the velocity.

The Doppler Effect is exactly this shift in frequency. It has now been adopted in FMCW LiDAR technology, only with light waves instead of radio waves. I highly recommend checking out my complete post called "Understanding the magnificent FMCW LiDAR".
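To make this concrete, here is a minimal sketch of one textbook FMCW scheme, the triangular chirp: the laser frequency sweeps up, then down. In each half, mixing the echo with the outgoing light gives a "beat" frequency. Range shifts both beats by the same amount, while the Doppler effect pushes them in opposite directions, so their sum gives range and their difference gives velocity. The 1550 nm wavelength and the chirp slope are assumed values for illustration, and real FMCW LiDARs may use other modulation schemes.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0
WAVELENGTH_M = 1550e-9              # assumed laser wavelength
CHIRP_SLOPE_HZ_PER_S = 1e9 / 10e-6  # assumed: 1 GHz frequency sweep over 10 microseconds


def simulate_beats(range_m, closing_speed_mps,
                   slope=CHIRP_SLOPE_HZ_PER_S, wavelength=WAVELENGTH_M):
    """Ideal (up-chirp, down-chirp) beat frequencies for a single target.

    f_range: round-trip delay (2R / c) times the chirp slope.
    f_doppler: 2v / wavelength, positive for an approaching target.
    """
    f_range = 2.0 * range_m * slope / SPEED_OF_LIGHT_MPS
    f_doppler = 2.0 * closing_speed_mps / wavelength
    return f_range - f_doppler, f_range + f_doppler


def range_and_velocity(f_up, f_down,
                       slope=CHIRP_SLOPE_HZ_PER_S, wavelength=WAVELENGTH_M):
    """Recover range and closing speed from one up/down beat-frequency pair."""
    f_range = (f_up + f_down) / 2.0    # the Doppler terms cancel in the sum
    f_doppler = (f_down - f_up) / 2.0  # the range terms cancel in the difference
    range_m = f_range * SPEED_OF_LIGHT_MPS / (2.0 * slope)
    closing_speed_mps = f_doppler * wavelength / 2.0
    return range_m, closing_speed_mps


if __name__ == "__main__":
    f_up, f_down = simulate_beats(range_m=50.0, closing_speed_mps=10.0)
    r, v = range_and_velocity(f_up, f_down)
    print(f"beats: {f_up / 1e6:.2f} MHz / {f_down / 1e6:.2f} MHz -> {r:.2f} m, {v:.2f} m/s")
```

The key point is that one up/down pair gives both range and velocity in a single measurement, with no need to wait for a second frame.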

2 - Imaging RADAR: 4D RADAR

In 2024, Mobileye, which had been working on its own FMCW LiDAR for years, announced it would shut down its entire FMCW LiDAR division to focus on its proprietary 4D Imaging RADAR. What happened? Why the shift? Well, let's first try to understand what Imaging RADARs are. I like to call these...

RADAR on steroids!

To better understand how it works, I'd like to show you the bitsensing demo they showed me at CES.

bitsensing Imaging RADAR Demo

The Imaging RADAR has incredible resolution. It provides a very accurate point cloud that can see through adverse weather conditions, enable obstacle detection AND measure velocity directly! Under the hood, it uses a set of MIMO (Multiple-Input Multiple-Output) antennas to get much better resolution, range, and precision. We could in fact detect obstacles inside a vehicle, and even distinguish children from adults.
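The MIMO trick can be illustrated with a bit of arithmetic. With N_tx transmit and N_rx receive antennas, each transmit/receive pair behaves like one element of a larger "virtual" array located at the sum of the two antenna positions, giving up to N_tx × N_rx virtual channels. For a uniform array with half-wavelength spacing, the angular resolution near boresight is roughly 2/N radians. A minimal sketch, with an antenna layout made up for illustration (positions in units of half a wavelength):

```python
import math


def virtual_array(tx_positions, rx_positions):
    """Virtual element positions of a MIMO array: every Tx/Rx pair contributes tx + rx."""
    return sorted({t + r for t in tx_positions for r in rx_positions})


def angular_resolution_deg(n_elements):
    """Approximate boresight resolution of a uniform half-wavelength array: 2/N radians."""
    return math.degrees(2.0 / n_elements)


if __name__ == "__main__":
    rx = [0, 1, 2, 3]  # 4 receivers, half a wavelength apart (hypothetical layout)
    tx = [0, 4, 8]     # 3 transmitters spaced so that the virtual copies do not overlap
    virtual = virtual_array(tx, rx)
    print(f"physical antennas: {len(tx) + len(rx)}, virtual channels: {len(virtual)}")
    print(f"4 Rx only:  ~{angular_resolution_deg(len(rx)):.1f} deg")
    print(f"MIMO array: ~{angular_resolution_deg(len(virtual)):.1f} deg")
```

With 7 physical antennas, this layout acts like a 12-element array, and the angular resolution improves from roughly 28.6° to roughly 9.5°. Spreading virtual elements vertically as well as horizontally is what lets an imaging RADAR resolve elevation and add the missing Z.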

See the demo:

Do you notice how similar it looks to the FMCW LiDAR? In both cases, we have:

- A 3D Point Cloud

- That can directly measure velocity

Other Examples from Self-Driving Cars

Frankly, many players in the autonomous driving industry are adopting Imaging RADARs. Mobileye has a great demo, and so does Waymo. Let's see these 2 examples.

Here's the Waymo Imaging RADAR Demo:

And now Mobileye:

See? We are in the middle of a transition... but why are people using Imaging RADARs over FMCW LiDARs? And are they really moving away from LiDARs? Let's find out in the final point...

LiDARs vs RADARs: The Modern Comparison

There are 2 ideas I'd like to talk about here:

- The Future of RADARs IS Imaging based

- The Future of LiDARs may NOT be FMCW based

1 - The Future of RADARs IS Imaging based

We have clearly seen how a good RADAR system can bring incredible benefits. We can now do tasks like object detection using only an Imaging RADAR. Recently, we've seen Deep Learning models, like the ones from Perciv AI, work directly on RADAR data (radar signals, radar point clouds, radar waves, ...).

Back in the day, any comparison between a LiDAR and a RADAR didn’t really make sense because the sensors were highly complementary. But today, these sensors can be in competition, and if there is a competition, Imaging RADARs are winning it! Here is the new comparison table:

We are BLUE almost everywhere, and the cost of Imaging RADAR stays lower than that of FMCW LiDARs. In addition, an Imaging RADAR can fit nicely under a bumper, since radio waves go through materials like a plastic bumper.

In remote sensing, RADAR has always been a great choice: whether it's synthetic aperture radar systems in the military field, environmental monitoring, or the recent adoption in autonomous vehicles, RADARs ARE a great default choice.

In the self-driving space, RADARs were never good enough to be used standalone. Has someone ever told you that you weren't good enough? Well, here is a lesson: we are seeing a massive adoption of Imaging RADARs, and I believe the future of RADARs is imaging.

2 - The Future of LiDARs may NOT be FMCW based

Now this is the incredible discovery here: nobody is abandoning classical LiDARs for FMCW LiDARs. Self-driving car companies have NOT adopted FMCW LiDAR technology en masse (for now), and I predict they'll just stick to solid-state.

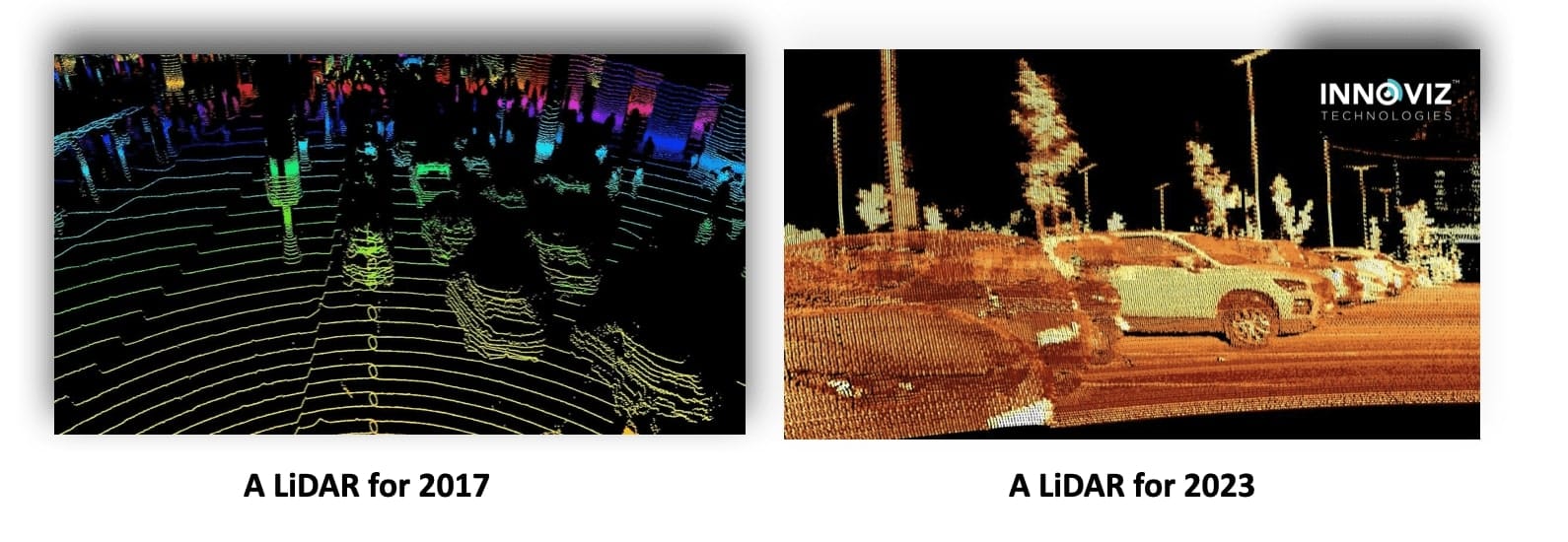

Back in 2023, I went to the Innoviz Technologies headquarters in Israel. Innoviz is a LiDAR manufacturer supplying LiDARs to companies like BMW. I asked them: "Why are you NOT building FMCW LiDARs?" Their answer was that their LiDARs were good enough, and that there was no real need for FMCW. It really surprised me, but I guess they know what they're talking about. They could address the drawbacks of LiDARs by building better LiDARs, for example here:

In many fields, LiDAR sensors are at the core. We have airborne LiDAR systems building elevation maps, HD Maps built largely from LiDARs, and even drones equipped with LiDARs today... this technology is here to stay. Plus, today, EVERYONE uses LiDARs! No, that's wrong: Tesla doesn't, and a few others have bet on vision-only... but the majority of startups do, and they use BETTER LiDARs. Not necessarily 4D, but LiDARs that provide better resolution, with more focus on solid-state technology.

This is the key message I have for you, and now that we've seen it, let's go through a summary, and see some next steps.

Summary & Next Steps

- LiDAR uses laser light to measure distances and create detailed 3D maps of objects and environments. Its key strength is distance estimation. Its key weaknesses are velocity estimation and bad weather conditions.

- RADAR emits radio waves and measures their reflections to detect objects and calculate their speed, even in bad weather. Its key strength is velocity estimation; its key weaknesses are noise, lack of context, and 3D estimation (most are only 2D).

- Traditional setups combine LiDAR, RADAR, and cameras because each sensor compensates for the others' weaknesses. It's nearly unthinkable to use any one of them as a standalone.

- Recently, technologies like 4D FMCW LiDAR and Imaging RADAR have emerged, offering both high resolution and direct velocity measurement. FMCW LiDARs use the Doppler effect, and Imaging RADARs use many more antennas.

- I believe the future of RADAR is imaging, while the future of LiDAR may be solid-state based, and not necessarily FMCW/4D based.