LiDAR Point Clouds: Intro to 3D Perception

When winter 2006 arrived, most of the people around me weren't apprehensive. My world wasn't really "Oh no, it's going to be cold!". I was 12 years old, and I was about to live the best years of my childhood, because that winter came the release of the Nintendo Wii!

The launch of the Nintendo Wii in 2006 revolutionized the gaming industry, bringing motion-controlled gaming to the masses. I must have played tennis for hundreds of hours, and bought nearly all the accessories for gaming, shooting, sports, fitness, etc.

However, it wasn't until the release of the Microsoft Kinect in 2010 that we saw the true potential of full-body, immersive interaction with games and other applications. The Kinect used an infrared projector and an infrared sensor to capture depth information, alongside an RGB camera for color. That depth information was then used to create a point cloud of the scene. This allowed the Kinect to track the movements of the player in real time, creating a more immersive and interactive gaming experience.

Point clouds are a type of data representation that captures the position of points in 3D space. They are commonly used in applications such as 3D modeling, virtual reality, and autonomous vehicles, and are generated using technologies such as LiDARs and RGB-D cameras.

In this article, we'll take a closer look at point clouds, what they are, how they are produced, and how they are used. We'll see which companies work on them, and we'll especially focus on the self-driving car industry.

So let's begin:

What are LiDAR Point Clouds?

A LiDAR Point Cloud is a set of points in 3D space, hence a cloud of points. In it, each point represents the 3D location of a spot on the surface of an object in the real world. These objects can be anything from buildings and trees to people and cars.
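To make the "set of points" idea concrete, here is a minimal sketch that stores a point cloud as a plain Python list of (x, y, z) tuples and computes two basic summaries. The coordinates are made up for illustration, and the helper names `centroid` and `bounding_box` are hypothetical, not taken from any library.

```python
# A point cloud stored as a plain list of (x, y, z) tuples, in meters.
# The coordinates below are invented for illustration only.
points = [
    (1.0, 2.0, 0.5),
    (1.5, 2.5, 0.0),
    (-0.5, 4.0, 1.5),
    (3.0, 0.0, 1.0),
]


def centroid(cloud):
    """Return the average position of all points."""
    n = len(cloud)
    return tuple(sum(p[i] for p in cloud) / n for i in range(3))


def bounding_box(cloud):
    """Return the (min corner, max corner) of the axis-aligned box around the cloud."""
    mins = tuple(min(p[i] for p in cloud) for i in range(3))
    maxs = tuple(max(p[i] for p in cloud) for i in range(3))
    return mins, maxs


print("centroid:", centroid(points))
print("bounding box:", bounding_box(points))
```

Real point clouds usually hold far more points, and often extra fields per point such as color or intensity, but the underlying structure is the same.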

The points can be produced using a variety of methods, including LiDARs, 3D reconstruction models, RGB-D cameras, and RADARs... but we'll get to that later!

Why do we need point clouds?

Take a self-driving car. If it's driving with just a camera, it's going to get 2D Images. But the real world is in 3D, and if we have the information that a car is at pixel (200, 399), it doesn't tell us anything about the car's whereabouts in the real 3D world!

When cars drive autonomously, many of them work by processing LiDAR point cloud data. And even those driven only with camera systems, such as Tesla cars, typically first lift the images into a 3D representation such as a point cloud, and then process it.

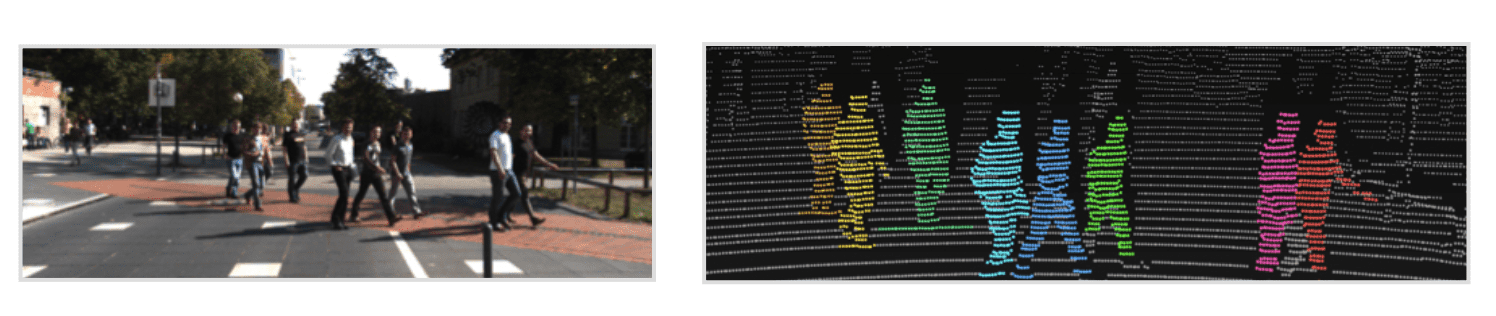

Today, Point Clouds are used in many industries, such as self-driving cars, robotics, drones, augmented reality, and even in the medical space. But you know a use of Point Clouds I didn't think about until recently? Surveillance!

Because Point Clouds are just a set of points, with no faces or license plates to read, they provide a strong level of anonymity. You don't have to make people sign a paper for their image rights, and you have far less to worry about when it comes to people's privacy...

With a Point Cloud, privacy is built-in!

So now let's see:

How to produce point cloud data?

There are 3 techniques I want to describe:

- Structured Light (RGB-D Cameras)

- Time Of Flight (LiDARs)

- 3D Reconstruction (Cameras)

Structured Light

We talked about the Kinect, which used an RGB-D camera working on the Structured Light Principle.

What happens is: the Kinect projects a known pattern of infrared light onto the scene, and then takes a picture of how that pattern lands on objects using an infrared camera. By looking at the way the pattern of light is distorted, the camera can figure out how far away things are. It then puts all this information together with the colors it sees to make a 3D picture: one final point cloud.

An example here:

LiDAR Point Cloud (Light Detection And Ranging)

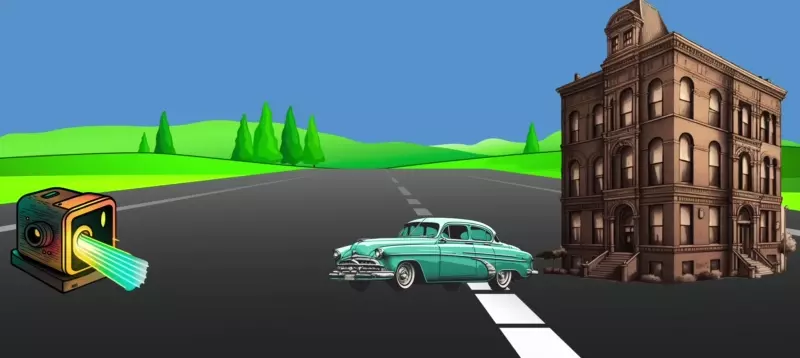

The most common and popular technique is to use a LiDAR (Light Detection And Ranging). A LiDAR can work on several different principles, but the most common one is the Time-Of-Flight principle.

In this setup, a laser scanner sends a light beam and measures the time it takes to reflect and come back to the receiver. Similar to this image:

It does this for millions of points, and therefore builds a set of 3D data points, each containing the values X, Y, and Z. Some LiDARs also go beyond just XYZ and include the velocity. You can learn more about it through my article on FMCW LiDARs, a type of LiDAR that also returns velocity information.
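The Time-Of-Flight principle boils down to a small calculation: the beam travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. Here is a minimal sketch of that arithmetic, plus the conversion from a range and two beam angles to an X, Y, Z point. The axis convention (x forward, y left, z up) and the function names are assumptions made for this example; real sensors document their own conventions.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def tof_distance(round_trip_seconds):
    """Distance to the target: the light covers the path twice, so divide by 2."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def to_xyz(distance, azimuth_deg, elevation_deg):
    """Convert a range plus beam angles into Cartesian coordinates.

    Assumed convention: x forward, y left, z up, angles in degrees.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = distance * math.cos(el) * math.cos(az)
    y = distance * math.cos(el) * math.sin(az)
    z = distance * math.sin(el)
    return x, y, z


# A hypothetical echo that comes back after 200 nanoseconds is about 30 m away.
d = tof_distance(200e-9)
print(f"distance: {d:.3f} m")
print("point:", to_xyz(d, azimuth_deg=15.0, elevation_deg=-2.0))
```

Repeating this for every laser pulse, at every beam angle, is what fills the point cloud.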

3D Reconstruction

Earlier, I said that even if you're not using a LiDAR, you end up working with point cloud data. A very common technique for this is 3D Reconstruction.

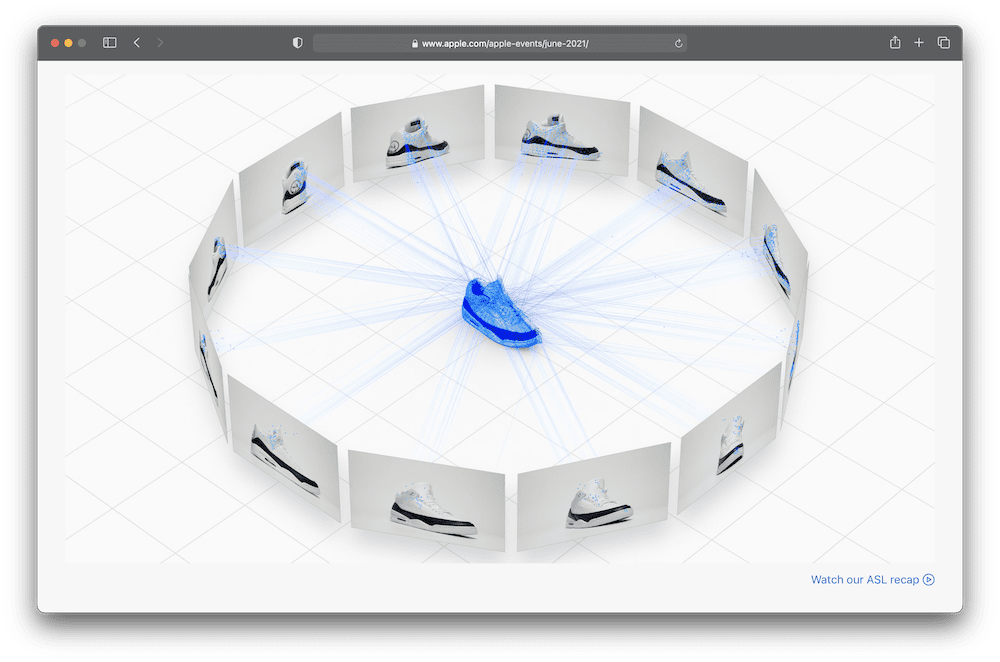

3D Reconstruction is when you convert 2D Images to 3D Point Clouds. So you essentially want to project your pixels into three-dimensional space. It can be done using 1 camera (rare), two cameras (stereo vision), or even an entire set of cameras!

For example, see Apple's Object Capture feature, displayed on their website:

Unlike with the LiDAR, where the Point Cloud is directly measured, this method involves AI or Computer Vision based techniques to recreate the Point Cloud. We call this field Photogrammetry, and you can learn more about it in my article on how to build Pseudo-LiDARs.
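For the stereo vision case, projecting a pixel into 3D can be sketched in two steps: estimate depth from the disparity between the two images, then back-project the pixel through a pinhole camera model. The snippet below is a simplified illustration, assuming rectified cameras and no lens distortion. The focal length, baseline, principal point, and disparity are hypothetical values chosen for the example; only the pixel (200, 399) comes from earlier in this article.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth from stereo: Z = f * B / d (rectified cameras assumed)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive to compute a depth")
    return focal_px * baseline_m / disparity_px


def backproject(u, v, depth, fx, fy, cx, cy):
    """Turn pixel (u, v) with a known depth into a 3D point.

    Camera frame assumed: x to the right, y down, z forward.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return x, y, depth


# Hypothetical camera: 700 px focal length, 0.5 m between the two cameras,
# principal point at the center of a 640x480 image.
depth = stereo_depth(focal_px=700.0, baseline_m=0.5, disparity_px=35.0)
point = backproject(200, 399, depth, fx=700.0, fy=700.0, cx=320.0, cy=240.0)
print("depth:", depth, "m")
print("3D point:", point)
```

Doing this for every pixel that has a valid disparity produces a camera-based point cloud. Learning-based methods replace the disparity step with a network's depth prediction, but the back-projection stays the same.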

Point Cloud Formats & Files

Whichever of the 3 techniques you use, the resulting file contains one record per point, and each record holds that point's information.

But there are multiple formats to choose from. When exporting, the main choice is whether to encode the information as an ASCII (human-readable text) file or as a Binary file.

An example here with two files containing LiDAR measurements in .PLY (ascii) vs .PCD (binary) extensions:
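To make the ASCII versus binary difference concrete, here is a minimal sketch that writes three made-up points as an ASCII PLY document, and separately packs the same points as raw little-endian 32-bit floats. The binary part is only a point payload, not a complete PCD or PLY file (those also need a header), and all function names are hypothetical.

```python
import struct

# Invented points for illustration.
points = [(1.0, 2.0, 0.5), (1.5, 2.5, 0.0), (-0.5, 4.0, 1.5)]


def to_ascii_ply(cloud):
    """Serialize points as an ASCII PLY document: a header, then one line per point."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(cloud)}",
        "property float x",
        "property float y",
        "property float z",
        "end_header",
    ]
    body = [f"{x} {y} {z}" for x, y, z in cloud]
    return "\n".join(header + body) + "\n"


def from_ascii_ply(text):
    """Parse the point lines that follow the header (x, y, z only)."""
    lines = text.splitlines()
    start = lines.index("end_header") + 1
    return [tuple(float(v) for v in line.split()) for line in lines[start:] if line.strip()]


def to_binary_payload(cloud):
    """Pack each point as three little-endian float32 values (12 bytes per point)."""
    return b"".join(struct.pack("<fff", *p) for p in cloud)


def from_binary_payload(data):
    """Unpack a payload produced by to_binary_payload."""
    return [struct.unpack_from("<fff", data, i) for i in range(0, len(data), 12)]


ascii_text = to_ascii_ply(points)
binary_data = to_binary_payload(points)
print(ascii_text)
print("ASCII size:", len(ascii_text.encode("ascii")), "bytes")
print("binary payload size:", len(binary_data), "bytes")
```

ASCII files can be opened and checked in any text editor, while binary files are usually smaller and faster to load, which matters once a file holds millions of points.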

Point Cloud Processing: How to process raw point cloud data

Point clouds can be processed using a variety of techniques, either with dedicated software or with code.

For coding, we usually rely on libraries. They provide a range of algorithms for point cloud processing: registration, filtering, downsampling, clustering, and even surface feature extraction. You can pick your poison between many of them. The most popular is called PCL (Point Cloud Library); my personal favourite is Open3D. Open3D offers both Python and C++ APIs, while PCL is primarily a C++ library with community-maintained Python bindings.
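As an illustration of what one of these algorithms does under the hood, here is a hand-rolled sketch of voxel downsampling in plain Python: space is cut into cubes of a given size, and all the points that fall into the same cube are replaced by their average. This is not the code from PCL or Open3D (both ship their own optimized versions), and the function name and sample points are hypothetical.

```python
import math
from collections import defaultdict


def voxel_downsample(cloud, voxel_size):
    """Replace all points that share a voxel with their centroid."""
    buckets = defaultdict(list)
    for p in cloud:
        # Integer index of the cube that contains this point.
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    return [
        tuple(sum(q[i] for q in pts) / len(pts) for i in range(3))
        for pts in buckets.values()
    ]


# Invented points: the first two share a 1 m voxel, and so do the last two.
cloud = [
    (0.25, 0.25, 0.25),
    (0.75, 0.75, 0.75),
    (1.25, 0.0, 0.0),
    (1.75, 0.5, 0.0),
]
reduced = voxel_downsample(cloud, voxel_size=1.0)
print(len(cloud), "points ->", len(reduced), "points")
print(reduced)
```

A larger voxel size removes more points, trading detail for speed in whatever processing comes next.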

Self-Driving Car uses: 3D Object Classification, Detection & Segmentation

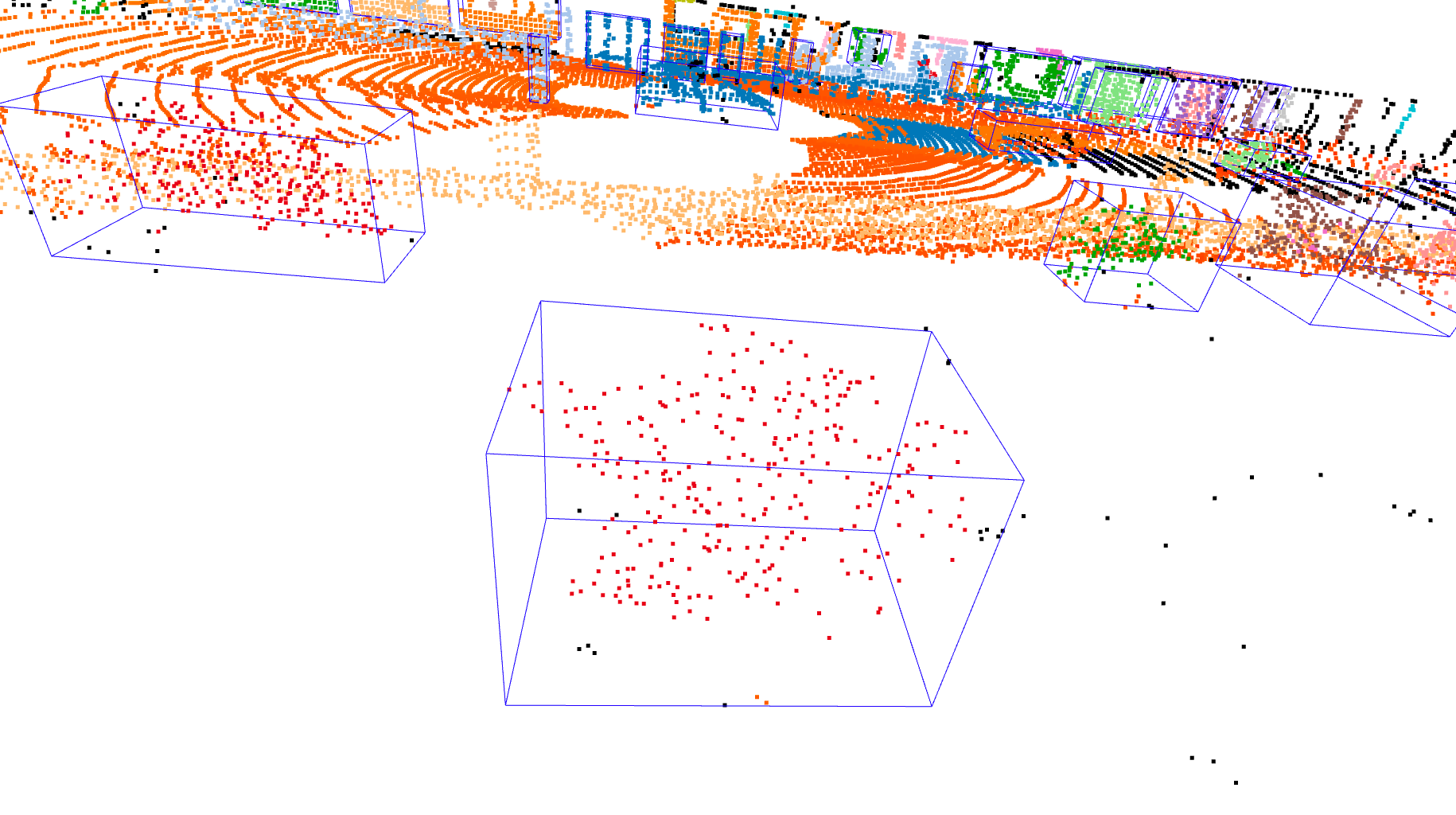

In self-driving cars, all the classical applications such as point cloud classification, 3D object detection, segmentation, etc. apply.

You send a Point Cloud to a Neural Network, and it returns 3D Bounding Boxes with the objects' positions, classes, and orientations. It can also segment the different planes, the drivable road, etc. Algorithms for this include PointPillars, PointRCNN, and more...

You can also use more traditional approaches such as unsupervised machine learning. I teach many of them in my Point Clouds Fast Course.
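One example of such a traditional, unsupervised approach is Euclidean clustering: points that sit close to each other are grouped into the same object, with no training involved. The sketch below is a brute-force version written for readability. Libraries typically use a KD-tree to find neighbors quickly. The function name, the radius, and the sample points are assumptions made for this example.

```python
import math


def euclidean_clusters(cloud, radius, min_points=1):
    """Group point indices so that each point is within `radius` of another point in its group."""
    unvisited = set(range(len(cloud)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        cluster = [seed]
        frontier = [seed]
        while frontier:
            i = frontier.pop()
            # Brute-force neighbor search among the points not yet assigned.
            neighbors = [j for j in unvisited if math.dist(cloud[i], cloud[j]) <= radius]
            for j in neighbors:
                unvisited.remove(j)
            cluster.extend(neighbors)
            frontier.extend(neighbors)
        if len(cluster) >= min_points:
            clusters.append(sorted(cluster))
    return sorted(clusters)


# Invented scene: three points near the origin, two points about 7 m away.
cloud = [
    (0.0, 0.0, 0.0),
    (0.3, 0.0, 0.0),
    (0.6, 0.0, 0.0),
    (5.0, 5.0, 0.0),
    (5.2, 5.0, 0.0),
]
clusters = euclidean_clusters(cloud, radius=0.5)
print(clusters)
```

In a driving scene, you would usually remove the ground points first, so that the road does not connect every object into one giant cluster.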

Example here:

If you want a more advanced introduction to Point Clouds processing, I recommend my article "How LiDAR Object Detection Works". Typically, what we want to do involves:

Object Classification

For direct classification, you send a Point Cloud to a Neural Network, and it returns the class of the object. For that, the backbone model is typically a PointNet or a VoxelNet, trained on a point cloud dataset. I teach how to build and train one from scratch in my course Deep Point Clouds.
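One idea behind PointNet-style models is worth seeing in code: a point cloud has no natural order, so the network applies the same small function to every point and then merges the results with a max, which gives the same answer whatever the order of the points. The sketch below is a toy illustration of that single idea, not a trained or complete network. The weights are hand-picked, hypothetical values.

```python
import random

# Hypothetical, hand-picked weights: each row maps (x, y, z) to one feature.
WEIGHTS = [
    (1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0),
    (0.0, 0.0, 1.0),
    (0.5, -0.5, 0.25),
]


def point_features(p):
    """Shared per-point layer: a linear map followed by a ReLU."""
    return [max(0.0, sum(w * c for w, c in zip(row, p))) for row in WEIGHTS]


def global_feature(cloud):
    """Max-pool each feature over all points: the order of points cannot matter."""
    per_point = [point_features(p) for p in cloud]
    return [max(column) for column in zip(*per_point)]


cloud = [(1.0, 2.0, 0.5), (1.5, 2.5, 0.0), (-0.5, 4.0, 1.5), (3.0, 0.0, 1.0)]
shuffled = cloud[:]
random.Random(0).shuffle(shuffled)

print(global_feature(cloud))
print(global_feature(shuffled) == global_feature(cloud))
```

In a real classifier, the per-point function is a learned multi-layer network, and the pooled vector is passed to further layers that output the class scores.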

LiDAR Tracking, SLAM, and more...

Another technique we can talk about is SLAM (Simultaneous Localization And Mapping). With SLAM, you use the LiDAR to build a 3D map of the world while locating yourself in it. In many cases, you also compare the scanned environment to an existing model in memory, which involves point cloud alignment and other techniques specific to point clouds.
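Point cloud alignment means finding the rotation and translation that best superimpose one scan onto another. Here is a deliberately simplified sketch: it works in 2D, and it assumes we already know which point in the scan matches which point in the reference. Algorithms such as ICP have to estimate those matches too, typically by alternating between a nearest-neighbor search and an alignment step like this one. All names and values are hypothetical.

```python
import math


def transform_2d(points, theta, translation):
    """Rotate points by theta (radians), then translate them."""
    c, s = math.cos(theta), math.sin(theta)
    tx, ty = translation
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def align_2d(source, target):
    """Least-squares rotation and translation mapping source onto target.

    Assumes source[i] and target[i] are the same physical point.
    """
    n = len(source)
    scx = sum(x for x, _ in source) / n
    scy = sum(y for _, y in source) / n
    tcx = sum(x for x, _ in target) / n
    tcy = sum(y for _, y in target) / n
    cross = dot = 0.0
    for (ax, ay), (bx, by) in zip(source, target):
        # Work relative to each cloud's centroid so only the rotation remains.
        ax, ay, bx, by = ax - scx, ay - scy, bx - tcx, by - tcy
        cross += ax * by - ay * bx
        dot += ax * bx + ay * by
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    translation = (tcx - (c * scx - s * scy), tcy - (s * scx + c * scy))
    return theta, translation


# Invented scan, and a reference that is the same scan rotated by 30 degrees and shifted.
scan = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (2.0, 1.0)]
reference = transform_2d(scan, math.radians(30.0), (2.0, -1.0))

theta, translation = align_2d(scan, reference)
aligned = transform_2d(scan, theta, translation)
print("rotation (deg):", math.degrees(theta))
print("translation:", translation)
```

The recovered motion between two scans is also an estimate of how the vehicle moved, which is why alignment sits at the heart of LiDAR-based localization.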

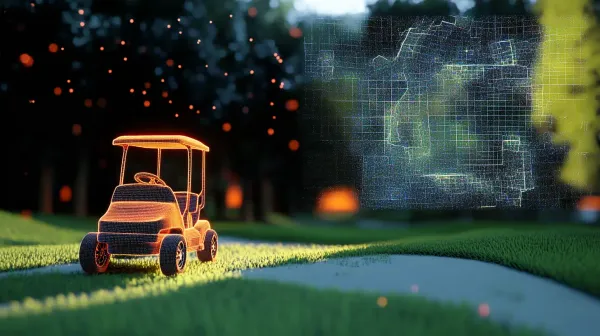

Here's an example:

Non Self-Driving Car Fields

Of course, it doesn't stop there. Point Clouds are used in the movie industry, in CAD (computer aided design), in the video game industry, in 3D modeling, in environmental monitoring...

They're used by Google in Street View, in the construction field, in the architecture industry, in quality inspection, in augmented reality, and many more.

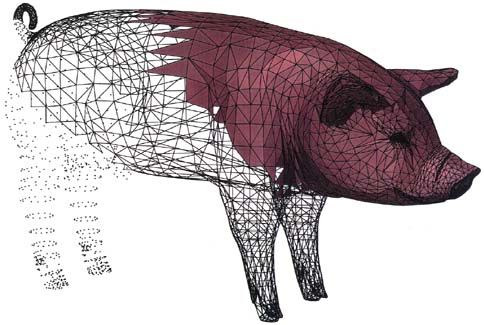

Something super basic we could talk about is Surface Reconstruction. It's the idea of creating a continuous 3D surface from an unordered set of points (or other 3D data). This surface can then be used for visualization, analysis, or further modeling.

A Point Cloud can also be converted to meshes, voxels, or any other type of 3D data, and from there we can do many other things!

Point Clouds Today

If you have any project related to 3D, you'll want to work with Point Clouds. As an AI or Robotics engineer, you can't just stick to images.

The real world is in 3D, and understanding how to work with 3D Data can make you a much rarer profile. Many AI Engineers can process an image with OpenCV, but very few know how to process a point cloud file.

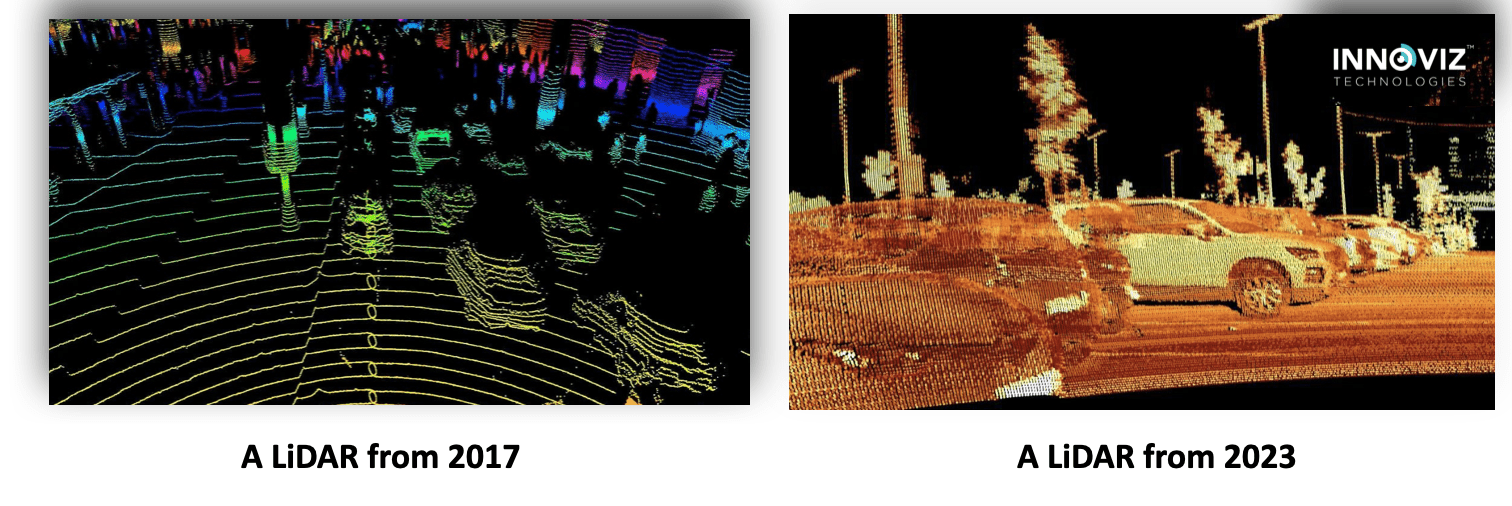

With that, there are new challenges coming in Point Cloud processing. For example, LiDARs are becoming more and more capable these days, and companies such as Innoviz, AEye, Aeva, or Velodyne are serious players.

But they also push the boundaries, and make LiDARs that produce millions of points per second, which involves many more points to process.

This good news means engineers will also need to learn how to compress Point Cloud data, how to build satellite architectures, and how to make their entire system more efficient and ready for millions of points!
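To get a feel for the numbers, here is a back-of-the-envelope sketch: it estimates the raw data rate of a sensor, then shows one simple (lossy) compression trick, storing each coordinate as a 16-bit integer number of centimeters instead of a 32-bit float. The point rate, the resolution, and the function names are assumptions chosen for the example, not the specs of any particular LiDAR.

```python
import struct


def raw_bandwidth_mb_per_s(points_per_second, bytes_per_point):
    """Data rate in megabytes per second, before any compression."""
    return points_per_second * bytes_per_point / 1e6


def quantize(cloud, resolution_m=0.01):
    """Store each coordinate as a signed 16-bit count of `resolution_m` steps.

    At 1 cm resolution this covers about +/-327 m. Coordinates outside that
    range make struct.pack raise an error instead of silently wrapping.
    """
    out = bytearray()
    for p in cloud:
        out += struct.pack("<hhh", *(round(c / resolution_m) for c in p))
    return bytes(out)


def dequantize(data, resolution_m=0.01):
    """Recover approximate coordinates from a quantized payload."""
    return [
        tuple(v * resolution_m for v in struct.unpack_from("<hhh", data, i))
        for i in range(0, len(data), 6)
    ]


# Hypothetical sensor: 2 million points per second, x/y/z as float32 (12 bytes).
print("float32:", raw_bandwidth_mb_per_s(2_000_000, 12), "MB/s")
print("int16:  ", raw_bandwidth_mb_per_s(2_000_000, 6), "MB/s")

cloud = [(12.34, -3.2, 1.07), (100.005, 45.678, -1.234)]
restored = dequantize(quantize(cloud))
worst = max(abs(a - b) for p, q in zip(cloud, restored) for a, b in zip(p, q))
print("worst rounding error:", worst, "m")
```

Halving the size costs at most about half a centimeter of precision per coordinate here. Production codecs go much further, but the trade-off between size and accuracy is the same.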

Conclusion & Summary

Whether or not you had heard about Point Clouds before, you now know a bit more about them.

- Sensors such as RGB-D cameras or laser scanners produce raw data: a point cloud. From there, there is sometimes a manual correction in place, but most of the time, you can process your point clouds as they are. Techniques such as 3D Reconstruction are also useful when you only have cameras.

- To process a point cloud, you can either go geek mode and use libraries such as Open3D or PCL, or use point cloud processing software.

- Point clouds are great because (1) they give us the 3D information directly and (2) they provide built-in privacy.

- In self-driving cars, there are several applications, such as object detection, classification, segmentation, SLAM, and more...

- In other fields, mesh creation, surface reconstruction, augmented reality, video games, and other applications exist.