4D LiDARs vs 4D RADARs: Why the LiDAR vs RADAR comparison is more relevant today than ever

A few years ago, I was freelancing for a company that asked me if I could help them decide on the sensor suite for their last mile delivery shuttle. Their question? Camera vs LiDAR vs RADAR, which set of sensors to pick?

At the time (2020), what made the most sense was to combine all 3. "These sensors are complementary" I would reply — "The LiDAR is the most accurate sensor to detect a distance, the camera is mandatory for scene understanding, and the RADAR can see through objects and allows for direct velocity measurement".

In most articles, courses, or content related to autonomous tech sensors, the same answer has been given: Use all 3 sensors! Except for Elon Musk, who really doesn't want anything other than cameras in a Tesla, the answer has been universally approved.

But the world has changed. In the past few years, these sensors have evolved. If the LiDAR used to be weak in velocity estimation, and if the RADAR used to be very noisy, it's no longer the case. This is mainly due to 2 emerging technologies: the "FMCW LiDAR" or "4D LiDAR", and the "Imaging RADAR" or "4D RADAR".

In this article, I want to describe the evolution of LiDAR and RADAR systems, and share with you some of the implications for the autonomous driving field.

Let's begin with the LiDAR:

Understanding LiDAR and RADAR sensors (pre-2020)

I have to say that nothing crazy happened in 2020 (at least on this topic), but it’s a new decade that saw many new 4D sensors... So let’s use it as a reference.

The LiDAR pre-2020: What is a LiDAR?

LiDAR (Light Detection and Ranging) is a technology that leverages laser light to measure distances and create detailed 3D maps of objects and environments. LiDAR systems operate by emitting laser pulses and calculating the time it takes for the light to return after hitting an object.

LiDAR is widely used in various applications, including autonomous vehicles, where it helps in object detection and navigation, as well as in surveying and mapping, where it provides accurate topographical data. The technology’s ability to deliver precise distance measurements and detailed environmental mapping makes it indispensable in modern sensing technology.

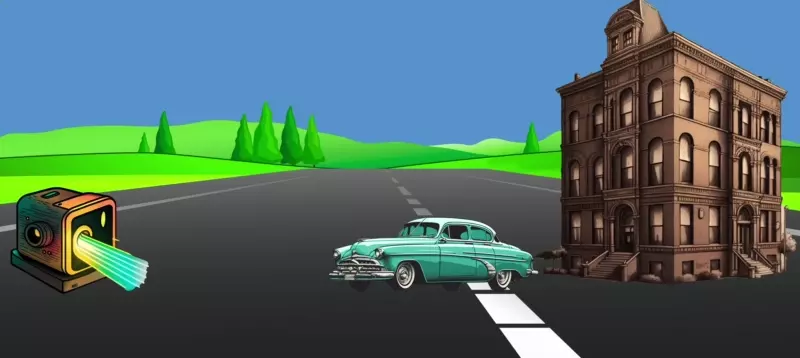

In the 2010s, when we talked about LiDARs, what people meant was ToF LiDAR systems (Time of Flight LiDAR). These are sensors that send a laser pulse into the environment and measure the time it takes for the pulse to come back. From that round-trip time and the speed of light, we get the distance to any object: this is the “time of flight” principle.
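The time-of-flight principle boils down to one line of arithmetic: distance equals the speed of light times the round-trip time, divided by 2. Here is a minimal Python sketch of it. The function name and the 200 ns example value are illustrative assumptions, not taken from any specific sensor.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, in m/s


def tof_distance_m(round_trip_time_s: float) -> float:
    """Return the target distance in meters for a measured round-trip time.

    The pulse travels to the object and back, hence the division by 2.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


# Illustrative value: a pulse that comes back after 200 nanoseconds.
distance = tof_distance_m(200e-9)
print(f"{distance:.2f} m")
```

A 200 ns round trip corresponds to an object roughly 30 meters away, which gives a feel for the timing precision these sensors need.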

Here is an example:

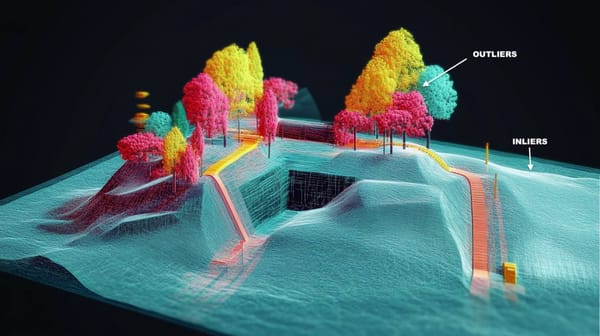

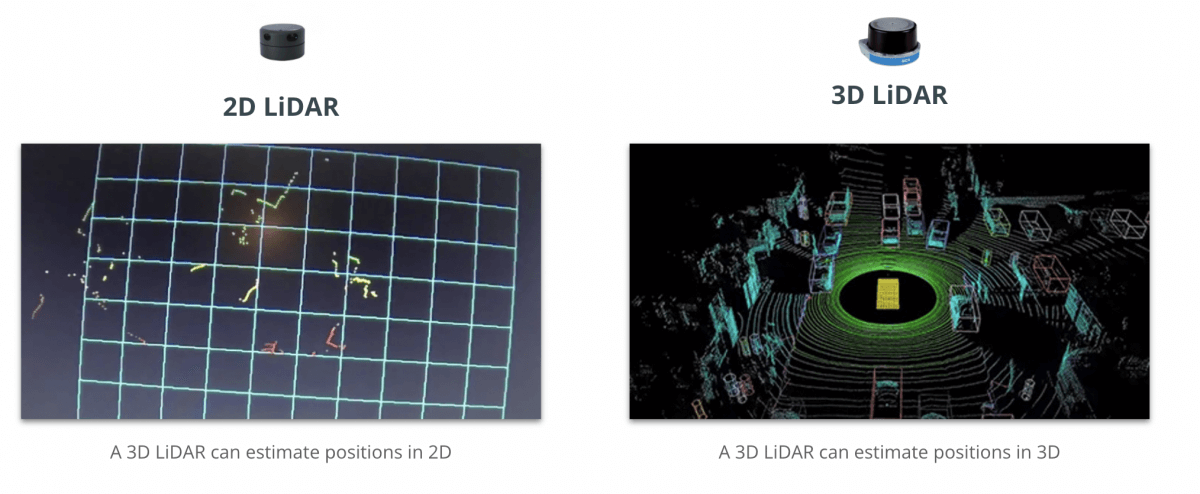

Depending on the number of vertical layers your LiDAR has, you can have a 2D or a 3D LiDAR, which creates a point cloud of the environment. Here is a comparison below:

The advantage of these sensors is the accuracy of the measurements: we get laser-like accuracy. Many Computer Vision systems are indeed trained using ToF LiDAR labels. But the drawback is that if you want to measure a velocity, you need to compute the difference between the positions measured at 2 consecutive timestamps.
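To make this drawback concrete, here is a minimal sketch of the "difference between 2 consecutive timestamps" approach. It assumes the same object has already been matched across both frames (which is a hard problem in itself), and the function name and example values are hypothetical.

```python
from typing import Tuple

Point3D = Tuple[float, float, float]


def velocity_from_two_frames(p_prev: Point3D, p_curr: Point3D, dt_s: float) -> Point3D:
    """Finite-difference velocity (m/s) of one object seen in two consecutive frames.

    Assumption: p_prev and p_curr are positions of the SAME object,
    already associated across frames by some tracking step.
    """
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    return tuple((curr - prev) / dt_s for prev, curr in zip(p_prev, p_curr))


# Illustrative values: an object centroid moves 0.5 m along x between
# two scans of a LiDAR spinning at 10 Hz (0.1 s between frames).
vx, vy, vz = velocity_from_two_frames((10.0, 0.0, 0.0), (10.5, 0.0, 0.0), 0.1)
print(f"vx = {vx:.1f} m/s")
```

Notice that the estimate is only available after the second frame, and that any noise in the two positions is divided by a small time step, so it gets amplified.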

Now, the RADAR:

The RADAR pre 2020: Radio Waves in Action

Let's begin with the basics:

RADAR stands for Radio Detection And Ranging. It works by emitting electromagnetic (EM) waves that reflect when they meet an obstacle. And unlike cameras or LiDARs, it can work under any weather condition, and even see underneath obstacles.

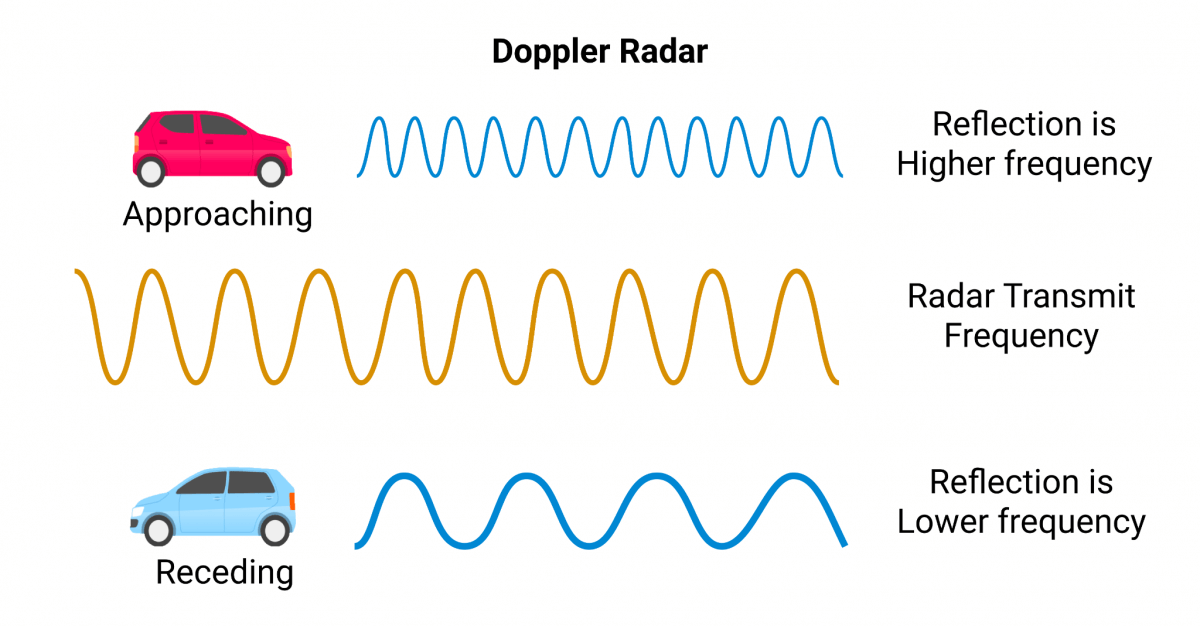

Thanks to the Doppler Effect, we can measure for every obstacle:

- The XYZ position (sort of)

- The Velocity

Which is already good, right? We have the 3D Position, plus the speed, so we have a nice 4D Output.
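The velocity part can be sketched in a few lines. The relation used here is the standard two-way Doppler formula, v = f_d × λ / 2. The 77 GHz carrier is a common automotive RADAR band and is an assumption for the example, as are the function names and the sign convention.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, in m/s


def expected_doppler_shift_hz(radial_velocity_m_s: float, carrier_freq_hz: float) -> float:
    """Doppler shift produced by a target moving at the given radial velocity."""
    return 2.0 * radial_velocity_m_s * carrier_freq_hz / SPEED_OF_LIGHT_M_S


def doppler_radial_velocity_m_s(doppler_shift_hz: float, carrier_freq_hz: float) -> float:
    """Radial velocity from a measured Doppler shift: v = f_d * wavelength / 2.

    Sign convention (assumption): a positive shift means the target is approaching.
    The factor 2 comes from the round trip of the reflected wave.
    """
    if carrier_freq_hz <= 0:
        raise ValueError("carrier frequency must be positive")
    wavelength_m = SPEED_OF_LIGHT_M_S / carrier_freq_hz
    return doppler_shift_hz * wavelength_m / 2.0


# Illustrative round trip with an assumed 77 GHz carrier and a 10 m/s target.
shift = expected_doppler_shift_hz(10.0, 77e9)
print(f"shift = {shift:.0f} Hz, velocity = {doppler_radial_velocity_m_s(shift, 77e9):.2f} m/s")
```

Keep in mind that this only gives the radial component of the velocity, meaning the part of the motion directed toward or away from the sensor.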

This technology is very mature (>100 years old), and is used in various industries: aviation (where it is crucial for air traffic control), cars, missile detection, and even weather forecasting. RADAR’s ability to function effectively in diverse weather conditions and its proficiency in detecting objects at long ranges make it a reliable and versatile sensing technology.

Output from a RADAR system

But let me show you the real output from a RADAR sensor:

Pretty bad, right?

First, it's written 3D, but it's really a 2D output. We don't have an accurate height of each point. The only reason it's called 3D is because the third dimension is the velocity of obstacles, directly estimated through the Doppler effect.
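To picture what such a "3D" point looks like in code, here is a small sketch of a detection made of a range, a horizontal angle, and a Doppler velocity, converted to a flat (x, y) position. The class and field names are hypothetical and do not match any particular RADAR driver.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class RadarDetection:
    """One detection from a classic automotive RADAR (hypothetical layout)."""
    range_m: float              # distance to the target
    azimuth_rad: float          # horizontal angle, 0 = straight ahead
    radial_velocity_m_s: float  # measured directly through the Doppler effect


def to_xy_velocity(det: RadarDetection) -> Tuple[float, float, float]:
    """Convert a detection to (x, y, radial velocity). There is no reliable height."""
    x = det.range_m * math.cos(det.azimuth_rad)
    y = det.range_m * math.sin(det.azimuth_rad)
    return x, y, det.radial_velocity_m_s


# Illustrative detection: 10 m straight ahead, moving away at 3 m/s
# (negative velocity = moving away, an assumed convention).
print(to_xy_velocity(RadarDetection(10.0, 0.0, -3.0)))
```

The two spatial values plus the velocity are what make it "3D", even though there is no z coordinate anywhere.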

Of course, we can work on these "point clouds", apply Deep Learning (algorithms very similar to what I teach in my Deep Point Clouds course), and after a RADAR/Camera Fusion, we can even get a result like this:

Notice how the yellow dot changes to a green color as soon as the car moves, and how each static object is orange, while moving objects have a color. This is because the RADAR is really good at measuring velocities.

Summary: LiDAR vs RADAR Pre-2020

If we summarize, we can see this output:

Notice how the camera has lots of advantages related to scene understanding, RADAR has lots of strengths in its maturity, weather conditions, and velocity measurement, and the LiDAR has one key strength: the distance estimation.

Now the problem is:

How do we avoid using all 3 sensors? In self-driving cars for example, the more sensors we use, the more expensive the car will be. What Elon Musk and Tesla did when removing the RADAR, and staying without a LiDAR, is the best economic decision for them to sell cars directly to consumers.

Today, we have access to new LiDAR and RADAR systems: the 4D LiDAR and the 4D RADAR. These both return a great depth estimation, a clean point cloud, and directly measure the velocity. Integrating lidar data with advanced processing techniques enhances the efficiency and accuracy of workflows, benefiting areas such as urban planning and disaster response.

Let's see how...

The new RADAR and LiDAR sensors of self-driving cars

I mainly want to talk about 2 technologies. They aren't "new" technically speaking, but they're new in their use and in their adoption by the market: the FMCW LiDAR and the Imaging RADAR.

You'll see that if a LiDAR vs RADAR comparison didn't make much sense before (because they were complementary), it now makes a lot of sense because the two can compete.

First, the 4D LiDAR:

Introducing the FMCW LiDAR (Frequency Modulated Continuous Wave LiDAR) or 4D LiDAR

An FMCW LiDAR (or 4D LiDAR, or Doppler LiDAR) is a LiDAR that can return the depth information, but also directly measure the speed of an object. What happens behind the scenes is that they steal the RADAR Doppler technology and adapt it to a light sensor.

Here's what the startup Aurora is doing on LiDARs... notice how moving objects are colored while others aren't:

To generate this, the LiDAR uses the Doppler Effect. If you're interested in learning more about how FMCW LiDAR sensors use the Doppler effect, I have a post detailing the process here.

The main idea can be seen in this image, where we play with the frequency of the returned wave to measure the velocity.

The Doppler Effect is exactly about measuring this frequency shift. And this has now been adopted in FMCW LiDAR systems, but with light waves instead of radio waves.

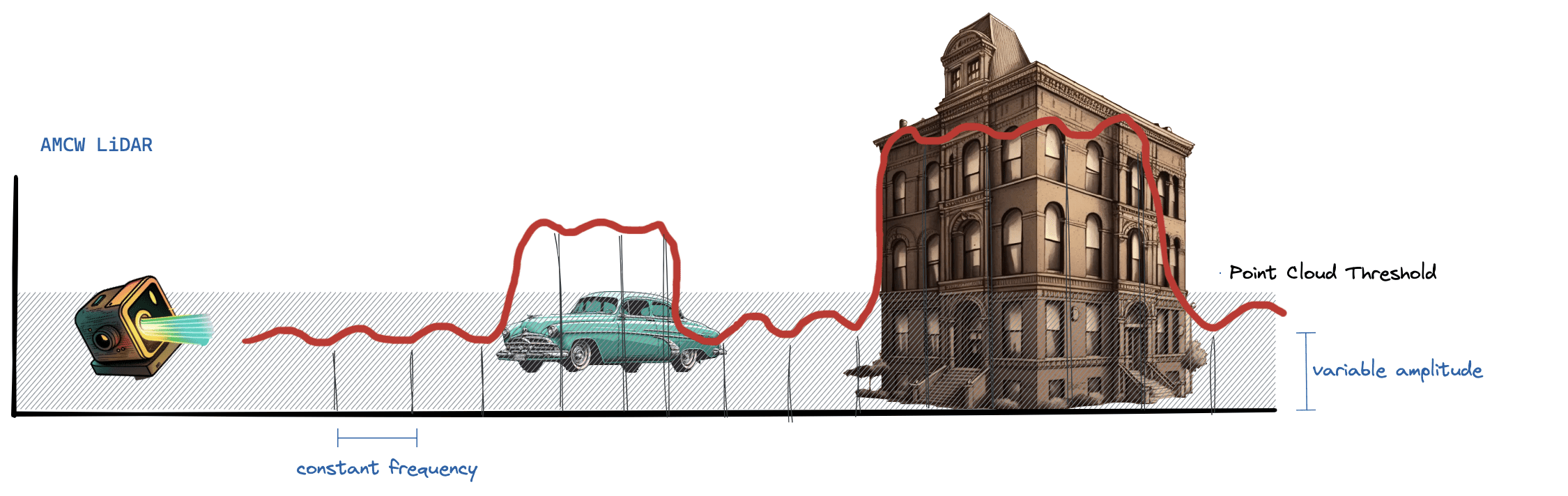
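Here is a hedged sketch of how one FMCW measurement can yield both range and velocity. It assumes a triangular chirp (frequency ramps up, then down), which is a common textbook scheme and not necessarily what any given vendor implements. The 1550 nm wavelength, the chirp slope, and all function names are assumptions for illustration.

```python
from typing import Tuple

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, in m/s


def beat_frequencies_hz(range_m: float, radial_velocity_m_s: float,
                        chirp_slope_hz_per_s: float, wavelength_m: float) -> Tuple[float, float]:
    """Forward model: beat frequencies on the up-chirp and on the down-chirp.

    Convention (assumption): positive velocity means the target is approaching.
    """
    range_term = 2.0 * range_m * chirp_slope_hz_per_s / SPEED_OF_LIGHT_M_S
    doppler_term = 2.0 * radial_velocity_m_s / wavelength_m
    return range_term - doppler_term, range_term + doppler_term


def range_and_velocity(f_up_hz: float, f_down_hz: float,
                       chirp_slope_hz_per_s: float, wavelength_m: float) -> Tuple[float, float]:
    """Inverse model: the sum of the beats gives range, their difference gives velocity.

    Valid while the range term is larger than the Doppler term in magnitude.
    """
    range_term = (f_up_hz + f_down_hz) / 2.0
    doppler_term = (f_down_hz - f_up_hz) / 2.0
    range_m = SPEED_OF_LIGHT_M_S * range_term / (2.0 * chirp_slope_hz_per_s)
    velocity_m_s = doppler_term * wavelength_m / 2.0
    return range_m, velocity_m_s


# Illustrative setup: 1 GHz of bandwidth swept in 10 microseconds, 1550 nm laser.
slope = 1e9 / 10e-6
f_up, f_down = beat_frequencies_hz(50.0, 10.0, slope, 1550e-9)
print(range_and_velocity(f_up, f_down, slope, 1550e-9))
```

The point of the two ramps is that range and Doppler shift push the beat frequency in the same direction on one ramp and in opposite directions on the other, so the two unknowns can be separated from a single point measurement.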

The AMCW LiDAR: Another type of LiDAR

The FMCW LiDAR isn't the only one that is "new". LiDARs can also do AMCW (Amplitude Modulated Continuous Wave) modulation.

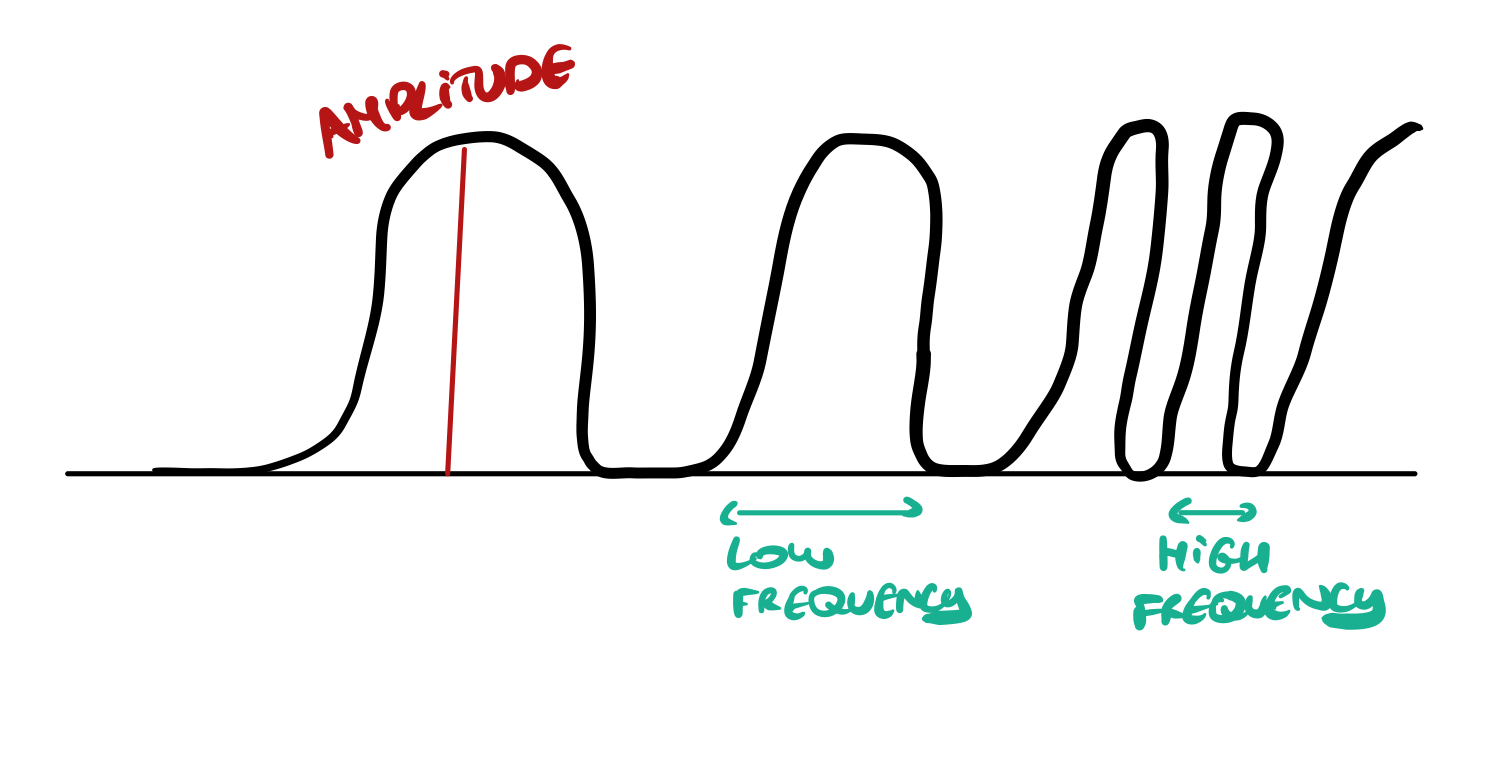

The difference is that FMCW LiDARs measure the frequency of the waves, while AMCW LiDARs measure the amplitude of the waves. As a reminder, the frequency is tied to the wavelength, while the amplitude is the "height" of the wave.

Here is a terrible (but clear) drawing to explain it:

In a traditional LiDAR, we don't look at the frequency: we send laser beams, measure the amplitude of the returned waves, and based on that amplitude, we consider it a point or not:

To sum up: A Time of Flight LiDAR does time-of-flight measurement, an FMCW LiDAR does Frequency Modulation and an AMCW LiDAR does Amplitude Modulation.
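For the AMCW case, one common way to turn amplitude modulation into a distance is to compare the phase of the received modulation envelope with the emitted one. The sketch below uses that phase-shift relation, d = c × Δφ / (4π × f_mod). It is an assumption about how an AMCW sensor could compute range, not a description of a specific product, and the function names and the 10 MHz modulation frequency are illustrative.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0  # speed of light in vacuum, in m/s


def unambiguous_range_m(modulation_freq_hz: float) -> float:
    """Beyond this distance the phase wraps around and ranges become ambiguous."""
    return SPEED_OF_LIGHT_M_S / (2.0 * modulation_freq_hz)


def amcw_distance_m(phase_shift_rad: float, modulation_freq_hz: float) -> float:
    """Distance from the phase shift of the amplitude envelope.

    d = c * phase / (4 * pi * f_mod), with the phase wrapped to [0, 2*pi).
    """
    if modulation_freq_hz <= 0:
        raise ValueError("modulation frequency must be positive")
    phase = phase_shift_rad % (2.0 * math.pi)
    return SPEED_OF_LIGHT_M_S * phase / (4.0 * math.pi * modulation_freq_hz)


# Illustrative values: half a cycle of phase shift at an assumed 10 MHz modulation.
print(f"{amcw_distance_m(math.pi, 10e6):.2f} m "
      f"(max unambiguous: {unambiguous_range_m(10e6):.2f} m)")
```

The trade-off visible here is that a higher modulation frequency gives finer distance steps per radian of phase, but a shorter unambiguous range.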

This might seem like a small shift, but look at what it can do according to self-driving car startup Aurora:

Next:

Introducing the 4D RADAR (or Imaging RADAR)

When moving from 3D to 4D RADARs, we expect a much better resolution, and less noise. And this is what's happening with Imaging RADAR systems. These new sensors are becoming more and more popular, and have even been accused of being...

RADAR on steroids!

To understand better why, we need to understand the main concept they use: MIMO antennas.

MIMO Antennas

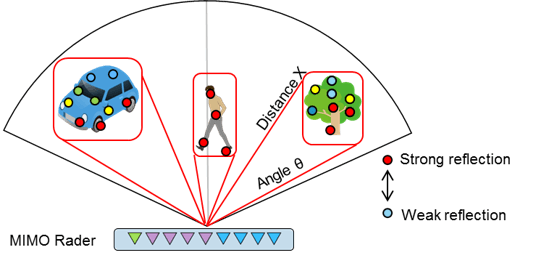

4D RADARs work using MIMO (Multiple Input Multiple Output) antennas. Dozens of mini-antennas are sending waves all over the place, both in horizontal and vertical directions.

In a 3D RADAR, it's only a horizontal process, so we don't have the height, and we have a pretty bad resolution.

By combining the signals from all these antennas, we can get a much better resolution, range, and precision. We could in fact detect obstacles inside a vehicle, and tell children from parents.
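A quick way to see why MIMO helps: each transmit/receive pair behaves like one element of a larger "virtual" array, so a few physical antennas can act like many. The sketch below shows this in 1D with a hypothetical layout of 3 transmitters and 4 receivers. The antenna positions, the function names, and the resolution rule of thumb are assumptions for illustration. A 4D RADAR would apply the same idea along the vertical axis too.

```python
import math
from typing import List


def virtual_array_positions(tx_positions: List[int], rx_positions: List[int]) -> List[int]:
    """Unique virtual element positions, in half-wavelength units.

    Each TX/RX pair behaves like a single element located at tx + rx.
    """
    return sorted({tx + rx for tx in tx_positions for rx in rx_positions})


def approx_angular_resolution_deg(num_elements: int) -> float:
    """Rule of thumb for a uniform array with half-wavelength spacing: about 2/N radians."""
    if num_elements <= 0:
        raise ValueError("need at least one element")
    return math.degrees(2.0 / num_elements)


# Hypothetical layout: 4 receivers side by side, 3 transmitters spaced 4 units apart.
rx = [0, 1, 2, 3]
tx = [0, 4, 8]
virtual = virtual_array_positions(tx, rx)
print(len(virtual), "virtual elements from", len(tx) + len(rx), "physical antennas")
print("resolution improves:", approx_angular_resolution_deg(len(rx)),
      "->", approx_angular_resolution_deg(len(virtual)), "degrees (rough estimate)")
```

With this layout, 7 physical antennas produce 12 evenly spaced virtual elements, and the wider virtual aperture is what tightens the angular resolution.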

So this is it, the power of self-driving cars in the palm of your hand.

Thanks to this addition, the RADAR can now get pretty cool results. What does it look like? Here's a demo from Waymo's blog post. Do you notice how well it can see obstacles that are barely visible on cameras?

As you can see, Imaging RADARs are pretty good too.

Conclusion: 4D LiDARs vs 4D RADARs — Which sensor is the best?

Back in the day, comparing them didn't make sense because the sensors were highly complementary. But today, these sensors can be in competition. The evolution of these sensors brings a lot of benefits, so let's take our comparison from the beginning, and see where it improved.

What we can note is how both sensors got better, and could work standalone, at least much better than their older versions.

- On the LiDAR side: FMCW makes it more robust to weather conditions and adds the velocity estimation.

- On the RADAR side: The overall better resolution removes lots of noise, and makes it easier to find distances, classify, etc...

So, let's take a look at both:

Sensors in Action

When I was at CES 2023, I could see these sensors in action through 2 startups: Aeva and Bitsensing. So let's visualize both solutions.

Aeva's 4D LiDAR:

Bitsensing's Imaging RADAR:

What's interesting is also to look at the applications of these sensors...

Applications of LiDAR vs RADAR and my predictions

In many fields, either LiDAR or RADAR dominates without the other being too present. It's the case in aviation, where RADAR systems are invaluable for their ability to measure the speed and distance of objects, even in adverse weather conditions. The same ability makes them essential for collision avoidance and adaptive cruise control systems in cars.

In air traffic control, RADAR technology is indispensable. It provides continuous monitoring of aircraft positions, ensuring safe and efficient management of airspace. LiDAR, while less common in this field, is increasingly being explored for its potential in airport mapping and obstacle detection.

RADAR, particularly synthetic aperture radar (SAR), is used for large-scale terrain mapping and monitoring changes in the Earth’s surface, such as detecting landslides or monitoring deforestation.

LiDAR sensors, on the other hand, are pivotal in autonomous vehicles for creating real-time 3D maps of the vehicle’s surroundings, enabling precise object detection and navigation. This capability is crucial for the safe and efficient operation of self-driving cars. LiDAR is also extensively used in surveying and mapping: its ability to generate high-resolution topographical maps makes it a preferred choice for geological surveys, urban planning, and environmental monitoring.

What's interesting is the case of autonomous vehicles: both can be used, and if they used to be complementary up to 2020, they are in PURE COMPETITION in 2025+. We saw it with Mobileye, who "deleted" the development of their FMCW LiDAR to focus on Imaging RADARs...

When I first wrote this article, I predicted that Imaging RADARs and FMCW LiDARs would be in heavy competition. It is indeed the case today.

My prediction is that Imaging RADARs could help companies get rid of the expensive LiDAR and rely on a Computer Vision solution only, while FMCW LiDARs could help companies enhance their LiDAR even more and get closer to autonomy.

Next Steps

- Learn about the FMCW LiDAR here