5 Best LiDAR Datasets to Learn & Process Point Cloud Data

A lot of things impress me about nature. The perfect symmetry of humans. The food chain, and how every single animal, even every insect, is useful to humankind as a whole. Yet there is one thing I find truly incredible: the way birds navigate. When you think about it, a bird can leave its branch, fly for dozens of kilometers, and then come back to that exact same branch. They have a built-in GPS (Global Positioning System).

When you look for LiDAR (Light Detection And Ranging) datasets, it can be hard to know where to go. Where do you find good LiDAR data online? Are there free LiDAR data sources?

Oftentimes, engineers begin with one dataset and, halfway through their project, realize it isn't great for another task they wanted to add. This can keep you from ever exploring other datasets, or worse, force you to restart your project from scratch.

Just like birds, you may need a GPS. To help engineers find data they can load as point clouds, feed to Machine Learning (or Deep Learning) models, or reuse through their labels for new tasks, I wrote a list of datasets to download and work on.

Like most LiDAR datasets, this article will be overfitted to the self-driving car problem, but I'll also provide resources for non-self-driving car datasets.

Let's begin:

Where to find LiDAR data for autonomous driving?

Here are a few datasets. Let's start with one of the most popular in the Computer Vision community:

1. The KITTI Dataset

The KITTI Dataset is probably the most popular dataset in autonomous driving, ever. Its paper was published in 2013, and the data was recorded with a Velodyne HDL-64E (a LiDAR with 64 laser beams, hence point clouds with 64 layers) and stereo cameras.
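To see what those Velodyne point clouds look like on disk: each KITTI scan is a flat binary file of little-endian float32 values, four per point (x, y, z, reflectance). Here is a minimal standard-library sketch of a reader; the name `read_kitti_bin` is hypothetical, and with NumPy the usual one-liner is `np.fromfile(path, dtype=np.float32).reshape(-1, 4)`.

```python
import struct
from typing import List, Tuple

Point = Tuple[float, float, float, float]  # (x, y, z, reflectance)


def read_kitti_bin(path: str) -> List[Point]:
    """Read a KITTI Velodyne scan stored as consecutive float32 quadruples."""
    with open(path, "rb") as f:
        data = f.read()
    # 4 floats x 4 bytes = 16 bytes per point
    if len(data) % 16 != 0:
        raise ValueError(f"{path}: size {len(data)} is not a multiple of 16 bytes")
    # iter_unpack walks the buffer one 16-byte little-endian record at a time
    return list(struct.iter_unpack("<4f", data))
```

A list of tuples is fine for inspecting a single scan; for training pipelines, NumPy arrays are the more practical choice.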

A few things I love about it:

- First, it's very diverse: you can work on stereo vision, optical flow estimation, depth completion and prediction, odometry and SLAM, 2D and 3D Object Detection, Bird's Eye View Detection, Multi-Object Tracking, Segmentation, and more...

It's so diverse that each of my courses has been built using this dataset. It's the perfect "generalist" dataset: there's almost everything you can think of.

- Second, this dataset gives you the right information: not only the Velodyne point clouds, but also the projection matrices, calibration files, raw data, and all the conversion schematics.
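Those calibration files are what make LiDAR-camera fusion possible. In the object-detection benchmark, each calib file has a `P2:` line (12 numbers, the 3×4 projection matrix of the left color camera), an `R0_rect:` line (9 numbers, the rectifying rotation) and a `Tr_velo_to_cam:` line (12 numbers, the LiDAR-to-camera transform), and the KITTI devkit describes the projection as `y = P2 * R0_rect * Tr_velo_to_cam * x`. A minimal pure-Python sketch of that chain, with hypothetical function names (NumPy would reduce it to a few matrix products):

```python
from typing import Dict, List, Optional, Sequence, Tuple

Matrix = List[List[float]]


def parse_kitti_calib(text: str) -> Dict[str, List[float]]:
    """Parse 'KEY: v1 v2 ...' lines into a dict of flat float lists."""
    calib: Dict[str, List[float]] = {}
    for line in text.splitlines():
        if ":" not in line:
            continue  # skip blank or malformed lines
        key, values = line.split(":", 1)
        calib[key.strip()] = [float(v) for v in values.split()]
    return calib


def to_matrix(flat: Sequence[float], rows: int, cols: int) -> Matrix:
    """Reshape a row-major flat list into a rows x cols nested list."""
    if len(flat) != rows * cols:
        raise ValueError(f"expected {rows * cols} values, got {len(flat)}")
    return [list(flat[r * cols:(r + 1) * cols]) for r in range(rows)]


def matvec(m: Matrix, v: Sequence[float]) -> List[float]:
    """Multiply a nested-list matrix by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def project_velo_to_image(point: Sequence[float],
                          calib: Dict[str, List[float]]) -> Optional[Tuple[float, float]]:
    """Project a Velodyne point to pixel (u, v) in the left color camera.

    Applies y = P2 * R0_rect * Tr_velo_to_cam * x in homogeneous coordinates.
    Returns None for points behind the camera.
    """
    x, y, z = point[0], point[1], point[2]  # ignore reflectance if present
    tr = to_matrix(calib["Tr_velo_to_cam"], 3, 4)
    r0 = to_matrix(calib["R0_rect"], 3, 3)
    p2 = to_matrix(calib["P2"], 3, 4)
    cam = matvec(tr, [x, y, z, 1.0])    # Velodyne frame -> reference camera frame
    rect = matvec(r0, cam)              # apply the rectifying rotation
    u, v, w = matvec(p2, [*rect, 1.0])  # 3x4 projection onto the image plane
    if w <= 0:
        return None
    return u / w, v / w
```

Dropping points with `w <= 0` matters: without it, points behind the camera would be projected "through" the lens and land on the image upside down.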

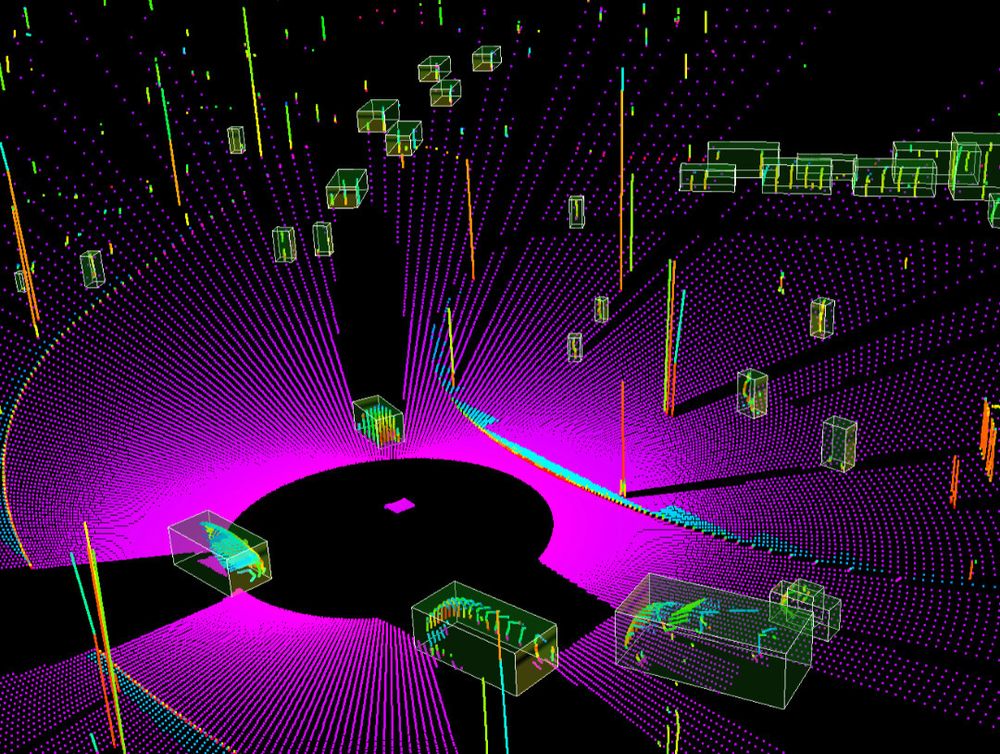

Using this, my edgeneers (cutting-edge engineers) have built nice sensor fusion systems in my VISUAL FUSION course.

So this is the first one I recommend, especially if you're a student or researcher and want something easy to use and scalable.

👉🏼 You can try and explore the KITTI dataset here: https://www.cvlibs.net/datasets/kitti/

But KITTI has the generalist flaw: it's not specialized in LiDAR.

This means that many of the tasks I listed, like semantic segmentation, are labeled only on the camera images. The LiDAR point clouds are provided, but they carry no point-wise labels (the 3D object boxes can, however, be moved into the LiDAR frame using the calibration files).

This is why I recommend:

2. Semantic KITTI: A Dataset for Semantic Scene Understanding using LiDAR Sequences

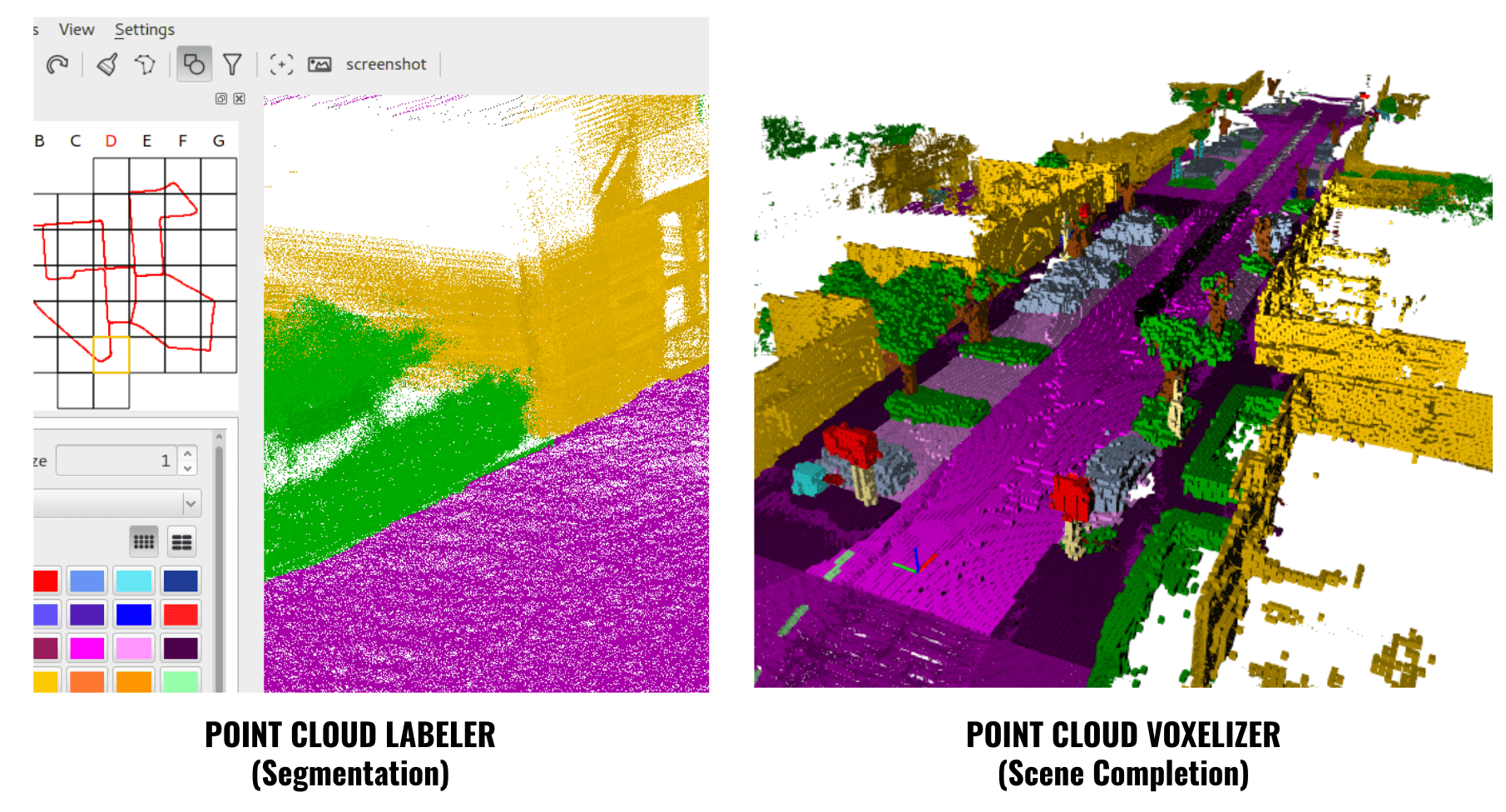

This dataset, from 2019, is built on top of KITTI and adds point-wise segmentation labels to the point clouds. The main tasks you can do with it are semantic segmentation, panoptic segmentation, and scene completion.
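Those labels come as one `.label` file per scan, holding one uint32 per point in the same order as the points of the matching Velodyne `.bin` file. The lower 16 bits store the semantic class and the upper 16 bits the instance id, which is how the official semantic-kitti-api splits them. A minimal standard-library sketch, with hypothetical function names:

```python
import struct
from typing import List, Tuple


def decode_semantic_kitti_labels(data: bytes) -> List[Tuple[int, int]]:
    """Split each little-endian uint32 label into (semantic_class, instance_id)."""
    if len(data) % 4 != 0:
        raise ValueError("label data must be a whole number of uint32 values")
    labels = []
    for (raw,) in struct.iter_unpack("<I", data):
        # lower 16 bits: semantic class, upper 16 bits: instance id
        labels.append((raw & 0xFFFF, raw >> 16))
    return labels


def read_semantic_kitti_labels(path: str) -> List[Tuple[int, int]]:
    """Read a .label file from disk and decode it."""
    with open(path, "rb") as f:
        return decode_semantic_kitti_labels(f.read())
```

Since labels and points share the same order, pairing them is a simple `zip` over the two lists.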

This dataset also comes with tools to label and voxelize the point clouds.
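To get a feel for what "voxelizing" means, here is a minimal occupancy-grid sketch: each point is assigned to the cubic voxel that contains it, and the grid records how many points fell into each voxel. This is not the SemanticKITTI tool itself; the function name is hypothetical and the 0.2 m voxel size is only an example value.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Sequence, Tuple

Voxel = Tuple[int, ...]


def voxelize(points: Iterable[Sequence[float]], voxel_size: float = 0.2) -> Dict[Voxel, int]:
    """Map points to integer voxel indices and count points per voxel.

    Returns {(i, j, k): number_of_points}; the keys are the occupied voxels.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    counts: Counter = Counter()
    for p in points:
        # math.floor (not int) so negative coordinates land in the right voxel
        idx = tuple(math.floor(c / voxel_size) for c in p[:3])
        counts[idx] += 1
    return dict(counts)
```

Only the first three coordinates are used, so scans that also carry reflectance can be passed in directly.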

👉🏼 You can learn more about this dataset here: http://www.semantic-kitti.org/index.html and find their paper here.

But we're not done with KITTI just yet; there is another one...

3. KITTI-360: A large-scale dataset with 3D&2D annotations

This dataset is from 2020, and it's also really cool: you can use it for 3D Object Detection, as well as LiDAR segmentation.

A few tasks available: 2D Semantic & Instance Segmentation, 3D Semantic & Instance Segmentation, 3D Bounding Box Detection, 3D Scene Completion, Novel View Synthesis, and Semantic SLAM.
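A building block you meet quickly with 3D bounding boxes is checking which LiDAR points fall inside a box, for example to count points per object or to crop an object out of a scan. A minimal sketch, assuming a box described by its center, its size (length, width, height) and a yaw angle around the vertical axis of a z-up frame; this is a common convention, not KITTI-360's own annotation format, and `points_in_box` is a hypothetical name.

```python
import math
from typing import List, Sequence


def points_in_box(points: Sequence[Sequence[float]],
                  center: Sequence[float],
                  size: Sequence[float],
                  yaw: float) -> List[bool]:
    """Return, for each point, whether it lies inside an oriented 3D box.

    center = (cx, cy, cz), size = (length, width, height),
    yaw = rotation around the vertical z axis, in radians (z-up frame assumed).
    """
    cx, cy, cz = center
    length, width, height = size
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    inside = []
    for p in points:
        dx, dy, dz = p[0] - cx, p[1] - cy, p[2] - cz
        # rotate the offset by -yaw to express it along the box's own axes
        local_x = cos_y * dx + sin_y * dy
        local_y = -sin_y * dx + cos_y * dy
        inside.append(
            abs(local_x) <= length / 2
            and abs(local_y) <= width / 2
            and abs(dz) <= height / 2
        )
    return inside
```

Rotating the points into the box frame, rather than rotating the box, keeps the test a simple axis-aligned comparison.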

On their page, you can watch their trailer and try their live Point Cloud Visualizer.

If you're looking for a dataset to experiment with, this one is worth a try.

👉🏼 Explore the KITTI-360 Dataset here: https://www.cvlibs.net/datasets/kitti-360/demo.php

KITTI isn't the only dataset available; here are a few more:

4. Waymo Open Dataset

It's no secret: Waymo has cars. These cars collect data that can be used to train models, and Waymo makes part of it publicly available (yes, for free, for non-commercial use)!

This dataset is split into two parts:

- The Perception Dataset, with high-resolution sensor data and labels for 2,030 segments, including panoptic segmentation labels.

- The Motion Dataset, with object trajectories and corresponding 3D maps for 103,354 segments.

Unlike the datasets above, this one was recorded with several LiDARs: 1 mid-range LiDAR and 4 short-range LiDARs. But exactly like KITTI, you can access the synchronized LiDAR and camera streams, projection matrices, calibration, poses, etc.
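With several LiDARs, the first step is usually to bring every scan into one common frame before merging them. A minimal sketch, assuming each sensor comes with a 4×4 sensor-to-vehicle extrinsic matrix given as nested lists; the function names are hypothetical, and this is not the Waymo Open Dataset's own tooling, which handles its file format for you.

```python
from typing import Iterable, List, Sequence, Tuple

Matrix4 = Sequence[Sequence[float]]
Point3 = Tuple[float, float, float]


def transform_points(points: Iterable[Sequence[float]], extrinsic: Matrix4) -> List[Point3]:
    """Apply a 4x4 rigid transform (sensor -> vehicle) to (x, y, z) points."""
    out = []
    for p in points:
        x, y, z = p[0], p[1], p[2]
        # each of the first 3 rows = rotation row . (x, y, z) + translation
        out.append(tuple(row[0] * x + row[1] * y + row[2] * z + row[3]
                         for row in extrinsic[:3]))
    return out


def merge_lidars(scans: Iterable[Tuple[Iterable[Sequence[float]], Matrix4]]) -> List[Point3]:
    """Concatenate several LiDAR scans after moving each into the vehicle frame.

    scans: iterable of (points, extrinsic) pairs, one pair per sensor.
    """
    merged: List[Point3] = []
    for points, extrinsic in scans:
        merged.extend(transform_points(points, extrinsic))
    return merged
```

Merging in the vehicle frame means a single set of boxes and labels applies to the points from all five sensors.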

You also get lots of labels: Bounding Box Labels, 2D Video Panoptic Segmentation Labels, Keypoint Labels, 3D Semantic Segmentation Labels, 2D-3D Association Bounding Boxes, and even monocular 3D Bounding Boxes.

This makes it one of the best (if not the best) datasets for learning and working on LiDAR. The motion subset also provides maps and object trajectories, so you can build a more complete solution.

Unlike the KITTI Dataset, this dataset also covers many different weather and lighting conditions, puts a focus on construction scenes and pedestrians, and, interestingly, spans many different geographic locations.

It's not perfect, though: if you'd like to try Optical Flow or Stereo Vision, you will probably lack the labels, so for those I'd recommend going back to KITTI.

👉🏼 Explore the Waymo Dataset: https://waymo.com/open/

Finally:

5. NuScenes

Created by NuTonomy (now Motional), NuScenes is also a really good dataset for autonomous driving and LiDAR. If you want to play with point cloud data, you're going to enjoy it!

It was released in 2019, recorded in Boston and Singapore, covers lots of diverse conditions, and is suited for both Computer Vision and LiDAR. In fact, in 2020, the Motional team released NuScenes LiDARSeg, an extension specialized in LiDAR segmentation.

Although it has 6 cameras, the data was collected with only one LiDAR sensor. Beyond LiDAR, there are also NuImages (2D images) and NuPlan, a planning benchmark, which means you can also train simulated agents for Motion Planning.
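If you want to poke at the raw files, a NuScenes LiDAR sweep (`.pcd.bin`) is a flat array of little-endian float32 values, five per point (x, y, z, intensity, ring index), and a NuScenes LiDARSeg label file holds one uint8 class index per point, in the same order. A minimal standard-library sketch that decodes both and counts points per class; the function names are hypothetical, and in practice the official nuscenes-devkit handles loading for you.

```python
import struct
from collections import Counter
from typing import Dict, List, Tuple


def read_nuscenes_points(data: bytes) -> List[Tuple[float, ...]]:
    """Decode a sweep of 5 float32 per point and keep (x, y, z, intensity)."""
    if len(data) % 20 != 0:  # 5 floats x 4 bytes per point
        raise ValueError("sweep size must be a multiple of 20 bytes")
    return [rec[:4] for rec in struct.iter_unpack("<5f", data)]


def points_per_class(points_data: bytes, label_data: bytes) -> Dict[int, int]:
    """Count points per LiDARSeg class index (one uint8 label per point)."""
    points = read_nuscenes_points(points_data)
    labels = list(label_data)  # iterating over bytes yields ints in 0..255
    if len(labels) != len(points):
        raise ValueError(f"{len(points)} points but {len(labels)} labels")
    return dict(Counter(labels))
```

The length check is a cheap guard against pairing a label file with the wrong sweep.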

This dataset offers 3D object detection, segmentation, and many other tasks.

👉🏼 Explore the dataset here: https://www.nuscenes.org/nuscenes

More Datasets to Work on Point Cloud Data, and Non-Self-Driving Car Datasets

So far, we've covered 5 datasets, 4 of them specialized in LiDAR. I'd like to complete this article with a few additional datasets you may find useful too:

- ApolloScape: https://apolloscape.auto/index.html

- PandaSet: https://pandaset.org/

- Argoverse: https://www.argoverse.org/ (Argo AI has shut down, so I can't tell whether this site will still exist by the time you read this)

For a more complete list, including non-self-driving car datasets (to work on topographic maps, or simply to find your country's national LiDAR dataset):

- Complete List: https://data.world/datasets/lidar

If you liked this article, I recommend checking out my introduction to LiDAR: Point Clouds Fast Course: Introduction to 3D Perception.

Jeremy