Introduction to Loop Closure Detection in SLAM

Sean was walking endlessly along the beach. "How long have I been lost here???" he asked out loud. But nobody answered. Sean was alone. Lost in a desert island, with nobody to rescue him. "What is this beach?! Where am I?". He was walking, again and again, until suddenly...

"Wait a minute! I've already seen this red rock! I must be at the entry to the beach!"

He then realized...

"I'm not lost! I'm running in circles!"

Dear reader, welcome to the world of Loop Closure!

Loop closure is a fundamental algorithm in Robotics and Computer Vision. But where it's the most important is in SLAM. So before I jump in loop closure, a 1-min intro to SLAM.

The 1-min Intro to SLAM (Simultaneous Localization And Mapping)

Have you ever been to Manhattan? It's a place I really love. And in part because it's really convenient for walking, wandering, and discovering new places. So picture yourself in Manhattan, and imagine being asked to walk in the city, and every time, keep track of where you are.

So you start in Time Square, and join the 5th Avenue in a few minutes. You then walk along the 5th Avenue towards Central Park, and as you get closer, you're perfectly able to update your position on a map.

This is SLAM.

It stands for Simultaneous Localization And Mapping, because you're simultaneously creating a map of Manhattan and locating yourself in it.

Now imagine walking for 20 minutes along the 5th Avenue, starting at Abercrombie, and then suddenly after 20 minutes, being asked "Which number are you EXACTLY?".

Then you don't know. Even if you counted the number of steps you took, there is a slight risk of error. Who knows? Are you number 100? 102? 122?

This is what we can call accumulated errors.

And this is why Loop Closure is important. Loop closure is the equivalent of seeing the Apple Store along the 5th Avenue, and then immediately thinking "Oh, it's Apple! We're at 767!"

And robots need it!

Because Loop Closure is what helps a robot understand that an object has already been visited, and therefore the robot will update its location and the map accordingly. Imagine being blindfolded, and then transported at the back of a car with armed kidnappers, only to be released near the Eiffel Tower. Suddenly, you realize "Hey, I know this place!" and you're (somewhat) relieved.

This is loop closure! It's highly involved in SLAM, but also in Augmented Reality, and even Object Tracking. By the way, you can learn more about SLAM in my course on SLAM which covers many of the algorithms and help you run these.

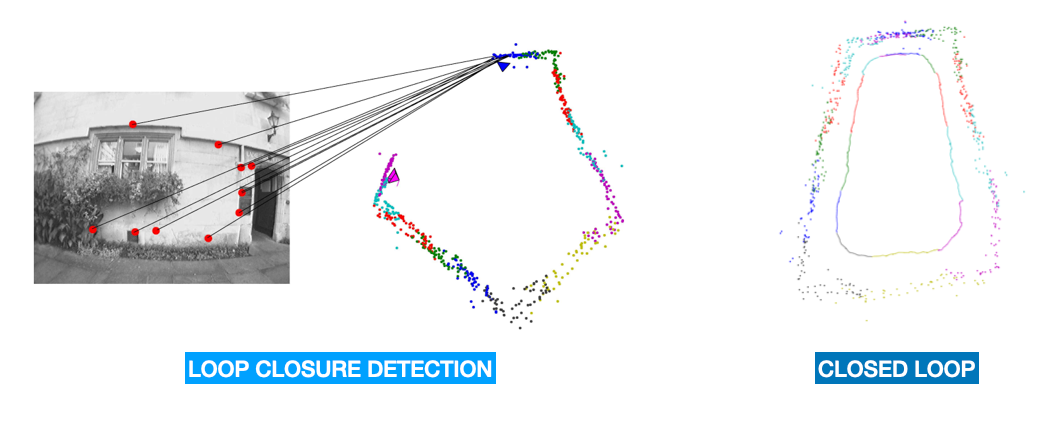

An example? See how this robot drives, and then suddenly recognize a place, and update the entire map by aligning the locations.

Which leads us to:

How Loop Closure Works

There are several loop closure techniques. And in fact, I talk about many of them in my SLAM course. In this post, I'm going to give you a brief intro. There are lots of ways to classify the algorithms, but because I'm a simple guy, let's simply classify them as:

- Vision Based

- LiDAR Based

Vision Based Loop Closure Detection (Visual Features)

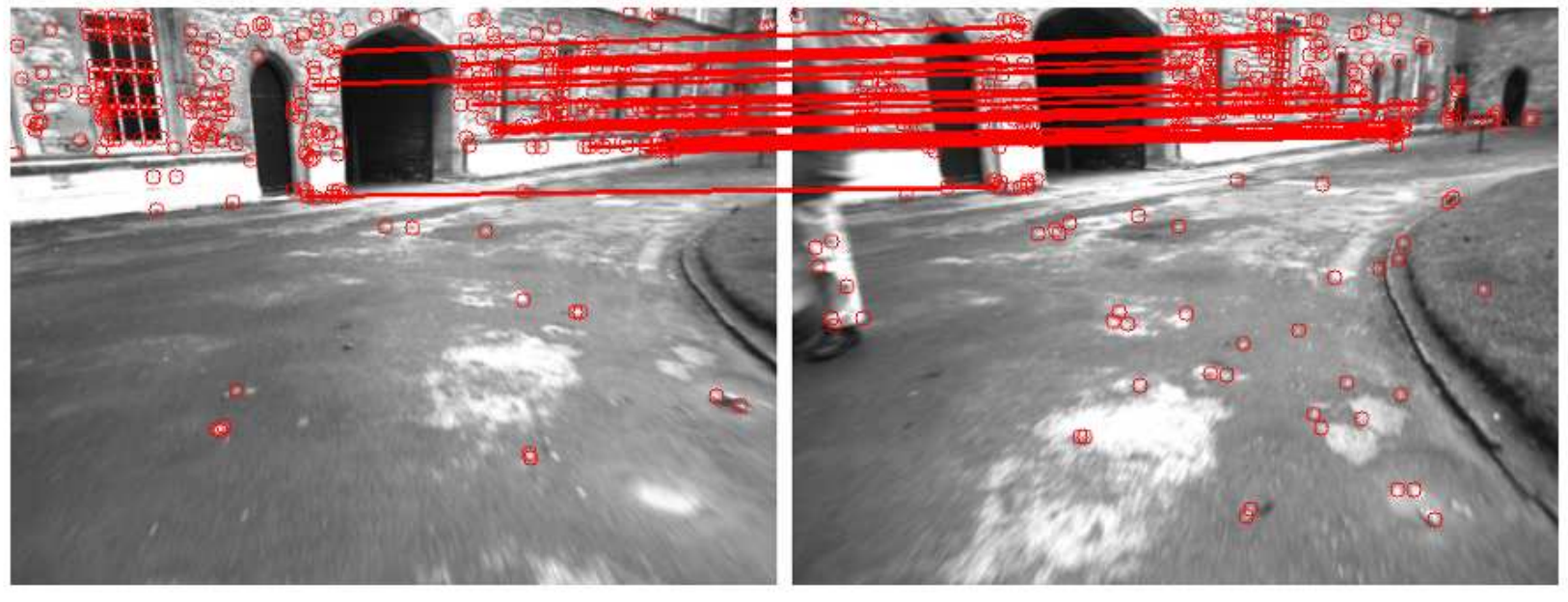

When we have a camera, the first things we want to look at are features. Feature-based methods rely on identifying distinctive features in the environment, such as corners or edges, and matching them between different sensor data frames.

The algorithms for this are called SIFT (Scale-Invariant Feature Transform), SURF (Speeded-up Robust Features), ORB (Oriented Fast and Rotated BRIEF), etc.... If you want an intro to these techniques, you can check my post Visual Features & Tracking: The Fundamentals.

In a traditional feature matching process, like for example in Object Tracking, we're doing something like this:

- Detect the features using a feature detector (SIFT, SURF, ORB, AKAZE, ...)

- Describe the features using a feature descriptor (SIFT, SURF, ...)

- Match the features in the current image with the memory features (from previous images), this using RANSAC or other similar algorithms

- Do your application: tracking, loop closure, etc...

An example here:

So let's note this as our pillar: if we want to detect previously visited location, we'll do it via visual features.

In SLAM, what we mainly do is to build what's called a Bag-Of-Words.

Bag Of Words

In Simultaneous Localization and Mapping (SLAM), we want to represent the visual features as a fixed-length vectors and match them efficiently to detect loop closures. And we want the features we're looking at to be matched based on some sort of dataset, because it'd make things so much easier. If you look at a window, you know it's a window because you've seen hundreds of windows before. But if you look at an unknown object, matching it is much harder.

In a Bag of Words approach, we're matching our descriptor features to visual words in a fixed-size vocabulary. By matching the features to a pre-trained vocabulary, we're able to do the loop closure detection much faster (computations are more efficient).

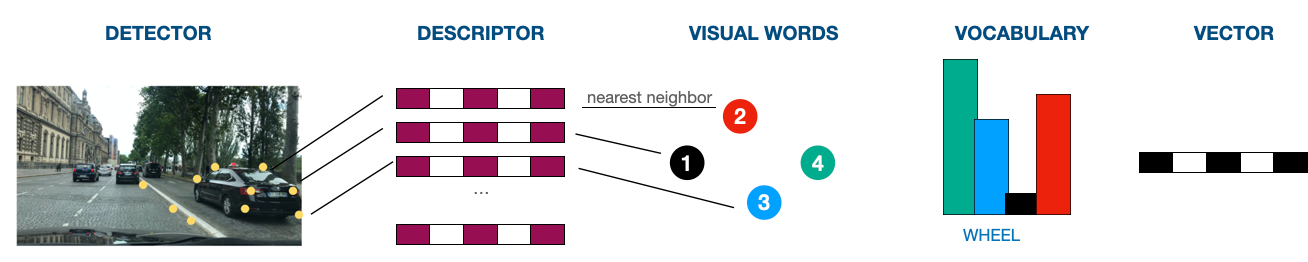

So it goes like this (simplified):

- Feature Detection (SIFT, SURF, ORB, ...)

- Feature Description (SIFT, SURF, ORB, ...)

- Bag of Words: The raw descriptors are assigned to the nearest visual word in the vocabulary, and a histogram of visual words is constructed for each image. This histogram represents the BoW vector for the image, which is a fixed-size representation of the image features. This BoW vector can then be used for efficient matching and loop closure detection.

It kinda looks like this:

And then we're matching the vectors together with the previous keyframes when we need to run place recognition.

So here's an example here with an algorithm called ORB SLAM:

So this is for Visual SLAM Algorithms, and it works in known environments, because we're working with a Dataset. Sometimes, the environment might change, it may rain, or it may be dark, and often, our Bag-of-Word will fail. This is why there are also algorithms such as FAB-MAP (Fast Appearance Based Mapping) that can work better in dynamic environments or complex environments with lots of variability.

Next, let's move to LiDAR:

LiDAR Based Loop Closure Detection (Direct Methods)

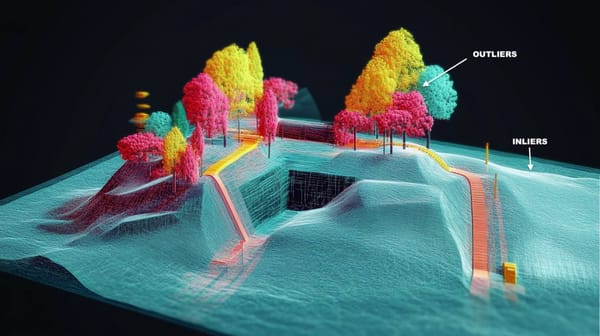

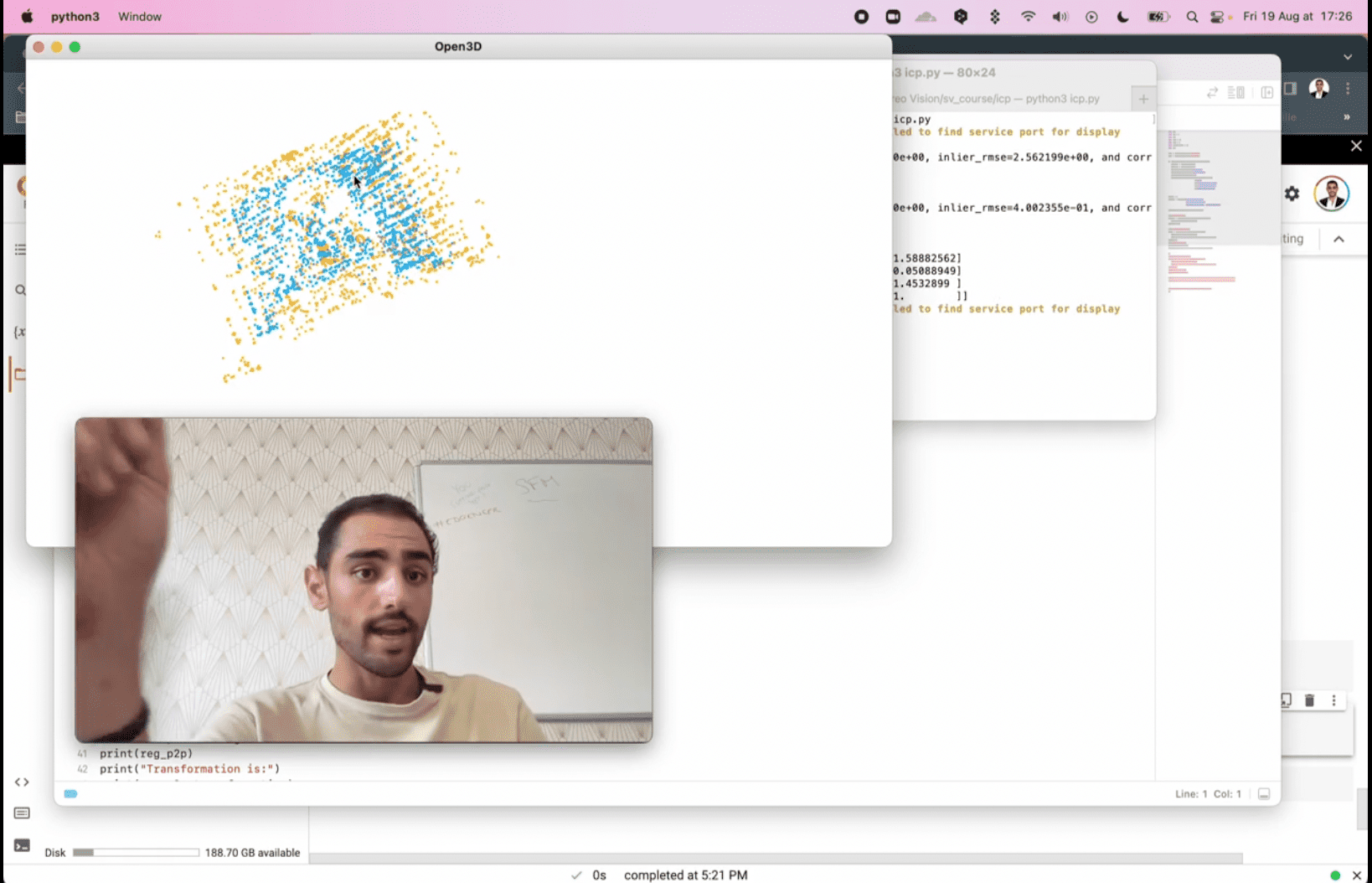

In a LiDAR, we don't extract any feature. We work directly with the point clouds. Direct methods use the raw sensor data to estimate the robot's position and recognize a previously visited location. If you've heard of algorithms like ICP, or Bundle Adjustment, or Pose Graph Estimation, this is it. So let's see some of them:

Iterative Closest Point (ICP)

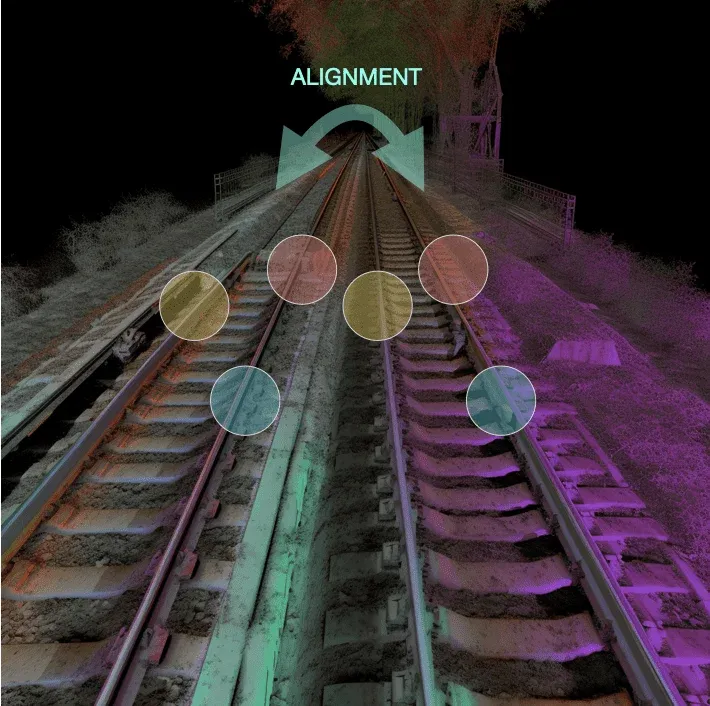

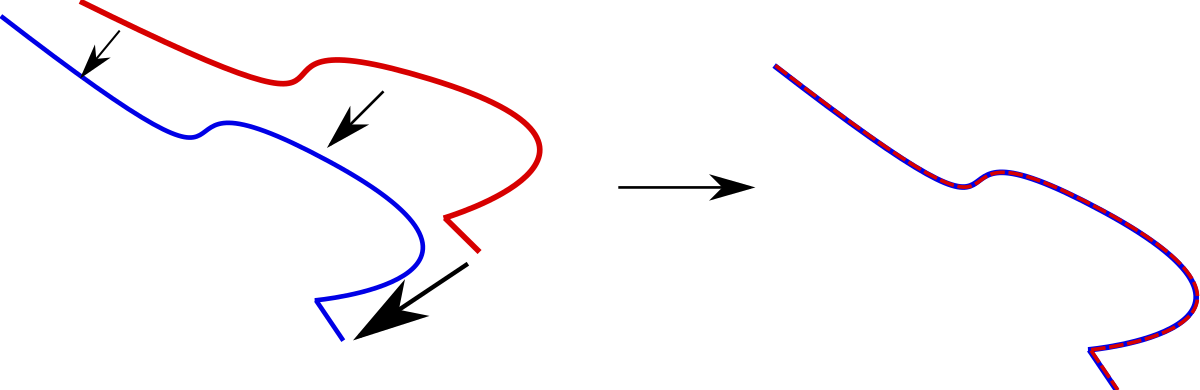

For example, the Iterative Closest Point Algorithm (ICP) is a method used to align two point clouds iteratively by minimizing the distance between their corresponding points. It is commonly used for 3D Point Cloud Registration tasks, such as aligning point clouds from LiDAR sensors or depth cameras. ICP can work well in environments with limited feature points, such as indoor environments or dense forests.

The ICP algorithm starts by finding the corresponding points between the current point cloud and the previously recorded point clouds in the map. These corresponding points are used to estimate the transformation between the current point cloud and the map using a least-squares approach. The estimated transformation is then used to align the current point cloud with the map.

Bundle Adjustment

Bundle Adjustment, on the other hand, is an optimization technique that estimates the camera's intrinsic and extrinsic parameters by minimizing the reprojection error of a set of 2D image points onto a 3D model.

It can work well in environments with a high number of feature points, such as urban environments or open fields. Where we use it the most is probably 3D Reconstruction.

Similarly to how we've done in the Feature-Based techniques, loop closure detection will be done by matching and aligning points from time (t) with points from time (t-1).

What about Deep Learning?

Hey! Are we crazy? We haven't talked about Deep Learning, Transformers, and all these models until now! What's up?

Well, let's just say it's a world that is highly bayesian, and that prefers traditional approaches. But it doesn't mean Deep Learning isn't involved. For example, we discussed matching detected features with ORB or SIFT, but we can also detect these features with Convolutional Neural Networks.

Similarly, these days, there are SLAM algorithms based entirely on Deep Learning; but I gotta tell you; this is mostly in research for now, and most SLAM applications, and even most loop closure detection applications are based on what I shared so far.

Loop Closure Candidates

An important point in loop closure is deciding how to match features from time t and t-1. There are TONS of reasons why this could go wrong. You could look at a point cloud of a house, but it's not the same house you think you've visited. You could look at a barrier, but it's not the right one. A window, but it's a building with 100s of windows.

There are solutions to matching features, or as we call them, loop closure candidates. One of them is to use the Bag-of-Words / FAB-MAP. But to improve this, there are other things we can do:

- Geometric Constraints: The geometric constraints between the current observation and the previously visited locations can be used to generate loop closure candidates. For example, the distance and orientation between the current observation and the previously visited locations can be compared, and the ones with the closest match can be selected as loop closure candidates.

- Time-Based: We can include time-based methods that will use the temporal information in the robot's trajectory to generate loop closure candidates. For example, the robot's position and orientation at different time steps can be compared to identify the closest matches, or the time intervals between the robot's visits to different locations can be used to generate loop closure candidates.

- Region-Based: Similarly, you can set a Region of Interest (ROI) to determine what you'll match, and don't match. This can save computations, as well as potential errors.

Map Correction

Earlier, we've seen how to detect loop closure candidates, and how to confirm that a place has been seen before.

In the sentence "Hey! I've seen this rock! I must be at the entry of the beach!" — we have covered the "Hey! I've seen this rock!" part. But not the "I must be at the entry of the beach!".

This second part belongs to map correction.

How does the map correction work? It depends on the type of SLAM algorithm you're using. Loop Closure "Detection" is about detecting the position of landmarks you've already seen. But map adjustment is another module in SLAM.

There are usually several types of SLAM algorithms, for example, Graph-SLAM is about optimizing a graph based on landmarks. When using graph SLAM, map correction will be done via graph optimization.

But if you're using EKF-SLAM for example, which uses an Extended Kalman Filter, map correction will be done by incorporating the information from loop closures and observations of landmarks into the Kalman filter's state estimate. If you're into Kalman Filters, it's about the update step.

So this isn't really part of loop closure, this is what happens right after!

Overview

We've seen a few things concerning the loop closure detection process, let's review them:

- Loop closure is a sub algorithm of SLAM that is about identifying previously visited locations and using them to correct the accumulated errors in the robot's pose estimation.

- Loop closure detection can be achieved using different methods, including geometric constraints, appearance-based methods, and time-based methods.

- Loop closure candidates are potential matches between the current observation and the previously visited locations in the map.

- False positives in loop closure can be caused by similar-looking locations, lighting changes, sensor noise, or other factors. For that, we can use geometric constraints, region constraints, and even better algorithms like FAB-MAP.

- We mainly run loop closure detection on images and LiDAR point clouds. For this, we use Visual Features on images, or algorithms like ICP or Bundle Adjustment on point clouds.