High-Level vs Low-Level Motion Planning for Self-Driving Cars

The McDonald brothers got out of their cars and looked at Ray Kroc:

— "Franchise!" he yelled.

— "Beg your pardon?" replied one in confusion.

— "Franchise! Franchise the damn thing! It's too damn good for just one location. There should be McDonald's everywhere! Coast to coast. Sea to shining sea... [...] When I saw these lines, your whole operation, tasted your product, I knew what needed to happen. Franchise! Franchise! Franchise! Franchise! Franchise!"

In the movie "The Founder", there is a scene where Ray Kroc, the visionary behind McDonald's, pitches the idea of franchising to the McDonald brothers for the first time. Yet, while it was (obviously) the right thing to do, the brothers' first reaction was negative, and they even said they had already tried. So why was Ray the one who could make it happen?

Because Ray was a strategist, while the McDonald brothers were tacticians. In many business lessons, we are taught that skills should be complementary. The truth is, the best combination mixes strategists, who have an incredible vision of what a product can bring, with tacticians, who have incredible know-how.

This idea, which is behind building startups, is also behind the implementation of the brain of self-driving cars: Motion Planning.

A self-driving car is built around 4 Pillars: Perception (see objects, roads, ...), Localization (place yourself in a map), Planning (drive in the map), and Control (move the vehicle).

The Motion Planning problem may look like a set of elements such as "Bézier curve fitting" or "finite state machines", or even "A* and Dijkstra!!!", but it's actually more complex than this. There are 2 main sub-topics to handle:

- High-Level Motion Planning: How you plan a route from A to B. (strategist)

- Low-Level Motion Planning: How you overtake a vehicle, generate trajectories, etc... (tactician)

In this article, I'd like to talk about these 2 elements of path planning, and in a third point, I'll talk about edge cases.

So let's begin:

High-Level Motion Planning

What are the main steps when going anywhere? You pull out your phone, use a GPS app to decide on the main streets you'll take, and then, at every moment, decide how fast you're driving, whether you should overtake, etc... The GPS part is high-level, but the "overtaking" and "stop at red light" part is low-level.

We will need two things here:

- A map of the world – referred to as HD Maps.

- A motion planning algorithm to create a route from A to B

So let's start with the maps.

HD Maps

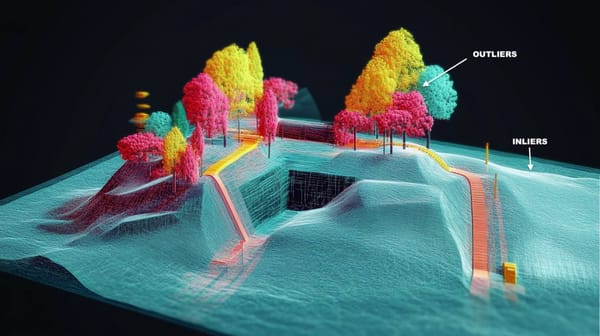

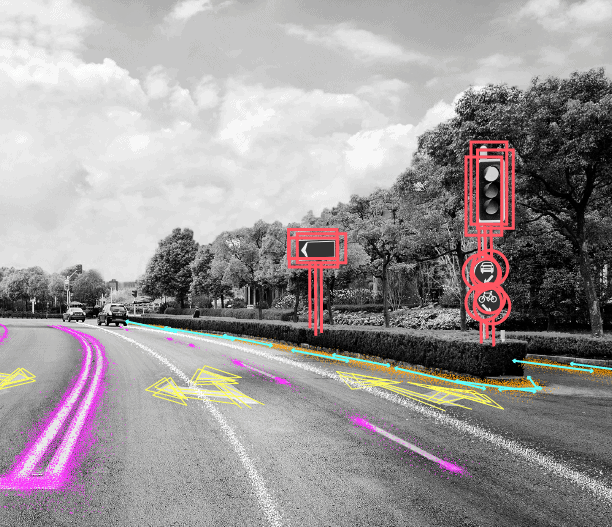

So what is an HD Map? Well, it's a map, like you have on Waze/Google Maps, but with centimeter-level accuracy. The difference is: everything is mapped. Not just a street, but also how many lanes it has, the width of each lane, the position of every traffic sign in this lane, the location of speed bumps, etc...

We usually build these using LiDARs, and some companies like HERE specialise in mapping and can provide you with a map. Note that it's a manual process you do once and reuse all the time.

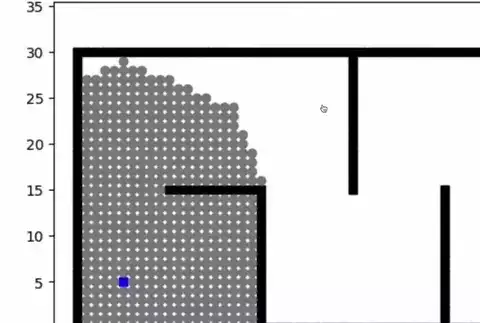

So what does it look like? The first time I got into a self-driving car, I could actually see one. The car had a screen, with an interface showing a visualizer, and a software named "QGIS". What is it? It's a geographic information system (GIS) application, where you can open maps, add your GPS position, and therefore drive in the map.

Let's see it in action:

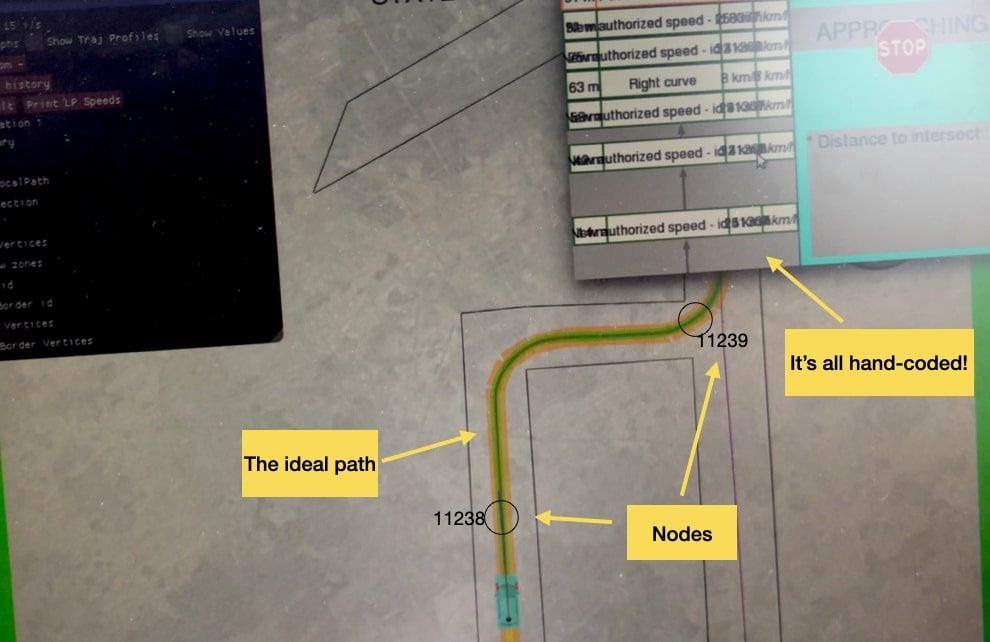

See the red area on the left, and the values for the car driving in the roundabout? Well, the map has 100x more info than this. Every lane has an ideal trajectory, with nodes or checkpoints every 10 meters.

How can you use it for autonomous driving? When you say "I want to get to the coffee shop" you actually say "I'm on Node 238 and I want to get to node 1129". Then, an algorithm plans for the route from node to node.
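To make the "I'm on Node 238" idea concrete, here is a minimal sketch of snapping a position to the closest map node. The node coordinates, the `MAP_NODES` dictionary, and the `nearest_node` helper are all made up for illustration; a real HD map would store far more per node and use a spatial index rather than a linear scan.

```python
import math

# Hypothetical map nodes: node id -> (x, y) in meters, in a local map frame.
MAP_NODES = {
    238: (0.0, 0.0),
    239: (10.0, 0.0),
    240: (20.0, 0.5),
    1129: (480.0, 120.0),
}

def nearest_node(position, nodes):
    """Return the id of the node closest to `position` (Euclidean distance)."""
    return min(nodes, key=lambda node_id: math.dist(position, nodes[node_id]))

# The vehicle position and the coffee shop position, both assumed values.
start = nearest_node((1.2, -0.4), MAP_NODES)
goal = nearest_node((479.0, 121.5), MAP_NODES)
print(start, goal)
```

Once both ends are expressed as node ids, the routing problem becomes a pure graph search.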

One quick note: Maps can also be dynamic. This is what Mobileye does in their "AV Map". Rather than doing the mapping once, they let their fleet do it continuously. What happens is, they sell ADAS devices to carmakers, and millions of vehicles are equipped with their tech. Then, the process is simple: tons of cars collect information, send it back to a server, and then all the data is aggregated and combined into one, random forest style!
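As a rough intuition for the aggregation step, here is a toy sketch that fuses many noisy reports of a single landmark with a per-axis median. This is only an assumption about what "combining into one" could look like, not Mobileye's actual pipeline; the `aggregate_reports` function and the sample coordinates are invented for the example.

```python
from statistics import median

def aggregate_reports(reports):
    """Fuse noisy (x, y) reports of one landmark using a per-axis median."""
    xs = [x for x, _ in reports]
    ys = [y for _, y in reports]
    return (median(xs), median(ys))

# Five cars report the same traffic sign; the last report is an outlier.
reports = [(100.2, 50.1), (99.8, 49.9), (100.0, 50.0), (100.1, 50.2), (112.0, 47.0)]
fused = aggregate_reports(reports)
print(fused)  # (100.1, 50.0)
```

The median is used here because a single bad report barely moves the result, which is the point of letting many vehicles vote.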

So this goes into the map I showed earlier. So now let's see the actual algorithms.

The High-Level Planning Algorithms

See the map as a graph, with nodes. What is the first "shortest path" algorithm that comes to mind to go from A to B? You guessed it! Dijkstra! Dijkstra is a search algorithm, and it's probably the first one everybody learns at school in computer science.
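Here is a minimal Dijkstra sketch on a tiny road graph. The node ids reuse the ones from this article, but the connections and edge costs (distances in meters) are invented for the example, and `ROAD_GRAPH` and `dijkstra` are illustrative names only.

```python
import heapq

def dijkstra(graph, start, goal):
    """Return (total_cost, path) of the cheapest route, or (inf, []) if none."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue  # already expanded with a cheaper cost
        visited.add(node)
        for neighbor, edge_cost in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + edge_cost, neighbor, path + [neighbor]))
    return float("inf"), []

# Hypothetical road graph: node id -> {neighbor id: edge length in meters}.
ROAD_GRAPH = {
    238: {239: 10.0, 300: 25.0},
    239: {240: 10.0},
    240: {255: 30.0},
    300: {255: 40.0},
    255: {},
}

cost, route = dijkstra(ROAD_GRAPH, 238, 255)
print(cost, route)  # 50.0 [238, 239, 240, 255]
```

In practice the edge cost would rarely be pure distance; travel time or road type are common alternatives, and the algorithm stays the same.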

There are 3 families of search algorithms that people use:

- Discrete Planners: Algorithms that will discretize the environment into cells, and then create a trajectory from A to B. Algorithms like Dijkstra, Breadth First Search (BFS), Depth First Search (DFS), or A* and Hybrid A* are the main ones. I would say most implemented robot motion planning algorithms use a version of A* as v1 (and iterate from there).

- Sample-Based Planners: Sampling-based algorithms don't explore the entire map, but will generate enough "samples" (or nodes) so you can create a good enough path from A to B, going through the generated nodes. Some algorithms like Probabilistic Roadmap (PRM) and Rapidly Exploring Random Tree (RRT) or their evolved versions (like RRT*) are very popular in this field.

- Probabilistic Planners: Any algorithm based on AI or Reinforcement Learning (Q-Learning, Value/Policy Iteration, ...) belongs to this family. These algorithms use experience and neural networks to plan the high-level route.
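To illustrate the first family, here is a small A* sketch on an occupancy grid. The grid, the 4-connected moves, the unit step cost, and the Manhattan heuristic are all assumptions chosen to keep the example short; Hybrid A* and the sampling-based planners need more machinery than fits here.

```python
import heapq

def astar(grid, start, goal):
    """A* on a 4-connected grid; cells equal to 1 are blocked.

    Returns the list of cells from start to goal, or [] if unreachable.
    """
    def heuristic(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_set = [(heuristic(start), 0, start)]
    came_from = {}
    best_cost = {start: 0}
    while open_set:
        _, cost, current = heapq.heappop(open_set)
        if current == goal:
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale queue entry
        row, col = current
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            in_bounds = 0 <= r < len(grid) and 0 <= c < len(grid[0])
            if not in_bounds or grid[r][c] == 1:
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(open_set, (new_cost + heuristic(nxt), new_cost, nxt))
    return []

# A wall blocks the direct way down, so the path has to go around it.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = astar(GRID, (0, 0), (2, 0))
print(path)
```

Compared with Dijkstra, the only real difference is the heuristic term added to the priority, which pulls the search toward the goal.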

In a post on Tesla's End-To-End algorithm, I shared the "Monte-Carlo" method they're using in the planner of FSD11. Intuitively, where would you fit it? Well, because it creates a "tree", we could fit it in #2 on Sample-Based approaches. But it's worth noting it also uses a Neural Network, and could as well fall in category #3.

If you want a cool visualization, there's also this GitHub repo that has implemented many of the algorithms so we can visualize them.

So, we now know how to build a map, and then plan a route on it to drive from A to B. Now let's see low-level planning.

Low-Level Planning

High-level planning tells us that we should drive from node 238 to 255 passing through 239, 240, etc... Low-Level planning tells us how we'll do it. There are several ways to do it, some of them are explained in my post on Robot Path Planning (which is mostly about Low-Level Planning).

Level 1: GPS Driving 📍

Theoretically, if you have an accurate GPS position, and a good object detection system, you could drive in the map with the GPS alone. You don't even need to detect the STOP signs etc... because they're all manually annotated in the map.

Your GPS and map say that if you're at node 238, you should drive at 30 km/h, and that at node 239, you will have a speed bump and should slow down to 25. Then it knows that from 239 to 288, you will be able to drive at 50 km/h, etc, etc...
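A toy version of this lookup could be written as follows. The `SPEED_AT_NODE` table, the fallback speed, and the `obstacle_ahead` flag are assumptions made for the sketch; the speeds simply mirror the numbers above.

```python
# Hypothetical map annotations: node id -> target speed in km/h.
SPEED_AT_NODE = {238: 30, 239: 25, 240: 50}
DEFAULT_SPEED_KMH = 30  # assumed fallback when a node has no annotation

def target_speed(node_id, obstacle_ahead=False):
    """Return the speed to command at a node, stopping for any obstacle."""
    if obstacle_ahead:
        return 0
    return SPEED_AT_NODE.get(node_id, DEFAULT_SPEED_KMH)

route = [238, 239, 240]
profile = [target_speed(node_id) for node_id in route]
print(profile)  # [30, 25, 50]
```

Notice how little "intelligence" there is: the car only replays what the map says, and stops when something is in the way.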

You will still need a few elements, such as:

- A lane detection algorithm. Even though you use the GPS, you still want to drive in the middle of the lane, and a lane detection algorithm is going to help you steer the vehicle left and right to stay centered in your lane. You can learn about some anti-OpenCV lane detection approaches through this post.

- An obstacle detection algorithm, or at least, a LiDAR obstacle avoidance system. This is mostly needed for obstacles, or for anything dynamic like traffic lights (unlike stop signs).

This won't get you very far, since it's the 'Level 1' of autonomous driving, but it's very possible to implement a last-mile delivery robotaxi or shuttle with these alone. However, if you ever go in a tunnel, or if you don't have access to a GPS with RTK correction (meaning, it'll be super imprecise), it will all fail.

So let's see Level 2, which involves moving from GPS to trajectory planning.

Level 2: Cost Functions 📉

The problem with GPS driving is, it heavily relies on GPS, and it's a bit dumb. If you're stuck in front of a parked vehicle, it's game over. So, at this point, you'll start by adding an "overtaking" function, that will help you generate a path to overtake stopped vehicles, but it will become increasingly complicated with the number of "exceptions".

This is when you'll switch from basic GPS following to cost functions. This is done in 3 steps:

- Path Prediction: It will predict the path of every single vehicle your perception system detects. This is pure motion forecasting. It can be done with techniques like Kalman Filters, or with Deep Learning algorithms. Companies like Waymo release several papers per year on this.

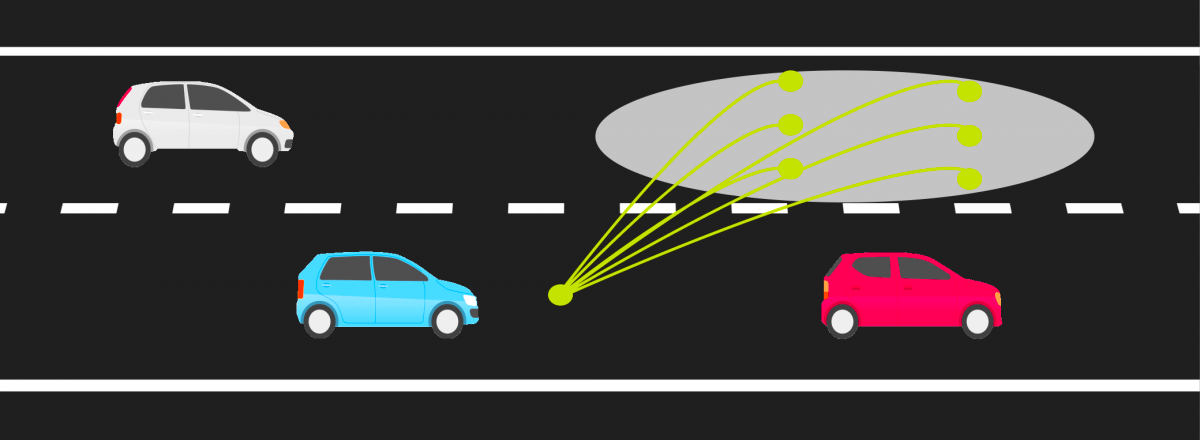

- Trajectory Generation: From where you are, you'll want to generate a set of possible trajectories to go to. For example, if you want to overtake a vehicle, there are many "rules" you need to follow, such as yielding to existing vehicles on the adjacent lane, deciding on the speed to adopt, etc... You'll generate a trajectory per option (and yes, there can be dozens).

- Decision Making: At this step, each trajectory will have a "cost" associated with it, and your job will be to pick the lowest cost trajectory. This cost will be a weighted function of criteria such as comfort, collision probability, manual intervention likelihood, speed (it's best to drive at maximum speed when you can), etc...

For example, this is what the overtaking situation would look like on a slow vehicle. This schematic actually lacks a critical option to stay in its own lane (which is probably favored here), but this at least illustrates the point:
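The decision-making step can be sketched as a weighted sum followed by a `min`. Everything numeric here is invented: the weights, the candidate names, and the per-candidate values are placeholders, and a real planner would compute them from the predicted scene rather than hardcode them.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    discomfort: float             # 0 (smooth) to 1 (harsh)
    collision_probability: float  # 0 to 1
    speed_kmh: float

# Assumed weights: collisions dominate, comfort and speed are tie-breakers.
WEIGHTS = {"discomfort": 1.0, "collision": 100.0, "slowness": 0.5}
SPEED_LIMIT_KMH = 50.0

def cost(candidate):
    """Weighted sum of the criteria; lower is better."""
    slowness = (SPEED_LIMIT_KMH - candidate.speed_kmh) / SPEED_LIMIT_KMH
    return (WEIGHTS["discomfort"] * candidate.discomfort
            + WEIGHTS["collision"] * candidate.collision_probability
            + WEIGHTS["slowness"] * slowness)

candidates = [
    Candidate("stay_in_lane", 0.1, 0.001, 20.0),
    Candidate("overtake_now", 0.6, 0.05, 50.0),
    Candidate("overtake_after_gap", 0.3, 0.005, 45.0),
]
best = min(candidates, key=cost)
print(best.name)  # stay_in_lane
```

With these particular weights, staying in the lane wins even though it is the slowest option. Tuning the weights is where most of the engineering effort goes, since they encode what "good driving" means.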

Why doesn't our motion planner have a million trajectories then? Because on top of our cost functions, we can already eliminate a large number of trajectories that will for sure have maximum cost. For example, we want collision-free motion, so no trajectory that passes through or too close to another vehicle will be tolerated. We also want to respect the laws of physics: we want a technically feasible path (we can't turn the wheels 90°, backward, etc...) in a defined configuration space.

So we have these static constraints, and we also have dynamic constraints, like the changing maximum speed, a dynamic environment with moving objects, traffic light changes, etc... This is why, from a million motion planning strategies, you can actually reduce that to 3 or 4 actual paths.

From there, each trajectory has a cost associated with it, and the role of your motion planning algorithm is to pick the lowest cost trajectory. Once this is done, you pass that information (a continuous path) to the Control module, that will use it to move the car.
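Here is a minimal sketch of that pruning step, assuming a constant-velocity prediction for the other vehicle. The steering limit, the safety radius, and the trajectory format (samples of time, x, y, steering angle) are assumptions for illustration, not values from a real vehicle.

```python
import math

MAX_STEER_DEG = 35.0   # assumed physical steering limit
SAFETY_RADIUS_M = 2.0  # assumed minimum gap to another vehicle

def predict(position, velocity, t):
    """Constant-velocity prediction of an obstacle position after t seconds."""
    return (position[0] + velocity[0] * t, position[1] + velocity[1] * t)

def is_feasible(trajectory, obstacle_pos, obstacle_vel):
    """Check samples of (t, x, y, steer_deg) against the hard constraints."""
    for t, x, y, steer_deg in trajectory:
        if abs(steer_deg) > MAX_STEER_DEG:
            return False  # violates the vehicle's physical limits
        if math.dist((x, y), predict(obstacle_pos, obstacle_vel, t)) < SAFETY_RADIUS_M:
            return False  # too close to the predicted obstacle position
    return True

# A slow vehicle 20 m ahead, moving at 5 m/s along x.
obstacle_pos, obstacle_vel = (20.0, 0.0), (5.0, 0.0)
candidates = {
    "keep_speed": [(0, 0, 0, 0), (1, 10, 0, 0), (2, 20, 0, 0), (3, 30, 0, 0), (4, 40, 0, 0)],
    "overtake": [(0, 0, 0, 0), (1, 10, 1.5, 5), (2, 20, 3.5, 5), (3, 30, 3.5, 0), (4, 40, 3.5, 0)],
    "sharp_turn": [(0, 0, 0, 0), (1, 5, 5, 90)],
}
kept = [name for name, traj in candidates.items()
        if is_feasible(traj, obstacle_pos, obstacle_vel)]
print(kept)  # ['overtake']
```

Only the trajectories that survive this filter need to be scored, which is what keeps the number of options small.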

Yet, this isn't the most advanced we can do...

Level 3: End-To-End & Renegade Approaches ⛔️

Most startups begin with the GPS scenario, and quickly evolve to the Cost Functions scenario because of a client need, or a desire to handle more complex tasks (roundabout, tunnel, etc...), or to drive in more regions.

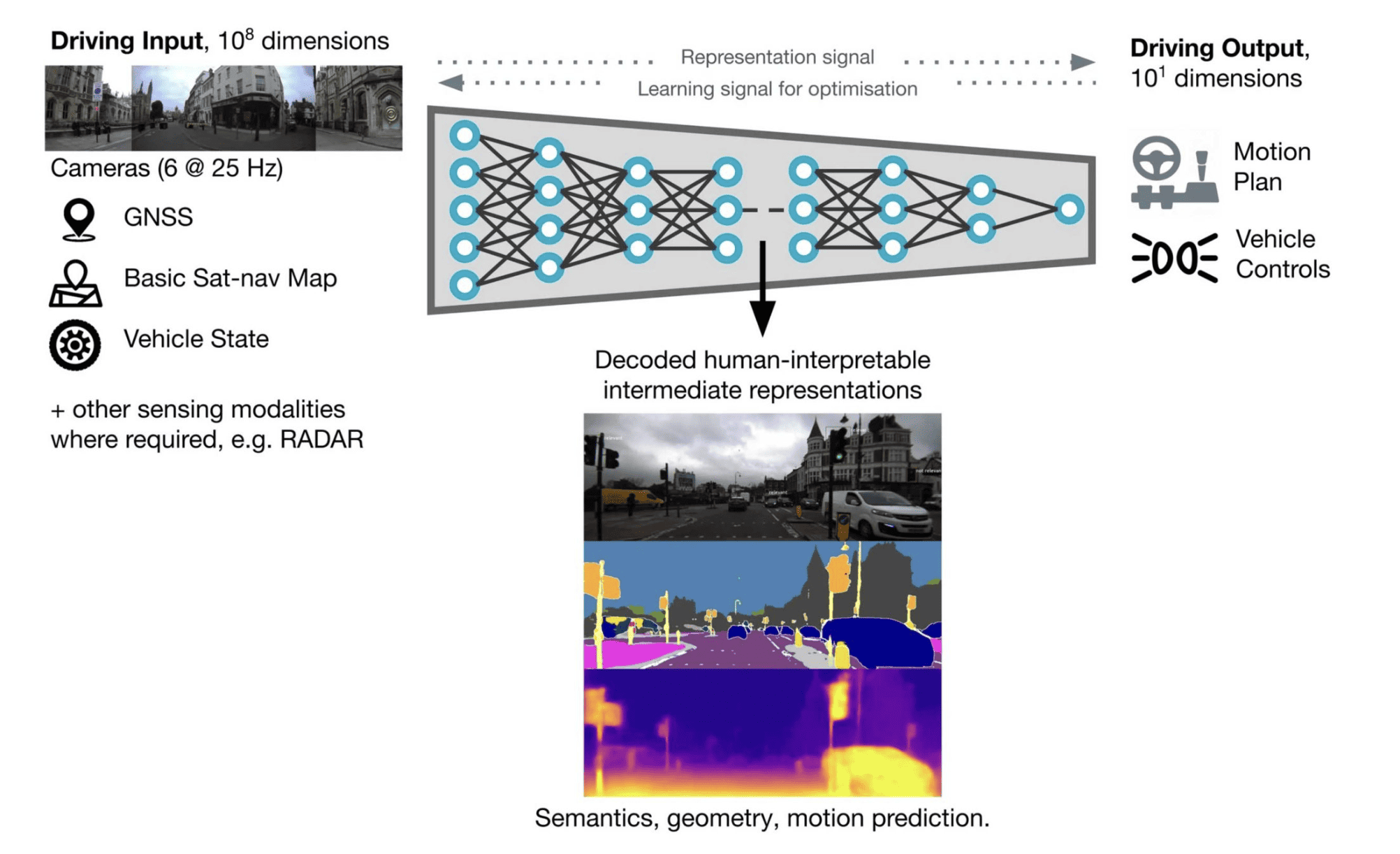

And then there are the renegades, who either implement a hardcore version of the cost functions (like Tesla does, explanation here), or use approaches like Reinforcement Learning or End-To-End Learning (like Wayve), and this changes the entire thing.

In these approaches, you don't handcode anything. There is still the original map and the high-level stuff, but the low-level is done purely using AI. This means that you don't tell the car "you should drive at maximum speed", you let it figure it out on its own based on what others did.

One example is Wayve's "MILE" (Model-based Imitation Learning) algorithm, which takes all the data as input, including the GPS info, and outputs a driving decision directly:

When talking about Renegade Approaches, what I'm talking about is actually End-To-End Learning, and there are 2 types of these:

- Imitation Learning: Learning from demonstrations via Behavioral Cloning or Inverse Optimal Control.

- Reinforcement Learning: Learning from experience via Q-Learning, Value/Policy Iteration, or other RL approaches.
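To give a feel for Behavioral Cloning, here is a deliberately tiny sketch: a one-parameter linear policy fitted by least squares on made-up demonstrations of (lateral offset, steering) pairs. Real systems such as MILE use deep networks on camera data; this toy only shows the principle of regressing the expert's action from the observed state, and all names and numbers are invented.

```python
def fit_linear_policy(demonstrations):
    """Least-squares fit of steering = gain * offset, from (offset, steering) pairs."""
    numerator = sum(offset * steering for offset, steering in demonstrations)
    denominator = sum(offset * offset for offset, _ in demonstrations)
    return numerator / denominator

# Made-up expert data: the driver steers back toward the lane center.
demos = [(-1.0, 0.5), (-0.5, 0.25), (0.5, -0.25), (1.0, -0.5)]
gain = fit_linear_policy(demos)

def policy(offset):
    """Cloned policy: predict the steering the expert would have applied."""
    return gain * offset

print(gain, policy(0.2))
```

Nobody wrote a rule saying "steer toward the center"; the behavior comes entirely from the demonstrations, which is the core idea behind these approaches.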

I'll likely detail these in a separate post about Imitation Learning & Reinforcement Learning. If you're already reading my private emails, you already have access to what I'm going to write about.

So we've seen high-level and low-level, now let's see how to use these together...

Edge Cases

In this last point, I want to highlight the difficulties in making these work. Indeed, any of these high-level and low-level strategies will have difficulties to overcome. We often call these edge-cases.

There are hundreds of edge cases and opportunities for failure. And these can come from anywhere, whether it's Perception (phantom obstacles, misclassifications, etc...), or a wrong Localization (GPS off, clouds, etc...). It's important to understand what your limitations are if you're using a given type of planning.

For example, if you're using the Cost Function Approaches, you may need to deal with edge cases one after the other. One example comes from Waymo in 2019, when they talked about solving the reflection problem.

Consider that people could be holding stop signs, that roads can be blocked, or that humans could do the traffic management instead of signs, and all of this needs to be handled. It's very difficult to estimate the "source" of each edge case, so this means that you'd need to deal with them one-by-one.

On the other hand, the newer approaches from Tesla and Wayve (End-To-End) will try to solve it with data. If you face a situation you've never seen before, you'll try to match it to the closest element in the dataset (a bit like humans do when they drive abroad).

Alright, so this sums up this little 'note' on edge-cases. We could say much more about this, but I'll probably dedicate an entire article to it.

Let's summarise:

Summary & Next Steps

- A self-driving car is built around 4 Pillars: Perception, Localization, Planning, and Control.

- Planning is divided into high-level and low-level motion planning. High-Level Planning is the task of planning a route from A to B, and Low-Level Planning is more like planning each sub-trajectory within that route (which speed to use, how to overtake obstacles, ...).

- High-Level Motion Planning is about 2 elements: The HD Map and the algorithms. The HD Map can be done once, or continuously be updated by a fleet.

- The High-Level Planners are of 3 types: Discrete (Dijkstra, A*, ...), Sample-Based (RRT*, PRM, ...), and Probabilistic (Reinforcement Learning, ...).

- Low Level Planning has 3 levels of difficulty: GPS (1), Cost Functions (2), and the Renegade End-To-End (3). GPS is about following a GPS line and stopping at objects. Cost Functions is about generating trajectories and taking the lowest cost one, and End-To-End is about imitating a dataset of previous drivers.

- The simpler your problem, the simpler your level of low-level planning. If you drive on a delimited route with few options, you may do really well with GPS Driving. In most cases, you may do ok with Cost Functions. In complex and ever-changing environments, you may want to research End-To-End.

The task of implementing the brain of a car can be very difficult. This post covers the two types of approaches, strategist and tactician. It's interesting to realize that each of us is often either a strategist (more thinker, planner, see high-level, vision based) or a tactician (very implementation based, want to do more tasks, etc...). The magic happens when you combine the two together.
