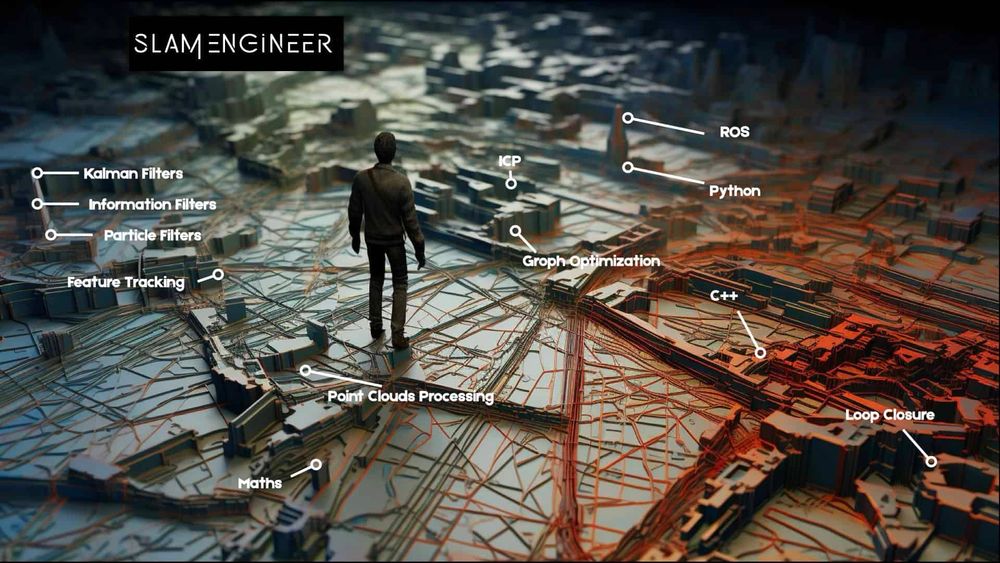

SLAM Roadmap: Which skills are needed to become a SLAM Engineer?

It was a rainy Wednesday night when I arrived right on time for my weekly "Self-Driving Car meetup" in Paris. I was one of the participants, and this week, I was excited to meet "Navya", a leader in autonomous shuttles in France back in 2018. Their Director of Perception was on point, interesting, and explained many of the algorithms they were using in their autonomous shuttles...

Yet, one of the algorithms was NOT part of the explanations, and I had to ask him in front of the entire crowd. "What are you doing for localization?!" I asked nervously. To which he replied: "Oh, we SLAM everything!"

He then showed us an army of SLAM Engineers sitting in the audience.

In most autonomous driving projects, the #1 job people target is Computer Vision Engineer. Yet many don't realize that other parts of autonomous driving, like LiDAR Perception or SLAM, can be an amazing opportunity for them.

First, these roles are rarer and less sought after. This means that while it may be harder to join a specific company, it will be much easier to keep your job. Second, positions like this are often closely related to Computer Vision & Perception, especially these days when everything is mixed up.

In this post, I'd like to talk about being a SLAM Engineer, discussing what they do, and what's needed to get there. We'll try to answer a few questions, such as:

- What is SLAM in robotics? And what companies work on SLAM?

- Which sensors and algorithms are involved?

- What are the skills and technical expertise needed?

What is SLAM in robotics? And what companies work on SLAM?

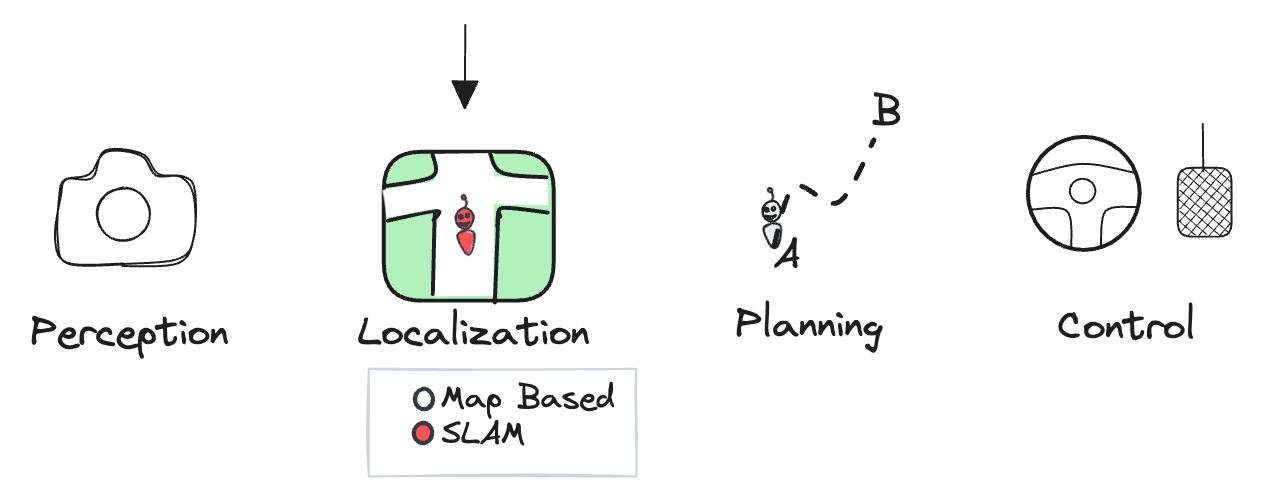

SLAM stands for Simultaneous Localization And Mapping. It involves two components: localization and mapping. But these can have different meanings depending on the company you're working at.

Let's go back to the example of the autonomous shuttle. In any autonomous robot (car, shuttle, drone, etc.), SLAM fits in the localization module, and the goal is to locate yourself in a map you also create. In this case, you'll work on the team that uses the sensors (camera, GPS, LiDAR, ...) to build a map and continuously localize the robot inside it.

As you can see, we have two ways to localize ourselves:

- With a Map

- Without a map (SLAM) (this article!)

Not all companies using SLAM are robotics-based. You can see SLAM Engineers working at Apple, Meta, and tons of Computer Vision startups. This is because sometimes SLAM is used to drive in an environment, but sometimes it's simply used to create that environment in 3D, for example in AR, VR, and other 3D scenarios.

We therefore have two types of companies working on SLAM:

- Autonomous Robot companies: Your job will be to use SLAM to feed the planning algorithms

- Non Autonomous Robot companies: Your job will be to use SLAM for purposes like AR/VR/3D Reconstruction, etc...

Which sensors and algorithms are involved?

Now that we have a good idea of what SLAM is, and what your role is as a SLAM Engineer, we need to zoom into "how" you'll fill this role. Since your job is to both create a map and localize yourself in it, it involves several sub-techniques, such as:

- Creating a 2D or 3D environment (map) using sensors

- Defining your position in a map

- Keeping the map up to date, and the position precise

Creating a 2D or 3D environment using sensors

There are mainly two types of sensors used in SLAM: LiDARs and Cameras. Let's begin with LiDARs.

SLAM with LiDARs

A LiDAR builds a point cloud of the world. In a way, this "point cloud" can already be your map. Whenever you detect a wall, you could add the points related to the wall into some sort of map. So every point of the cloud is part of the map, and the more you drive, the bigger the map.

On the other hand, a camera needs to go through an intermediate process. Your data is 2D based (pixels), and you want to convert that to the 3D world. You also want keypoints that play the same role as the points of a point cloud. This is a field called Visual SLAM, and it's usually done using Computer Vision features, such as ORB, BRISK, AKAZE, SURF, ...
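To make the "every point is part of the map" idea concrete, here is a minimal 2D sketch. It assumes the sensor pose is already known for each scan (in real SLAM, that pose is exactly what you have to estimate), and the function name `scan_to_world` and the toy scans are made up for illustration.

```python
import numpy as np

def scan_to_world(scan_xy, pose):
    """Move an (N, 2) scan from the sensor frame into the world frame.

    pose = (x, y, theta) is an assumed, already-known 2D sensor pose.
    """
    x, y, theta = pose
    c, s = np.cos(theta), np.sin(theta)
    R = np.array([[c, -s], [s, c]])
    # Rotate every point, then shift it by the sensor position.
    return scan_xy @ R.T + np.array([x, y])

# Toy data: two scans taken from two different poses.
scans = [
    (np.array([[1.0, 0.0], [1.0, 1.0]]), (0.0, 0.0, 0.0)),
    (np.array([[1.0, 0.0]]), (2.0, 0.0, np.pi / 2)),
]

world_map = np.empty((0, 2))
for scan, pose in scans:
    # The map simply grows with every new scan.
    world_map = np.vstack([world_map, scan_to_world(scan, pose)])
```

Real systems rarely keep every raw point like this; they usually downsample or keep only keypoints, but the principle is the same.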

Your features are similar to the point clouds from the LiDAR.
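As a rough illustration of going from a 2D pixel to a 3D point, here is a pinhole back-projection sketch. It assumes you already know the depth of the keypoint (for example from a stereo pair or from triangulation), and the intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical values, not taken from any real camera.

```python
import numpy as np

def backproject(u, v, depth, fx, fy, cx, cy):
    """Turn a pixel (u, v) with known depth into a 3D point in the camera frame."""
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    return np.array([x, y, depth])

# Hypothetical intrinsics for a 640x480 camera.
fx, fy, cx, cy = 500.0, 500.0, 320.0, 240.0

center_point = backproject(320, 240, 2.0, fx, fy, cx, cy)  # pixel at the image center
side_point = backproject(420, 240, 2.0, fx, fy, cx, cy)    # 100 pixels to the right
```

Applied to every tracked keypoint, this gives a sparse set of 3D points that can be handled much like a LiDAR point cloud.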

Other more advanced techniques leverage Neural Radiance Fields and 3D Reconstruction to convert each pixel into a point cloud and therefore drive in a dense environment (as opposed to sparse features). In this case, it's not just a few features that form a map, it's every pixel.

Defining your position in a map

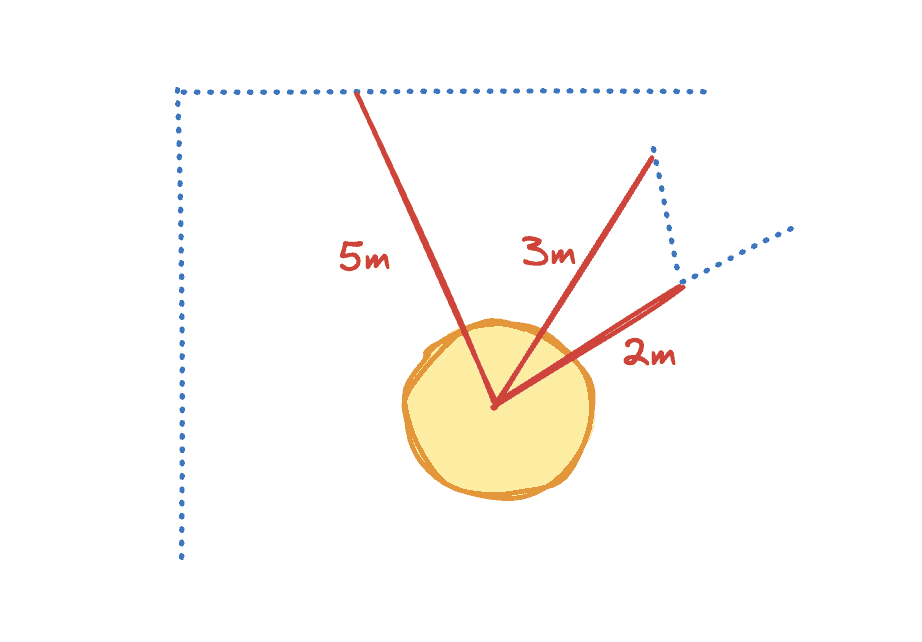

The first step was "mapping", and our second step is localization. In this step, we want to define our relative position to each point in the map. How far are we from this wall in front of us, and from this fence on the side?

The role of localization is to look at all the landmarks we have, and estimate a position that will be true for all landmarks. So if you estimate that you are 5 meters in front of a wall, and at the same time 2 meters in front of another object, your only possible position is at X.
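Here is a small sketch of that idea: given the distances to a few known landmarks, find the one position that agrees with all of them. It uses a plain Gauss-Newton least-squares loop, which is one common way to do it and not necessarily what any given SLAM system does. The landmark coordinates and the function name `locate` are invented for the example.

```python
import numpy as np

def locate(landmarks, ranges, guess, iters=20):
    """Estimate a 2D position from measured distances to known landmarks."""
    p = np.asarray(guess, dtype=float)
    for _ in range(iters):
        diff = p - landmarks                   # vectors from landmarks to the guess
        pred = np.linalg.norm(diff, axis=1)    # distances predicted by the guess
        J = diff / pred[:, None]               # Jacobian of each distance w.r.t. p
        residual = ranges - pred               # measured minus predicted
        step, *_ = np.linalg.lstsq(J, residual, rcond=None)
        p = p + step
    return p

# Toy scene: three landmarks, and noise-free ranges measured from the origin.
landmarks = np.array([[0.0, 5.0], [2.0, 0.0], [-3.0, -1.0]])
true_pos = np.array([0.0, 0.0])
ranges = np.linalg.norm(true_pos - landmarks, axis=1)

estimate = locate(landmarks, ranges, guess=[1.0, 1.0])
```

With noisy measurements no position satisfies every range exactly, and the same loop returns the best compromise instead.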

This step is most of the time solved with 4 types of algorithms:

- Kalman Filters

- Particle Filters

- Information Filters

- Graph Optimization

This is how you have algorithms like EKF-SLAM, FastSLAM, GraphSLAM, etc. It's also important to note that there are several sub-approaches here. For example, FastSLAM uses a Particle Filter to estimate the trajectory of the robot, with each particle carrying its own small Kalman Filters for the landmarks.
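To give a feel for the filtering family, here is a one-dimensional Kalman Filter step: predict with the motion, then correct with a measurement. This is a deliberately tiny sketch (a scalar position, hand-picked noise values `q` and `r`), far from a full EKF-SLAM.

```python
def kalman_step(x, p, u, z, q=0.1, r=0.5):
    """One predict/update cycle of a 1D Kalman Filter.

    x, p: previous position estimate and its variance
    u:    commanded motion since the last step
    z:    new position measurement
    q, r: assumed motion and measurement noise variances
    """
    # Predict: move the estimate and grow the uncertainty.
    x_pred = x + u
    p_pred = p + q
    # Update: blend prediction and measurement using the Kalman gain.
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (z - x_pred)
    p_new = (1 - k) * p_pred
    return x_new, p_new

x, p = kalman_step(x=0.0, p=1.0, u=1.0, z=1.2)
```

The estimate lands between the prediction (1.0) and the measurement (1.2), and the variance shrinks after the update.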

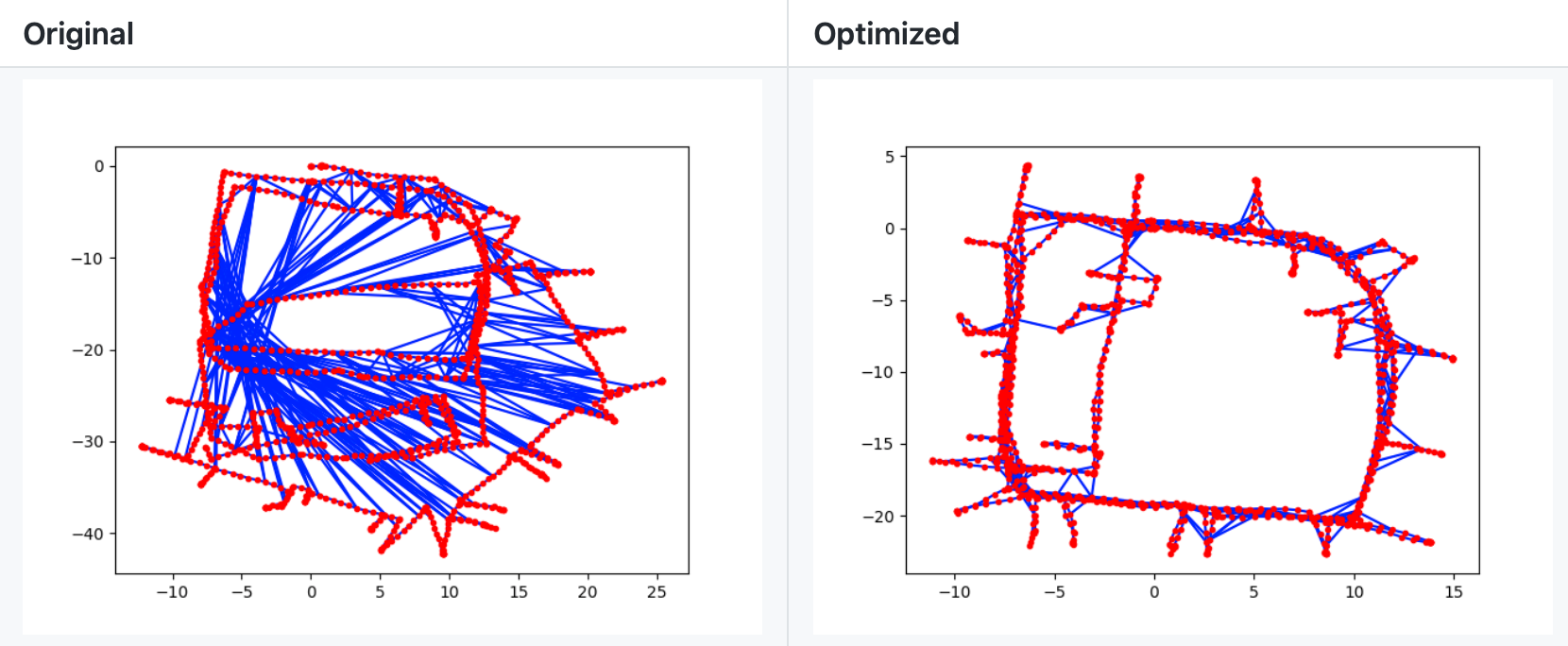

Keeping the map up to date

The final step is about continuously feeding and updating the map without introducing errors. Your position estimate may become less and less precise over time, and your map measurements as well. This is especially visible when you visit the same place twice: the point clouds from the two visits may not line up, and end up superimposed with an offset.

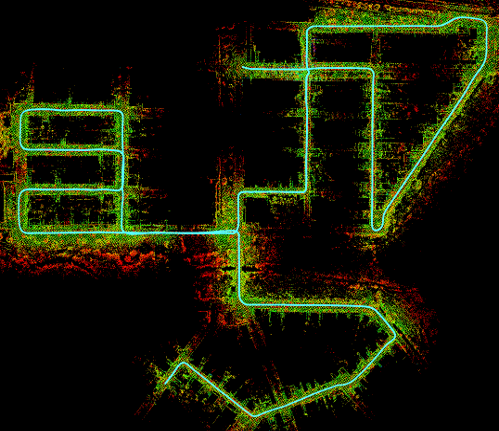

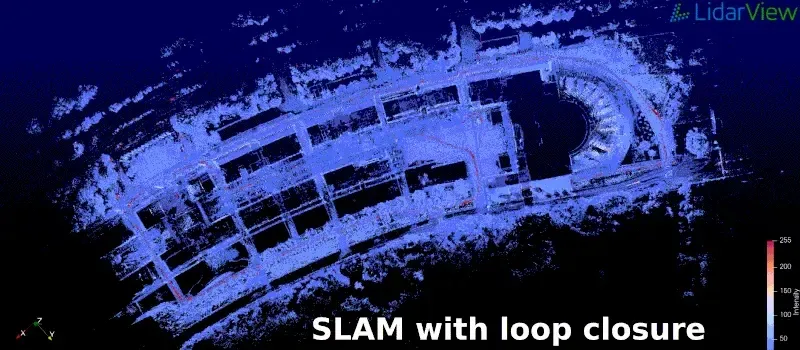

This is a problem known as Loop Closure, and I highly recommend reading my article on Loop Closure, and my other one on Point Cloud Registration, to "get" that part.
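A toy way to see what loop closure buys you: below is a 1D pose graph where the robot drives out and comes back. Odometry alone says it ended 0.2 m away from the start, while a loop closure constraint says it is back at the start. Solving all constraints together with least squares spreads the error over the whole trajectory. The numbers are invented, and real pose graphs work on 2D/3D poses with weighted constraints.

```python
import numpy as np

# Each constraint: (i, j, measured value of x_j - x_i).
constraints = [
    (0, 1, 1.1),   # odometry
    (1, 2, 1.0),   # odometry
    (2, 3, -1.9),  # odometry (drifted: the robot actually came all the way back)
    (0, 3, 0.0),   # loop closure: pose 3 is recognized as the starting place
]
n_poses = 4

A = np.zeros((len(constraints) + 1, n_poses))
b = np.zeros(len(constraints) + 1)
for row, (i, j, delta) in enumerate(constraints):
    A[row, i] = -1.0
    A[row, j] = 1.0
    b[row] = delta
A[-1, 0] = 1.0  # anchor the first pose at 0 so the problem has a unique solution

poses, *_ = np.linalg.lstsq(A, b, rcond=None)
odometry_only = np.cumsum([0.0, 1.1, 1.0, -1.9])
```

Here `odometry_only` ends at 0.2, while the optimized `poses` end much closer to the start, with every intermediate pose slightly adjusted too.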

Another example in action:

So this is one other important task in SLAM. Next, let's see what type of skills you need to build.

What are the skills and background needed?

What would be the best way to understand the skills you need to get one of these SLAM Engineer Jobs? Probably to look at job offers!

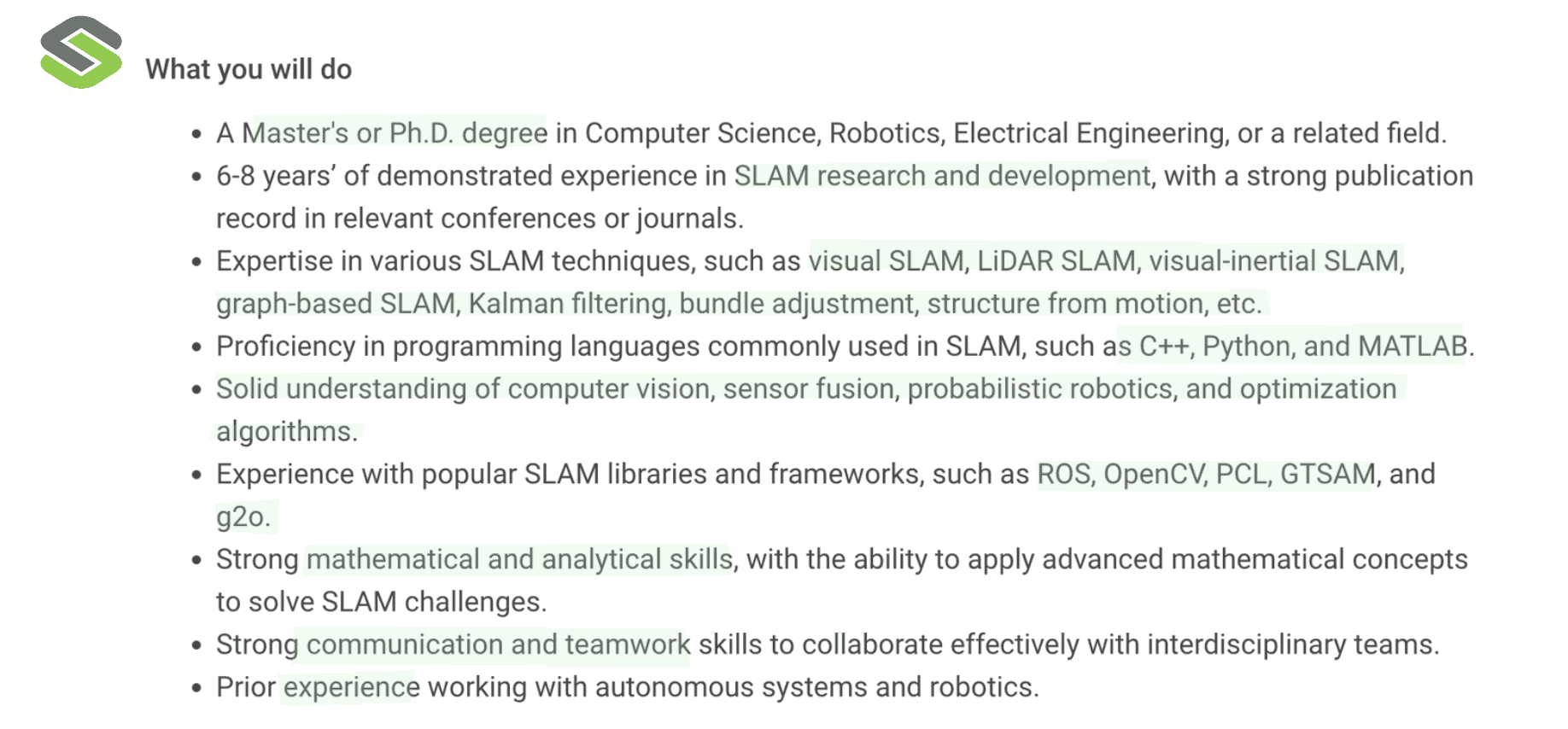

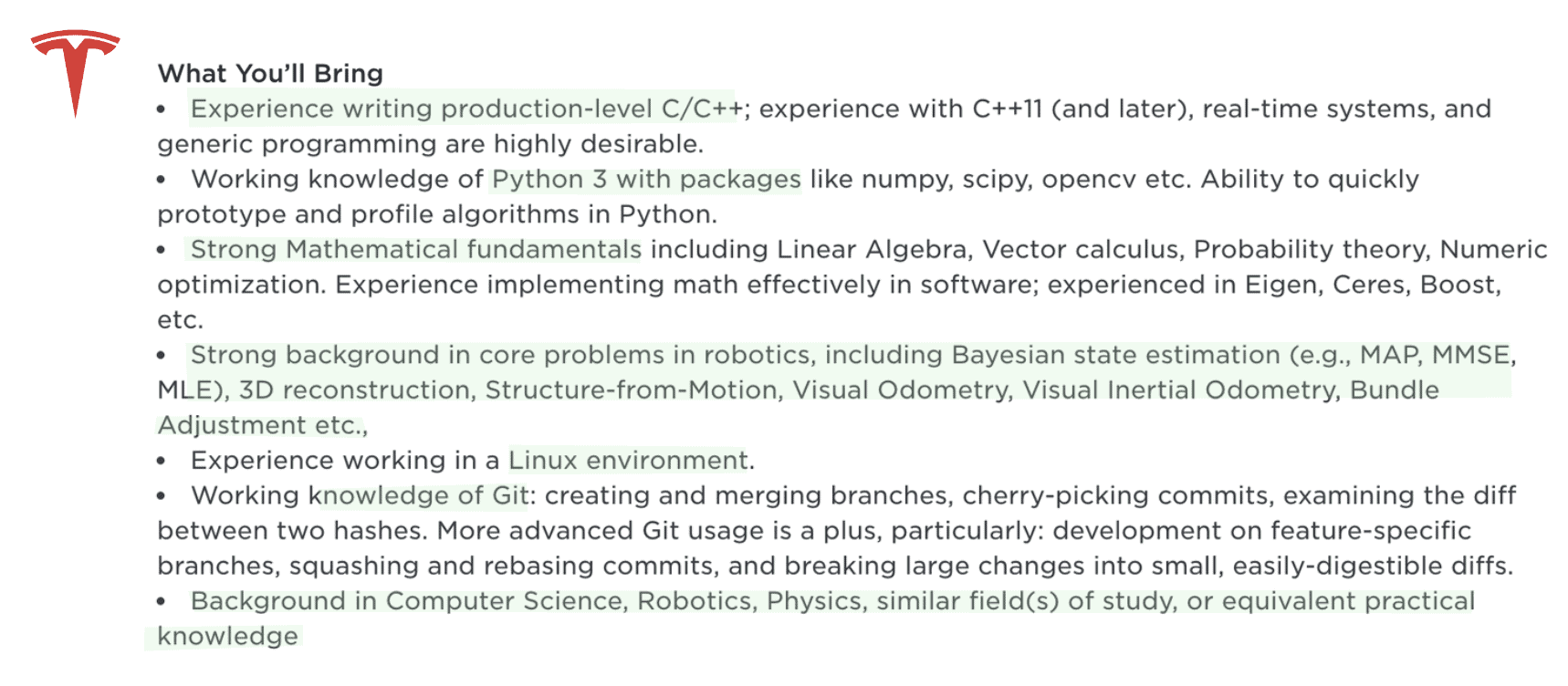

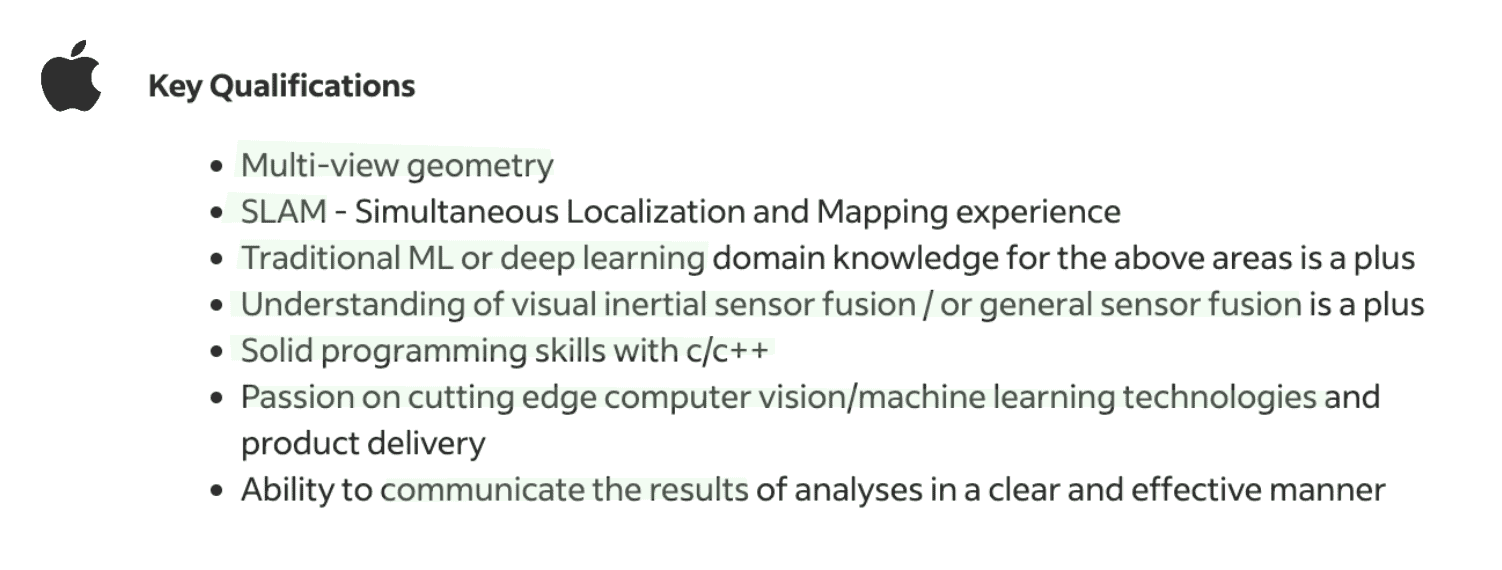

I selected 3 job offers mentioning SLAM Engineer in the title. One is from a company called FoxRobotics, one is from Tesla, and the last one is from a warehouse management company called Symbotic.

What you'll do

As you can see in the "What" part, we have several responsibilities, but they're not exactly trivial to understand. What does it mean to improve the algorithms? Or to stay up-to-date with approaches? The only way to truly understand it is to picture the key role of SLAM at the company you'll work at.

For example, if you choose to work with Symbotic, you'll work on autonomous robots inside warehouses like those of Walmart and Amazon.

Now, let's see what you need to do:

Your requirements

Let's see the rest of the job offers, starting with Symbotic, Tesla, and Apple:

As you can see, we have several redundant elements, such as:

- The languages: C++ and Python

- The background: Master's Degree (in Computer Science, or specialized in AI or Robotics), Strong mathematical fundamentals

- The fields: Computer Vision and Sensor Fusion skills, background with autonomous robots, Machine Learning, eSLAM, LiDARs, ...

- The hard skills: 3D Reconstruction (Structure From Motion, ...), SLAM Algorithms (EKF-SLAM, Graph SLAM, Bundle Adjustment...), Libraries (OpenCV, ROS, GSLAM, ...), State Estimation (Visual Odometry, MAP, ...)

So this is what you need to know. Now let's see some SLAM Engineers in action.

Example 1: SLAM at Kitware Europe

Kitware is a company known for creating and supporting open-source software in various domains such as scientific computing, medical imaging, computer vision, data and analytics, and quality software process. Among their various offerings, they have one named "LiDARView", a Point Cloud Visualizer that is compatible with SLAM.

So let's see how they do "SLAM" with LiDARView:

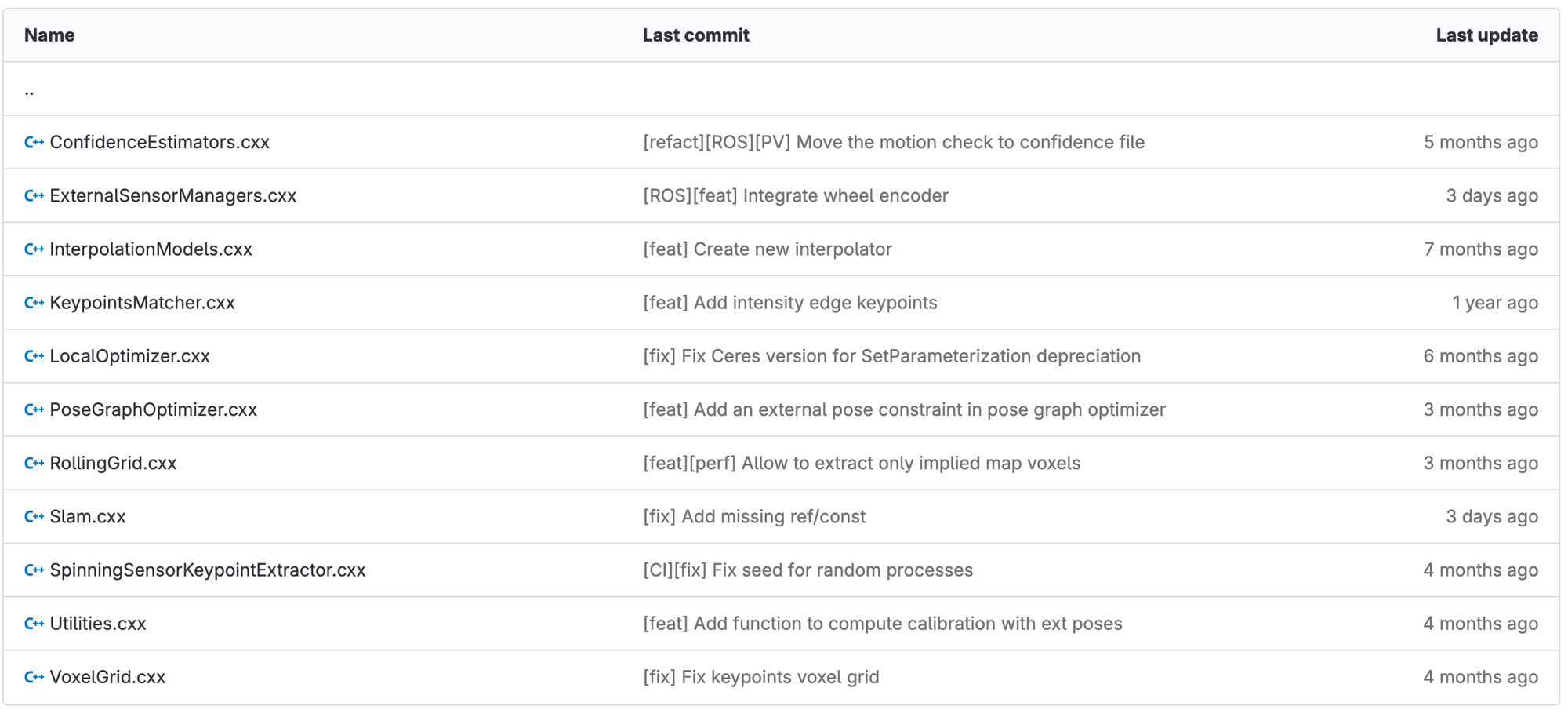

Looking at their Open Source code, you may see a list of files:

This is Part 1 of working as a SLAM Engineer: Building a SLAM Library for your company.

In this case, they're building a SLAM Library written in C++ (cxx = C++), and they're using several components, such as Voxelization, Keypoints Estimation & Matching, April Tag Detection.

Let me briefly describe how their SLAM algorithm works:
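To give an idea of what a component like Voxelization does, here is a minimal voxel-grid downsampling sketch: points falling in the same cube are replaced by their centroid. This is my own illustration in Python, not Kitware's code, and the function name `voxel_downsample` is made up.

```python
import numpy as np

def voxel_downsample(points, voxel_size):
    """Replace all points that share a voxel with their centroid."""
    # Integer voxel index of every point.
    keys = np.floor(points / voxel_size).astype(np.int64)
    # Group points by voxel; `inverse` maps each point to its voxel id.
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)
    n_voxels = inverse.max() + 1
    # Sum the points of each voxel, then divide by the count.
    sums = np.zeros((n_voxels, points.shape[1]))
    np.add.at(sums, inverse, points)
    counts = np.bincount(inverse, minlength=n_voxels)
    return sums / counts[:, None]

cloud = np.array([
    [0.1, 0.1, 0.1],
    [0.3, 0.3, 0.3],   # same 1 m voxel as the first point
    [1.2, 0.1, 0.1],   # a different voxel
])
downsampled = voxel_downsample(cloud, voxel_size=1.0)
```

This keeps the map size under control when the same area is scanned again and again.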

- Keypoint Extraction: They extract keypoints on point clouds (from LiDAR).

- Ego Motion: Using the point cloud at time (t), and the one at time (t-1), the software computes a "shift" from t-1 to t, and tries to recover the motion of the LiDAR (and thus the car), by using a closest point matching function.

- Localization: They add the extracted keypoints into a map, as well as the new position calculated by ego-motion.

They then run this in a loop to update the map, and add elements such as Loop Closure to refine the map on revisits.
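The Ego Motion step can be sketched with a bare-bones ICP (Iterative Closest Point): match each point to its closest neighbor in the other scan, compute the best rigid transform for those matches, and repeat. This is a simplified 2D illustration with brute-force matching, assuming a small motion between scans; it is not the actual LiDARView implementation.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Kabsch: least-squares R, t such that dst ~= src @ R.T + t (rows matched)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection being returned instead of a rotation.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    D = np.diag([1.0] * (src.shape[1] - 1) + [d])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp(prev_scan, curr_scan, iters=10):
    """Estimate the transform that aligns prev_scan onto curr_scan."""
    R_total, t_total = np.eye(2), np.zeros(2)
    moved = prev_scan.copy()
    for _ in range(iters):
        # Closest-point matching (brute force, fine for tiny clouds).
        dists = np.linalg.norm(moved[:, None, :] - curr_scan[None, :, :], axis=2)
        matches = curr_scan[dists.argmin(axis=1)]
        R, t = best_rigid_transform(moved, matches)
        moved = moved @ R.T + t
        # Accumulate the incremental transform.
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total

# Toy scans: the second one is the first one slightly rotated and shifted.
theta = 0.05
R_true = np.array([[np.cos(theta), -np.sin(theta)],
                   [np.sin(theta),  np.cos(theta)]])
t_true = np.array([0.1, -0.05])
prev_scan = np.array([[0.0, 0.0], [2.0, 0.0], [2.0, 1.0], [0.0, 3.0], [-1.0, 1.0]])
curr_scan = prev_scan @ R_true.T + t_true

R_est, t_est = icp(prev_scan, curr_scan)
```

Note that the recovered transform moves the previous scan onto the current one; the motion of the sensor itself is the inverse of that transform.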

Hidden Skills inside

- LiDAR Processing: Most of the work is done by processing point clouds. The two main skills are Point Clouds (as taught in my Point Clouds Conqueror course), and SLAM (as taught in my SLAM course).

- Geometry: There are many rotations and translations being done in the repository, which implies you NEED to have these in your skillset. You can also see spline interpolations for ego motion prediction.

- Maths: SLAM is a complex problem which often involves heavy math skills. It is worth separating it from geometry, as you may have matrix factorizations and other elements involved.

- Graph SLAM Optimization: There are many SLAM algorithms available. Unless I'm mistaken, this algorithm implements "Graph SLAM", and therefore any skill in graph optimization is useful.

- C++ and ROS: Worth mentioning: the code is implemented in C++ and runs on ROS, which implies you'll need to know these two in this case. In many SLAM projects, C++ is the dominant language.

As you can see, we have these 5 main SLAM-related skills, in this specific case.
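To illustrate the Geometry skill, here is what "rotations and translations" typically look like in practice: poses stored as homogeneous matrices that you chain and invert. The frame names (`world`, `robot`, `lidar`) and the numbers are hypothetical.

```python
import numpy as np

def se2(x, y, theta):
    """Build a 3x3 homogeneous transform for a 2D pose."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, x],
                     [s,  c, y],
                     [0.0, 0.0, 1.0]])

# Robot pose in the world, and LiDAR mounting position on the robot.
world_T_robot = se2(2.0, 1.0, np.pi / 2)
robot_T_lidar = se2(0.5, 0.0, 0.0)

# Chaining transforms gives the LiDAR pose in the world.
world_T_lidar = world_T_robot @ robot_T_lidar

# A point seen 1 m in front of the LiDAR, expressed in the world frame.
point_lidar = np.array([1.0, 0.0, 1.0])
point_world = world_T_lidar @ point_lidar

# Inverting a transform goes back the other way.
lidar_T_world = np.linalg.inv(world_T_lidar)
point_back = lidar_T_world @ point_world
```

The 3D version works the same way with 4x4 matrices, which is where most of the real-world bugs (wrong frame, wrong order of multiplication) tend to come from.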

Let's see a different example, with different skills:

Example 2: ORB SLAM v3

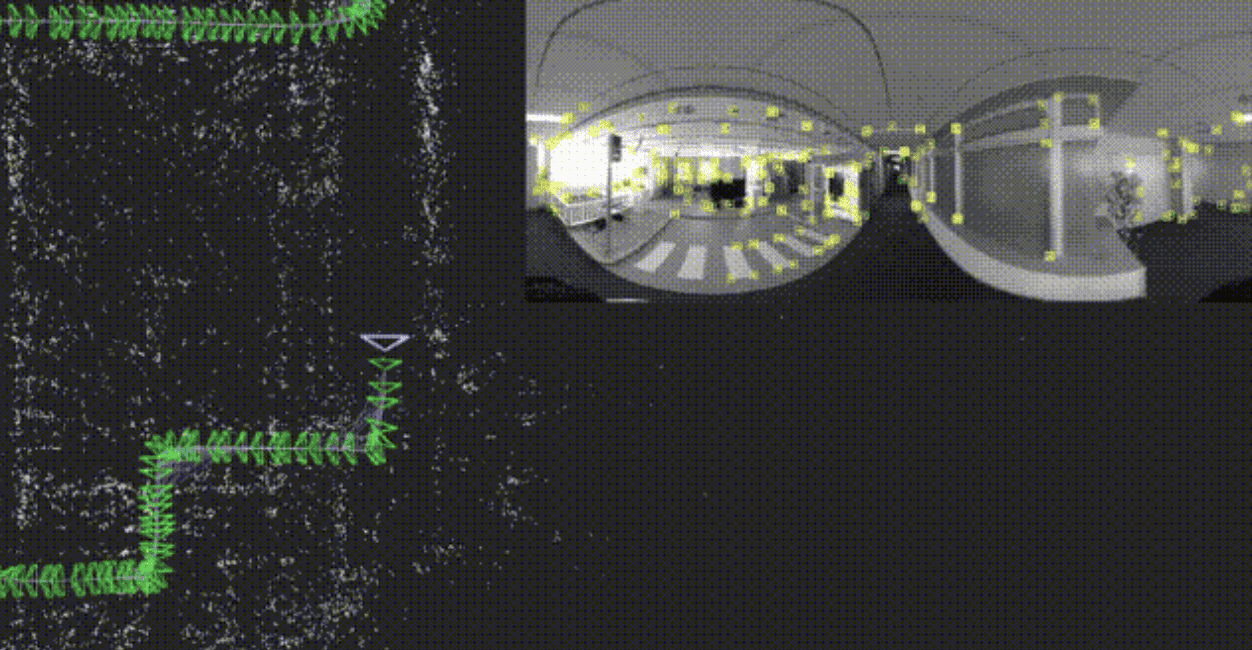

You've seen a graph-based LiDAR application of SLAM. Among LiDAR approaches, you can also use Kalman Filters, or other types of filters. And within SLAM itself, you can also use Visual SLAM. Visual SLAM happens with a camera, so let's see how this works...

For example, ORB-SLAM (Oriented FAST and Rotated BRIEF SLAM) is a SLAM algorithm that works on camera images and does the following:

- Feature Detection & Matching: Find Computer Vision features like corners and edges, and implement an algorithm to detect and track these features. In this case, we're using FAST keypoints and BRIEF descriptors.

- Pose Estimation: Implement a graph SLAM algorithm to estimate the pose of the robot by matching the extracted features from frame to frame.

- Mapping: Position the extracted features in a map.

- Loop Closure: Use loop closure algorithms to remove redundancies with features.

- Optimization: Perform bundle adjustment and optimize the graph globally to update the map

In this case, you may notice a very similar pattern to the previous algorithm (it's a graph SLAM approach again). The main difference is that this time, rather than finding LiDAR keypoints, we're finding camera keypoints. The loop closure & optimization steps are likely done in the Kitware example too.
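The Feature Detection & Matching step relies on binary descriptors, which are compared with the Hamming distance (the number of differing bits). Here is a toy matcher using 8-bit descriptors stored as integers. Real ORB descriptors are 256 bits long and you would normally use a library for this, so take it as a sketch of the principle only; the `max_dist` threshold is an arbitrary choice.

```python
def hamming(a, b):
    """Number of bits that differ between two binary descriptors."""
    return bin(a ^ b).count("1")

def match(desc_a, desc_b, max_dist=2):
    """For each descriptor in desc_a, find its closest one in desc_b."""
    matches = []
    for i, da in enumerate(desc_a):
        j, dist = min(
            ((j, hamming(da, db)) for j, db in enumerate(desc_b)),
            key=lambda m: m[1],
        )
        # Keep only confident matches.
        if dist <= max_dist:
            matches.append((i, j, dist))
    return matches

# Toy descriptors from two consecutive frames.
frame1 = [0b10110010, 0b01001101, 0b11110000]
frame2 = [0b01001111, 0b10110011, 0b00001111]

matches = match(frame1, frame2)
```

The first two features find a partner one bit away, while the third has no close enough candidate and is dropped.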

Additional Hidden Skills in Visual SLAM

- Feature Detection and Matching: Knowledge of ORB (Oriented FAST and Rotated BRIEF) features and other feature descriptors (Harris corners, BRIEF, etc...).

- Camera Models and Calibration: Understanding intrinsic and extrinsic camera parameters and how to calibrate cameras.

- 3D Reconstruction: Many Visual SLAM algorithms use 2-View Reconstruction, especially when working in stereo setups.

- Sensor Fusion: Especially, fusion with sensors like GPS, IMU, Odometers, or others that are often used in Visual SLAM or other approaches (in fact, most SLAM algorithms use these sensors).
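The Camera Models and 3D Reconstruction skills meet in two-view triangulation: given the same feature seen in two calibrated cameras, recover its 3D position. Below is a sketch using the classic linear (DLT) method. The intrinsics `K`, the 0.2 m baseline, and the test point are all hypothetical values.

```python
import numpy as np

def project(P, X):
    """Project a 3D point X to pixel coordinates with a 3x4 camera matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate(P1, P2, uv1, uv2):
    """Linear (DLT) triangulation of one point seen in two views."""
    A = np.array([
        uv1[0] * P1[2] - P1[0],
        uv1[1] * P1[2] - P1[1],
        uv2[0] * P2[2] - P2[0],
        uv2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]

# Hypothetical intrinsics, shared by both cameras.
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
# Camera 1 at the origin; camera 2 shifted 0.2 m to the right (stereo-like setup).
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-0.2], [0.0], [0.0]])])

X_true = np.array([0.3, -0.1, 4.0])
X_est = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
```

With noisy pixel measurements the result is only approximate, which is one reason Visual SLAM pipelines refine it later with bundle adjustment.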

So, as a quick summary, we have...

Isn't it too hard and specific? And can't I just use these?

Is it too hard? Yes, SLAM is one of the hardest topics in the field of Robotics. It's (in my opinion) much harder to understand a SLAM algorithm than it is to understand any Neural Network architecture.

However, SLAM reuses many existing skills you build in Computer Vision and Perception, which makes it an "okay to get" skill if you already have these skills. I would therefore recommend learning these skills for what they are first (3D reconstruction, feature detection, ...), and then learning them in the context of SLAM.

Can't you just use these? The same way you can use object detection algorithms as black boxes, you can use SLAM algorithms as black boxes. For this, you can use existing software, like the ones from Kitware, or clone a GitHub repository. Making it work with your hardware stack may, on the other hand, be much harder than with other types of algorithms.

Some companies will require you to use these as applications. In this case, you may not "need" to master every single algorithm inside, but you'll still eventually get there. Using an algorithm rather than another requires understanding of the algorithm. Therefore, if you:

- Change your LiDAR keypoint estimator

- Use a different type of visual feature descriptor

- Change from online to offline SLAM

- Change from Kalman Filters to Particle Filters

- ...

You'll know why you're doing it, the underlying principles behind these approaches, and how to make them work.

So let's summarize:

Summary & Next Steps

- Being a SLAM Engineer is a good career choice, as far fewer engineers are able to specialize there. It offers a wide range of companies, working on robotics (Robots, Cars, Drones, ...) or not (VR, Computer Vision, Geomapping, ...), and can be a lucrative option.

- There are 2 types of sensors used in SLAM: LiDARs and Cameras. LiDAR-based approaches work by tracking 3D Point Clouds and putting them on a map. Camera-based approaches work similarly, but the points to track are 2D computer vision features (like edges and corners) projected to 3D.

- Analyzing most job offers, we can deduce that working as a SLAM Engineer involves coding, testing approaches, optimizing algorithms, modifying environments, and making things fast and robust.

- We also understand that SLAM requires the following hard skills: C++ and Python, 3D Reconstruction (Structure From Motion), Point Clouds Processing, working with Libraries, Sensor Fusion, and more...

- As a SLAM Engineer, you may be writing SLAM code, or using it. Writing code for SLAM will require you to dive deeper into the specific algorithms used, such as keypoint detection on point clouds, or frame to frame ego motion estimation.

- However, using SLAM algorithms will still require you to build a strong understanding of these algorithms. Working around that understanding is difficult in this field.

- If SLAM Engineers are scarce, it's for a reason. SLAM is a difficult topic, and I recommend learning the core algorithms before you learn SLAM.

If, like me, you like to meet autonomous driving companies and engineers, you may be surprised to see that not everybody works in AI & Deep Learning. There are engineers working on connectivity, planning, control command, and yes, SLAM and Localization. These engineers have specialities, they have an "edge" where many AI engineers look alike.

But specializing in these topics means closing the door to AI and Computer Vision. Unless you work on SLAM. Because SLAM relies so much on Perception, it's the perfect tradeoff: you build a scarce profile while still working extensively on AI and Perception.