Why I believe Tesla still secretly uses CNNs in FSD12 (and not just Transformers)

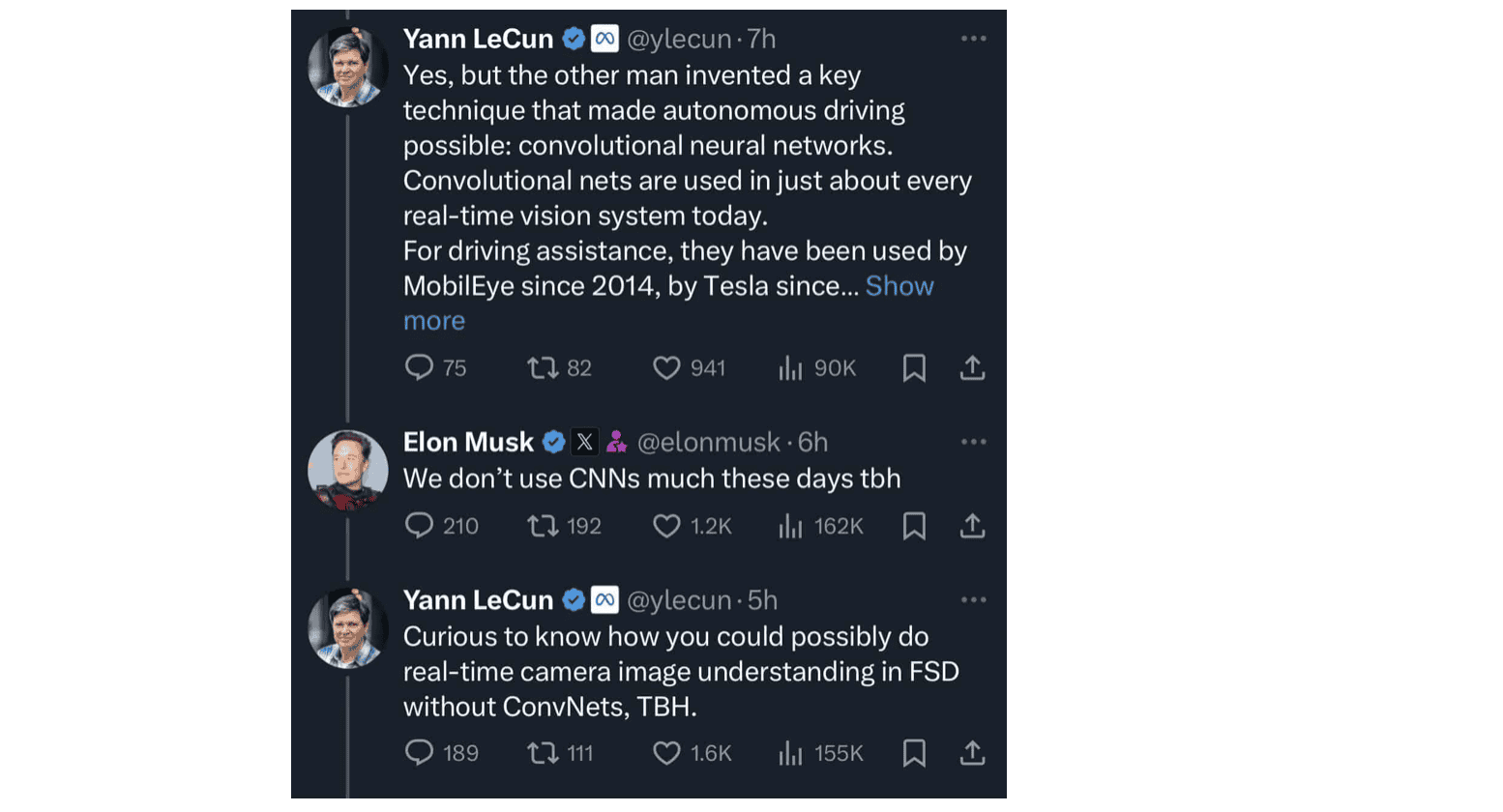

Last night, my wife and I were having dinner at a friend's place when suddenly, out of the blue, one of them said:

"Hey! About your email from yesterday..."

"My email?" I asked, confused. "You're reading these?" (I meant my daily emails.)

"Yes! I'm 100% with Yann LeCun. I know you're on Elon Musk's team, admit it."

Oh, I was hoping he wouldn't make me pick. But if I have to... YES! I'M WITH ELON MUSK! MWAHAHAHAHA!

Except today!

Well, I don't know about their current feud, but one thing particularly interested me in the CNN/Transformer fight.

Let me give you some context:

Now this interests me.

Why would "we don't use CNNs much these days" be true? Is it really all Transformers? And are all these people on Twitter saying it's just Transformers complete idiots?

In this article, I am going to tell you who I think is right, and more importantly, I'm going to explain why it's Yann LeCun, and why Tesla will still use CNNs...

Ready?

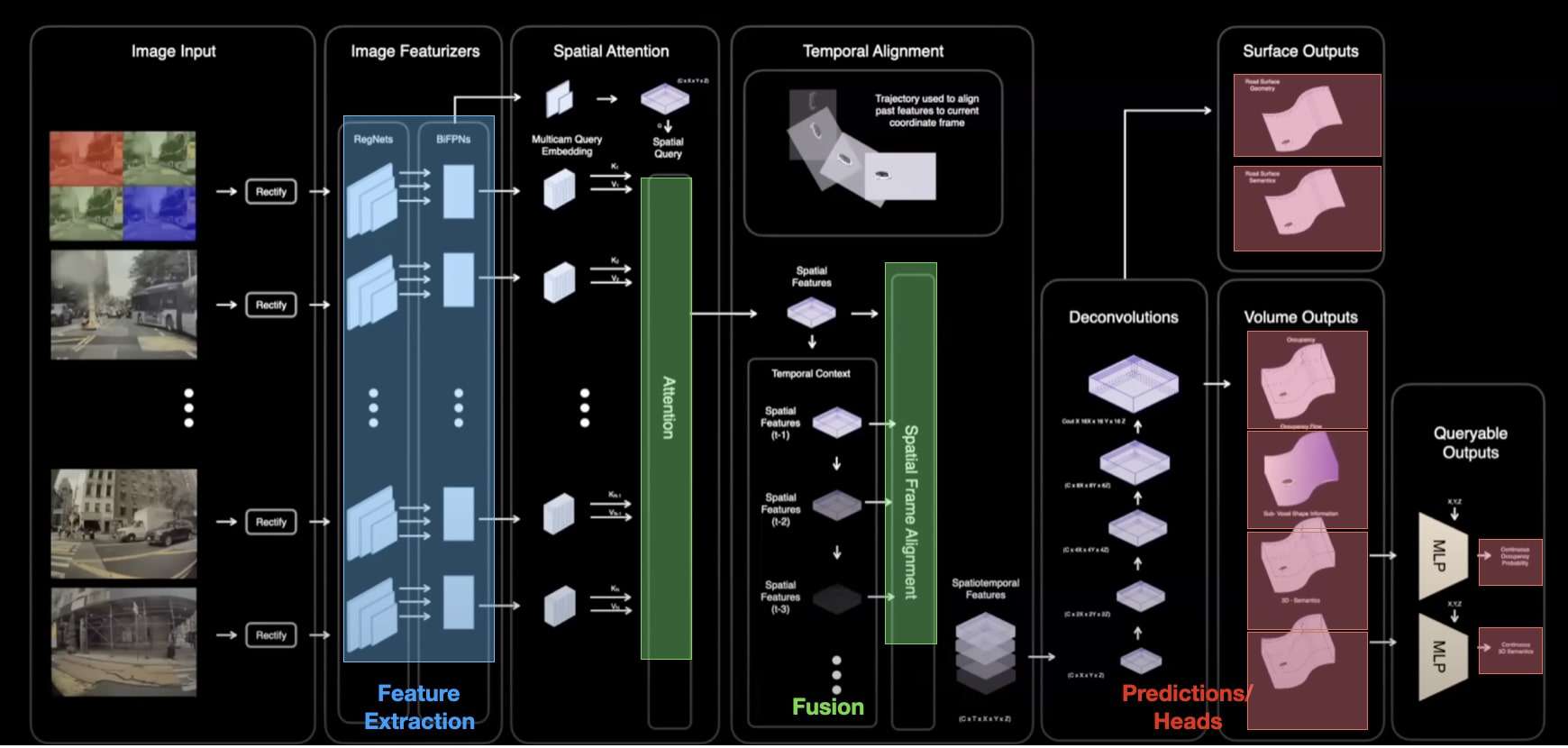

First, my research is based on Tesla's presentations at AI Day 2021, AI Day 2022, and CVPR 2023. You can find lots of details in my blog posts on Tesla's HydraNet, Tesla's Occupancy Network, and Tesla's End-To-End Architecture.

In all of these, the Perception part is essentially the same: it's done with both a HydraNet (for lanes & objects) and an Occupancy Network (for 3D occupancy & flow).

These modules are plugged together with a Deep Planner in an end-to-end fashion.

The question I want to answer is...

"Do either of these use Convolutions?"

Let's see:

Does the HydraNet use convolutions?

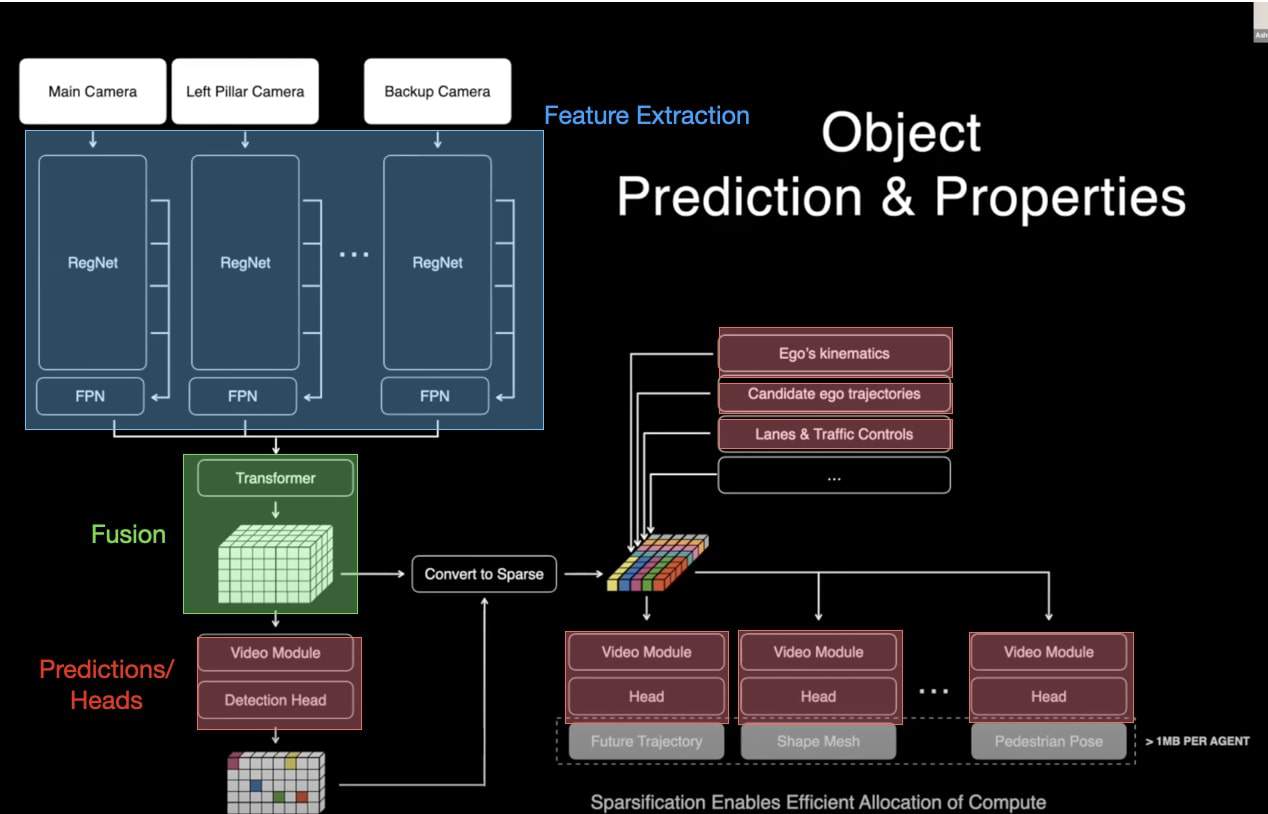

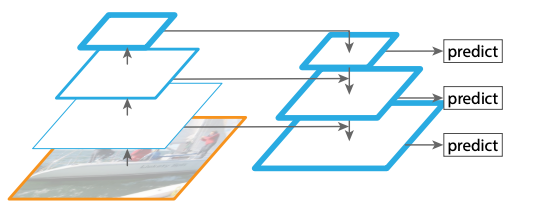

Here is the latest available architecture for the HydraNet. As a reminder, the HydraNet is a network with multiple heads, each head solving one task (you can find more details in my HydraNet course):

You can notice 3 key parts:

- In blue, feature extraction using RegNets

- In green, a Transformer based fusion

- In red, the heads doing the prediction (objects, lanes, ...)
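To make these three parts concrete, here is a minimal structural sketch in plain Python. Everything in it is hypothetical: the names (`extract_features`, `fuse`, `HEADS`, `hydranet`) and the toy arithmetic are mine, not Tesla's. The only thing it is meant to show is the shape of the design: one shared backbone per camera, one fusion step, and several heads reading the same fused features.

```python
from typing import Callable, Dict, List

Features = List[float]


def extract_features(image: List[float]) -> Features:
    """Stand-in for the blue part (backbone): average neighbouring pixels pairwise."""
    return [(image[i] + image[i + 1]) / 2 for i in range(0, len(image) - 1, 2)]


def fuse(per_camera: List[Features]) -> Features:
    """Stand-in for the green part (fusion): element-wise mean across cameras."""
    n = len(per_camera)
    return [sum(values) / n for values in zip(*per_camera)]


# Stand-ins for the red part: every head reads the SAME fused features.
HEADS: Dict[str, Callable[[Features], float]] = {
    "objects": max,  # toy "object" head
    "lanes": min,    # toy "lane" head
}


def hydranet(images: List[List[float]]) -> Dict[str, float]:
    """Backbone once per camera, fusion once, then one cheap pass per head."""
    fused = fuse([extract_features(img) for img in images])
    return {task: head(fused) for task, head in HEADS.items()}


cameras = [[0.0, 2.0, 4.0, 6.0], [2.0, 4.0, 6.0, 8.0]]
predictions = hydranet(cameras)
print(predictions)  # {'objects': 6.0, 'lanes': 2.0}
```

Adding a task here means adding one entry to `HEADS`. The expensive feature extraction is not repeated, which is the whole appeal of a multi-head design.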

And of course, most of it is Transformer based now...

Except one part:

The RegNet & FPNs!

Inside RegNets

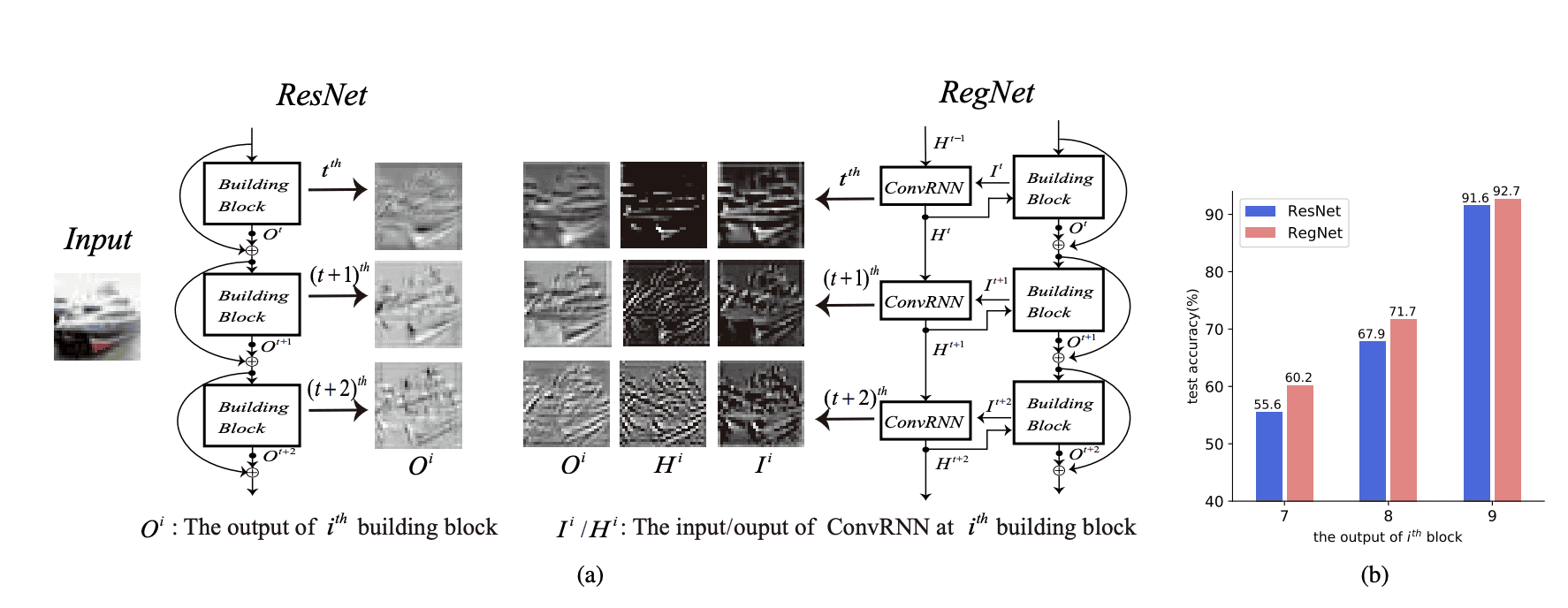

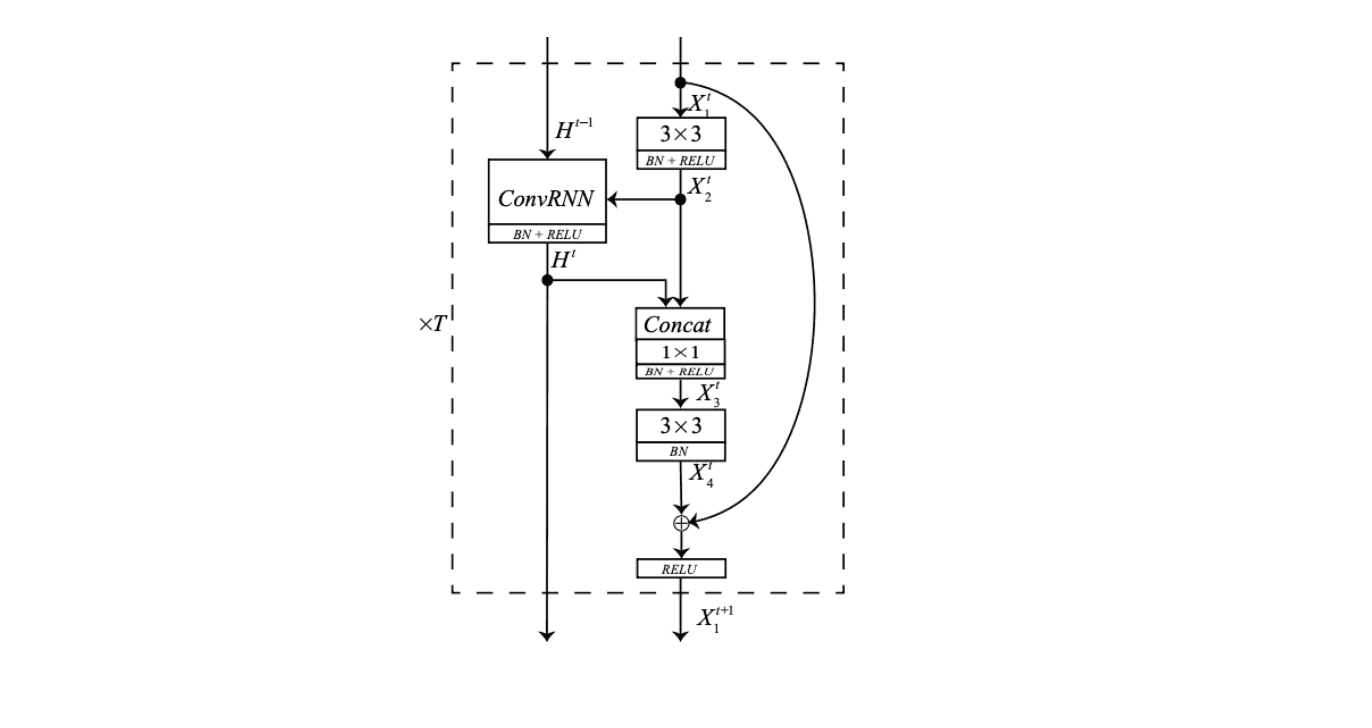

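RegNet is the algorithm doing feature extraction. Be careful, because two papers share that name. The one I describe here is the RNN-Regulated Residual Network ("RegNet: Self-Regulated Network for Image Classification"), and when looking at the paper, they tell you it's a ConvRNN-based feature extractor. The RegNets Tesla showed at AI Day 2021 look closer to the other one ("Designing Network Design Spaces"), a family of residual CNNs picked for their latency/accuracy trade-off. Both are convolutional, so the answer to our question doesn't change.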

So as you can see, it's a better way to do feature extraction (the output looks better, at least), and it gets there by using a ConvRNN. Here's the gist of it:

1. We start with a ResNet design, which is a good feature extractor. The "building block" noted is a set of Conv+BatchNorm+ReLU.

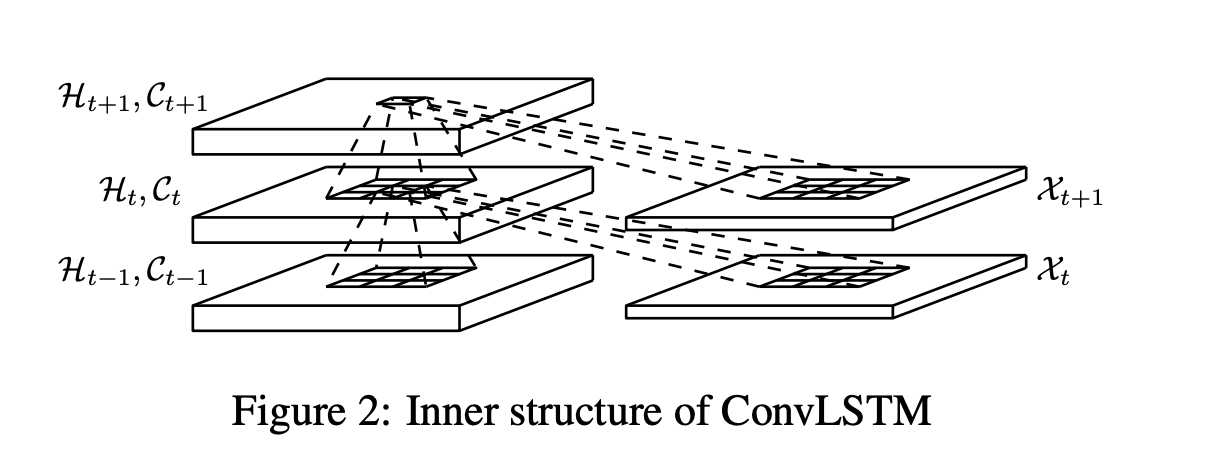

2. At each output, we pass it through an RNN. This is the ConvLSTM architecture: putting a Recurrent Neural Network inside a CNN. This helps with temporal dependencies.

3. We pass it through the next stage and repeat for dozens of layers. At the end, we do a global pooling of all the features extracted and pass it to the Transformers.
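The three steps above can be sketched in a few lines of plain Python. This is a toy under loud assumptions: the signal is 1-D, BatchNorm is left out, and the `regulator` is a simple element-wise gated cell I made up for illustration, not the convolutional recurrent cell from the paper. All function names are hypothetical. What it does show is the idea of a memory `h` that travels from one building block to the next.

```python
import math
from typing import List, Tuple

Vector = List[float]


def conv1d_same(x: Vector, kernel: Vector) -> Vector:
    """1-D convolution (cross-correlation) with zero padding; output has len(x)."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + x + [0.0] * pad
    return [sum(k * padded[i + j] for j, k in enumerate(kernel)) for i in range(len(x))]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


def regulator(y: Vector, h: Vector) -> Vector:
    """Toy recurrent cell: a sigmoid gate blends the old memory h with new features y."""
    updated = []
    for yi, hi in zip(y, h):
        z = 1.0 / (1.0 + math.exp(-(yi + hi)))  # update gate, always in (0, 1)
        updated.append((1.0 - z) * hi + z * math.tanh(yi))
    return updated


def regulated_block(x: Vector, h: Vector, kernel: Vector) -> Tuple[Vector, Vector]:
    y = relu(conv1d_same(x, kernel))       # step 1: building block (BatchNorm omitted)
    h = regulator(y, h)                    # step 2: pass the block output through the RNN
    out = [a + b for a, b in zip(x, h)]    # skip connection, as in a ResNet
    return out, h


def extract(x: Vector, kernels: List[Vector]) -> Tuple[float, Vector]:
    h = [0.0] * len(x)                     # the memory starts empty
    for kernel in kernels:                 # step 3: repeat stage after stage
        x, h = regulated_block(x, h, kernel)
    pooled = sum(x) / len(x)               # global average pooling
    return pooled, h


signal = [0.0, 1.0, 0.0, -1.0, 2.0]
pooled, memory = extract(signal, kernels=[[0.25, 0.5, 0.25]] * 3)
print(pooled, memory)
```

Note that the same `h` is threaded through every block, so later stages can reuse what earlier stages saw instead of relying on the skip connection alone.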

Okay, so this is what Tesla uses: a RegNet, followed by an FPN (Feature Pyramid Network).
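If FPNs are new to you, here is a minimal sketch of their top-down pathway, again on 1-D toy features. It assumes nearest-neighbour upsampling and skips the 1x1 lateral and 3x3 smoothing convolutions a real FPN would have. The names `upsample2x` and `top_down` are hypothetical.

```python
from typing import List

Vector = List[float]


def upsample2x(x: Vector) -> Vector:
    """Nearest-neighbour upsampling: repeat every value twice."""
    return [v for v in x for _ in range(2)]


def top_down(levels: List[Vector]) -> List[Vector]:
    """levels go from fine (long) to coarse (short); returns merged levels in the same order."""
    merged = [levels[-1]]                      # start from the coarsest level
    for lateral in reversed(levels[:-1]):
        up = upsample2x(merged[0])             # bring the coarser map to this resolution
        merged.insert(0, [a + b for a, b in zip(lateral, up)])
    return merged


backbone_levels = [[1.0, 1.0, 1.0, 1.0], [2.0, 2.0], [4.0]]
pyramid = top_down(backbone_levels)
print(pyramid)  # [[7.0, 7.0, 7.0, 7.0], [6.0, 6.0], [4.0]]
```

The finest level ends up carrying information from every coarser level, which is why an FPN helps with objects at very different scales.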

FYI: I talk a lot about RNNs and CNNs together in my Optical Flow course, and a lot about FPNs in my Image Segmentation course.

So we know the HydraNet uses CNNs. Now let's see the second one:

The Occupancy Network

You can obviously find more details in my dedicated 2,000-word article, but the gist is, feature extraction is also based on RegNets and FPNs!

So, I hereby claim that, according to his own company's presentations, Elon Musk is...

WRONG!

Now, you're going to tell me:

"Hey Jeremy, how do you know they didn't replace the RegNet with a Transformer?"

And I think that this is unlikely, and I'll tell you why in the second part of this article...

Why Tesla still uses CNNs

To answer this, we have 2 key questions:

- Why do CNNs outperform Transformers for feature extraction?

- Why did Tesla choose to use CNNs before Transformers, and not Transformers directly?

Why CNNs outperform Transformers for Feature Extraction

Well, first things first: CNNs have been built for feature extraction.

They were actually built to replace manual feature extractors like the Histogram of Oriented Gradients (HOG). Transformers were NOT built for that. They were built for sequences: to find which elements deserve "attention" and to capture long-range and temporal dependencies.

So if you want to do feature extraction, it's much better to do it with a feature extractor, a CNN. It's also much faster: self-attention compares every token with every other token, so its cost grows quadratically with the number of tokens, which gets expensive on full-resolution images.

If you had to cut a piece of steak, would you rather use a steak knife like CNNs, or a Swiss Army knife like Transformers?
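Here is what "built for feature extraction" means in practice, as a small sketch. HOG starts from image gradients computed with fixed filters. A convolution does the same operation, except that a CNN learns its kernels instead of having them hand-picked. Below I use a fixed Sobel kernel as a stand-in for a learned one. The helper name `conv2d_valid` and the toy image are mine.

```python
from typing import List

Image = List[List[float]]


def conv2d_valid(image: Image, kernel: Image) -> Image:
    """2-D cross-correlation without padding (what deep learning calls a 'convolution')."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[a][b] * image[i + a][j + b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Sobel kernel for horizontal gradients: it responds to vertical edges.
SOBEL_X = [[-1.0, 0.0, 1.0],
           [-2.0, 0.0, 2.0],
           [-1.0, 0.0, 1.0]]

# 4x6 toy image: dark left half (0), bright right half (1).
image = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(4)]

edges = conv2d_valid(image, SOBEL_X)
print(edges)  # [[0.0, 4.0, 4.0, 0.0], [0.0, 4.0, 4.0, 0.0]]
```

The response is zero in the flat regions and strong right where the dark half meets the bright half. A CNN stacks many such filters, learned from data, and that stack is the feature extractor.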

Now the second question:

Why Tesla doesn't go straight to Transformers

Well, it's likely based on their own research and trial and error...

But it could also make sense to do:

- CNN/Feature Extraction and

- Transformers/Fusion

It's something I also explain in my Video Transformers Workshop. CNNs reduce the dimension of the input, capture the interesting patterns, and spot both local and global features...

So basically, CNNs are here to make the Transformers' job easier and faster. Rather than processing an image, the Transformers process features. Transformers are not here as a replacement for CNNs; they're here as a replacement for LSTMs and RNNs.

It's basically like going to the grocery shop and buying the right ingredients first, and then giving them to the chef — rather than asking the chef to also go to the grocery shop.

"Hey, here are features, find the attention spots!".

You have much less to process.
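How much less? Here is a back-of-the-envelope calculation. The numbers are assumptions I picked for illustration, not Tesla's actual configuration: a 1280x960 image, a Transformer fed 16x16 patches directly, versus a Transformer fed a CNN feature map with an overall stride of 32.

```python
def num_tokens(width: int, height: int, stride: int) -> int:
    """One token per stride x stride cell of the image."""
    return (width // stride) * (height // stride)


def attention_pairs(tokens: int) -> int:
    """Self-attention scores every token against every token."""
    return tokens * tokens


WIDTH, HEIGHT = 1280, 960  # assumed camera resolution

patch_tokens = num_tokens(WIDTH, HEIGHT, stride=16)    # image patches fed directly
feature_tokens = num_tokens(WIDTH, HEIGHT, stride=32)  # cells of a CNN feature map

print(patch_tokens, attention_pairs(patch_tokens))      # 4800 23040000
print(feature_tokens, attention_pairs(feature_tokens))  # 1200 1440000
print(attention_pairs(patch_tokens) / attention_pairs(feature_tokens))  # 16.0
```

Under these assumptions, halving the resolution on each side gives 4x fewer tokens and 16x fewer attention scores to compute, per camera and per layer.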

Okay, I could go on and on, but you get my point: we want to use CNNs for feature extraction, and we want to use Transformers for spatial fusion (all the Tesla cameras), attention, and temporal processing (t-1, t-2, ...).
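To show what "fusion with attention" looks like, here is a minimal scaled dot-product attention over per-camera feature vectors. It is a sketch under assumptions: the feature vectors are made up, and the keys and values are the raw features with no learned projections, which a real Transformer would have. The names `softmax` and `attend` are mine.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Tuple[Vector, Vector]:
    """Scaled dot-product attention for a single query."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    fused = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return fused, weights


# One (made-up) CNN feature vector per camera.
camera_features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query = [1.0, 0.0]  # "what am I looking for?"

fused, weights = attend(query, camera_features, camera_features)
print(weights)  # cameras 0 and 2 match the query and get the larger weights
print(fused)
```

The query decides how much each camera contributes to the fused vector. The inputs are already features, not pixels, so the attention step stays small.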

So, now let's do a quick summary:

Summary

- If we stick to CVPR 2023, Yann LeCun is right that a company like Tesla still needs to use CNNs. Transformers are slow, and they haven't been built for Feature Extraction.

- Tesla has an End-To-End architecture using a HydraNet, an Occupancy Network, and a Deep Planner.

- The HydraNet uses a convolutional feature extractor named RegNet. The variant described here is ConvRNN-based and uses RNNs to 'self-regulate' and get a better output. It also uses FPNs after this.

- The Occupancy Network uses this exact same feature extraction technique, but with Bilinear FPNs.

- In the end, we realize that CNNs and Transformers can fit right together. They're not necessarily here to replace each other; a CNN is a perfect feature extractor, but performs badly with temporal dependencies and fusion.

- A Transformer is a versatile tool, a Swiss Army knife, that can perfectly handle the temporal issue, but will not be as good as a CNN for feature extraction.

Next Steps

If you liked this article, you'll likely want to learn more about what's going on under the hood of these models. I would recommend my 3 blog posts on Tesla's HydraNet, Tesla's Occupancy Network, and Tesla's End-To-End Architecture.