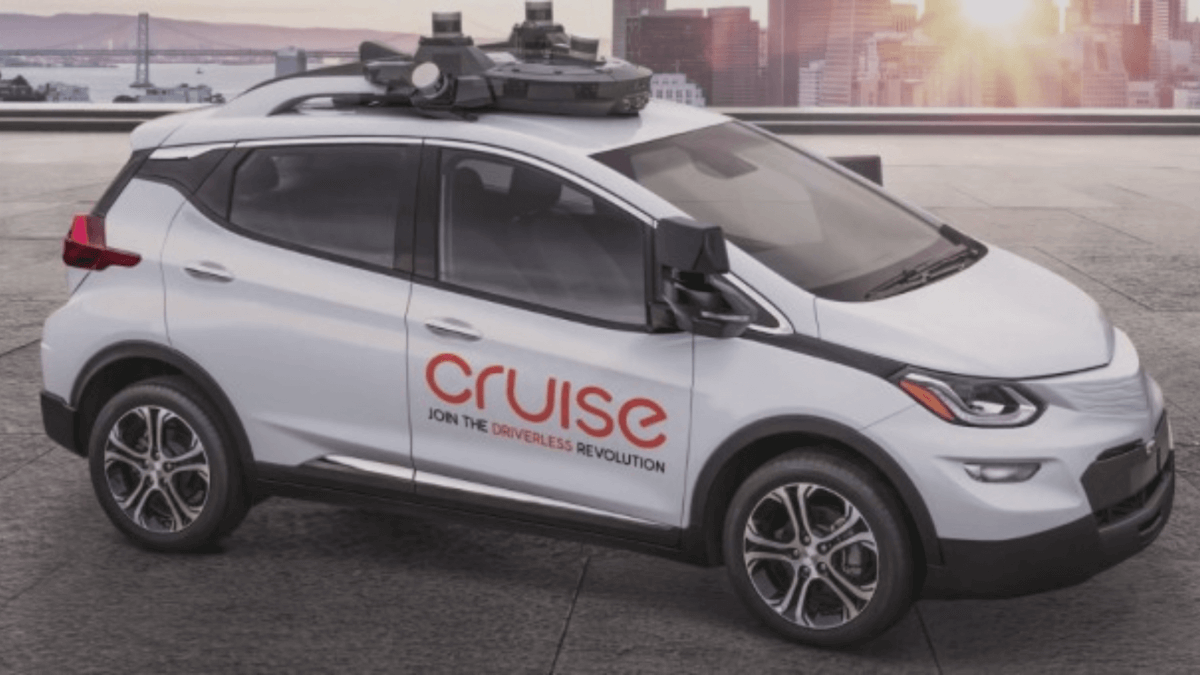

Cruise Automation — A Self-Driving Car Startup

Cruise Automation is a self-driving car company whose goal is to deploy safe self-driving cars at scale.

Founded in 2013, they develop self-driving cars that can take passengers from A to B in a safe and comfortable way. I got interested in their system and watched a few videos and interviews to get back to you with a concise analysis.

What’s behind their system? What algorithms are in use? Can they compete with Waymo and Tesla to provide autonomous driving at scale?

Let’s take a look at Cruise Automation…

📩 Before we start, I made a Self-Driving Car Mindmap that you can receive if you join the daily emails.

This is the most efficient way to understand autonomous tech in depth and join the industry faster than anyone else.

How it started

Cruise was founded around 2013 by Kyle Vogt and Dan Kan. Their main activity was a highway Autopilot for Audi that identified lane lines with a RADAR/Camera system.

Then, Cruise wanted to do more and moved to fully autonomous cars:

In 2015, they added LiDAR to allow for urban driving.

The startup went through an accelerator program called Y Combinator.

A year later, they got acquired by General Motors and grew massively.

They moved the garage a few times, and named all of their self-driving cars after MARVEL’s characters: Scarlett, Iron Man, …

Today, Cruise is a huge team of over 1,600 engineers working to solve one of the most interesting and challenging problems of them all: Urban Autonomous Driving.

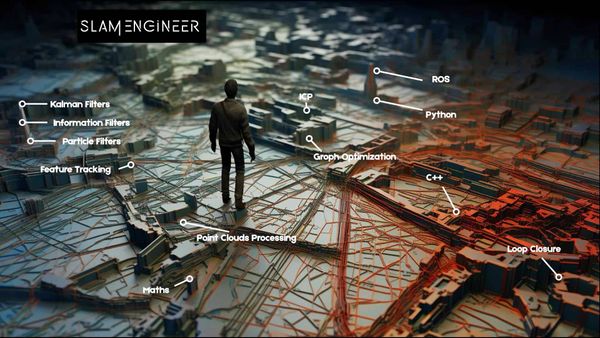

Self-Driving Cars in one image

This article will detail the Perception stack, and then briefly mention the rest.

Perception

In this interview from 2017, former Head of Computer Vision Peter Gao described Cruise’s problem as tough and unpredictable.

Funny story, I looked for Peter Gao on LinkedIn and found a second Peter Gao, also working on self-driving cars… but for Waymo.

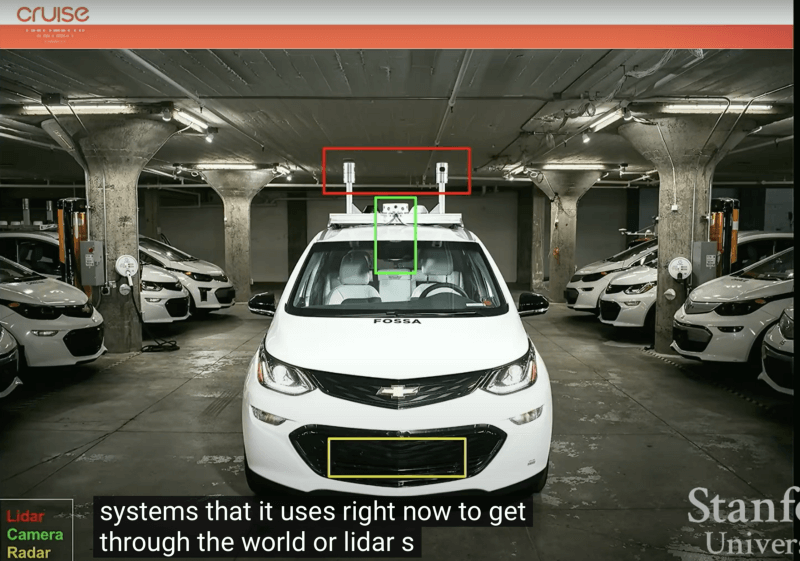

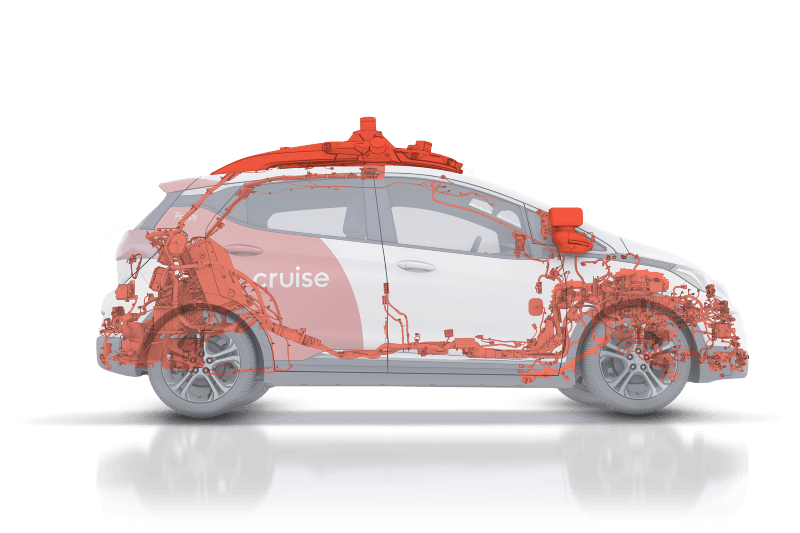

Cruise’s Perception system uses LiDARs, Cameras, and RADARs. Cameras and RADARs can be a good fit for highway autopilot, but LiDAR adds a lot of information when it comes to urban driving and pedestrian detection.

Let’s start with the camera…

In this article, I’ll mention a process called “Sensor Fusion”… the idea is to fuse data coming from different sensors (for example a camera and a LiDAR) and thus take advantage of both sensors. More on Sensor Fusion here.

How does the Computer Vision system work?

Computer Vision is everywhere in autonomous driving: to find lane lines, obstacles, or even traffic signs and lights… we need to use the camera and Computer Vision algorithms…

Cruise’s process works in 5 steps:

- Camera Calibration

- Time Calibration — Sensor Fusion

- Labeling — Active Learning

- Big Data — Fleet Management

- Perception Tasks — Detection, Tracking, …

Camera Calibration

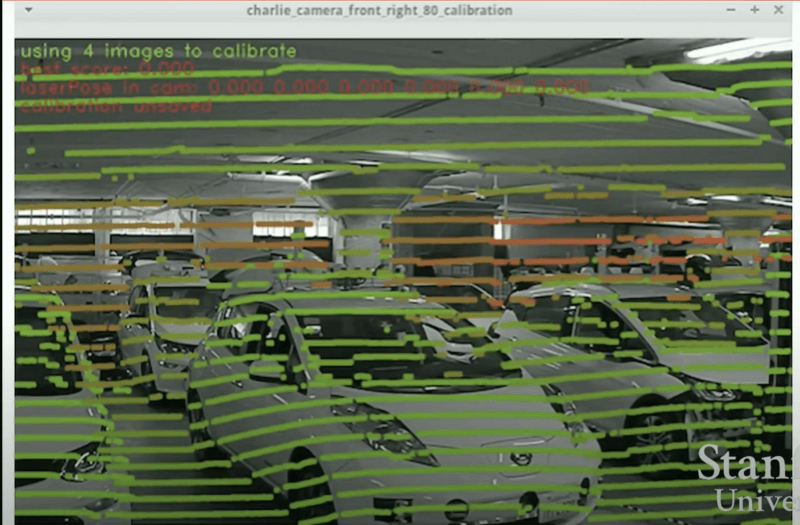

Every camera needs calibration. It helps with 3D geometry, but also to get an accurate image.

Intrinsic Calibration helps with camera image rectification: it removes the lens distortion (the “GoPro effect”) and gives us a rectified image.

Extrinsic Calibration is a camera/car calibration — it helps with Sensor Fusion and 3D projections later.

In the following image, you can see the results of extrinsic calibration. The closer the object, the greener.
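To make the two calibrations concrete, here is a minimal sketch of how a 3D point ends up on a pixel. It assumes an ideal pinhole camera with no lens distortion. The function name `project_point` and every numeric value (focal lengths, principal point, identity extrinsics) are made up for illustration and are not Cruise's parameters.

```python
def project_point(point_3d, rotation, translation, fx, fy, cx, cy):
    """Project one 3D point into pixel coordinates (u, v) plus its depth.

    rotation (3x3) and translation (3,) are the extrinsic parameters.
    fx, fy, cx, cy are the intrinsic parameters of a pinhole camera.
    The camera frame is assumed to be x right, y down, z forward.
    """
    # Extrinsics: move the point from the sensor frame to the camera frame.
    x, y, z = (
        sum(rotation[r][c] * point_3d[c] for c in range(3)) + translation[r]
        for r in range(3)
    )
    if z <= 0:
        return None  # the point is behind the camera, nothing to draw
    # Intrinsics: perspective division, then scale and shift into pixels.
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v, z


# Illustrative values only: the sensor is assumed to sit exactly at the camera.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
pixel = project_point((1.0, 0.5, 10.0), IDENTITY, (0.0, 0.0, 0.0),
                      fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
```

The returned depth is the value a visualization would map to a color, for example greener for closer points.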

👉 If you’d like to learn more about LiDAR Camera Fusion, I invite you to take my course LEARN VISUAL FUSION: Expert Techniques for LiDAR/Camera Fusion in Self-Driving Cars

Time Calibration

One of the key aspects of Sensor Fusion is to match a frame from a camera to a frame from the LiDAR…but these must be taken at the exact same time!

Time Calibration is done using a process called phase locking: the LiDAR is scheduled to reach a given point of its rotation at a given point in time.
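Here is a rough sketch of the idea, assuming a LiDAR that spins at a constant 10 Hz and whose sweep start time is known thanks to phase locking. The function names, the spin rate, and the timestamps are illustrative assumptions, not Cruise's values.

```python
def lidar_time_at_azimuth(sweep_start_s, rotation_hz, azimuth_deg):
    """Time at which the spinning LiDAR points at a given azimuth.

    Assumes a constant spin rate and an azimuth measured from the
    direction the LiDAR faces at sweep_start_s.
    """
    period_s = 1.0 / rotation_hz
    return sweep_start_s + (azimuth_deg % 360.0) / 360.0 * period_s


def closest_frame(camera_timestamps, target_time_s):
    """Pick the camera frame captured closest to the target time."""
    return min(camera_timestamps, key=lambda t: abs(t - target_time_s))


# A camera assumed to look 90 degrees away from the sweep start direction.
beam_time = lidar_time_at_azimuth(sweep_start_s=100.0, rotation_hz=10.0,
                                  azimuth_deg=90.0)
matched_frame = closest_frame([100.0, 100.033, 100.066], beam_time)
```

Because the rotation is scheduled, the camera can also be triggered at `beam_time` directly instead of searching for the nearest frame afterwards.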

Labeling

There is a lot of Machine Learning at Cruise. Labeling is therefore an essential task.

2D Obstacles, tracking, lane lines, freespace, traffic lights and signs, hazard lights, and much more must be detected…

Big Data

Every vehicle generates hundreds of Terabytes every single day. If you’ve followed my course ROBOTIC ARCHITECT, you know that a 20-second recording is already over 5 GB.

The number of cars is set to grow exponentially, and the resolution of cameras as well…

Cruise uses Spark to aggregate data and do cool operations such as query or even multi-machine training…

We have a brief idea of the sensors and the pipeline Cruise uses. How do they actually detect obstacles?

Autonomous Driving Tasks

Cruise is doing Urban driving. Urban driving introduces a lot of complex scenarios that are not present in community driving or highway driving. For example, we must detect traffic lights, define and understand what a double-parked car is, overtake vehicles, predict their moves, …

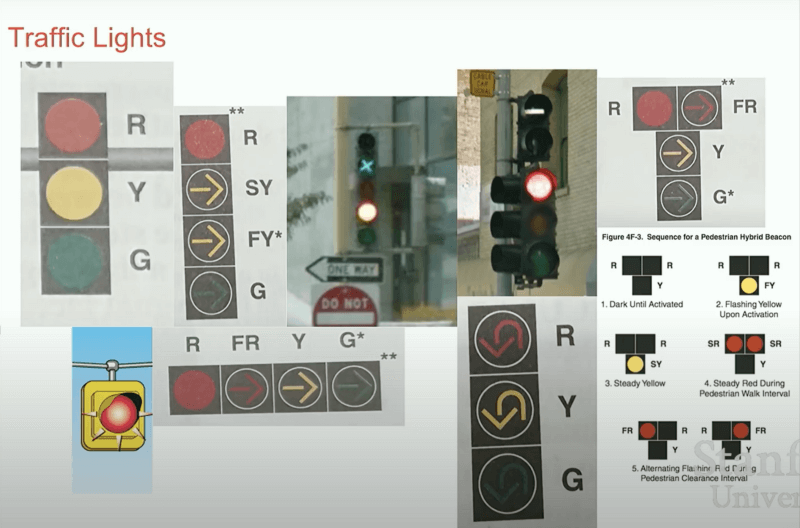

Traffic Light Detection

Cruise drives primarily in San Francisco, here’s an example of the traffic lights they classified.

As you can see, the problem is complex: it’s not just green, yellow, and red.

There can be multiple colours, directions, flashing lights, and all of these new things every single day.

The problem is still a classification/obstacle detection one, but with more than the 3 classes you would expect. In reality, traffic lights and signs are some of the most difficult problems due to the diversity of signs we can have for a single class.

As a comparison, it was only a few months ago, in 2020, that Tesla (which leads the automation race so far) announced it could detect traffic lights.

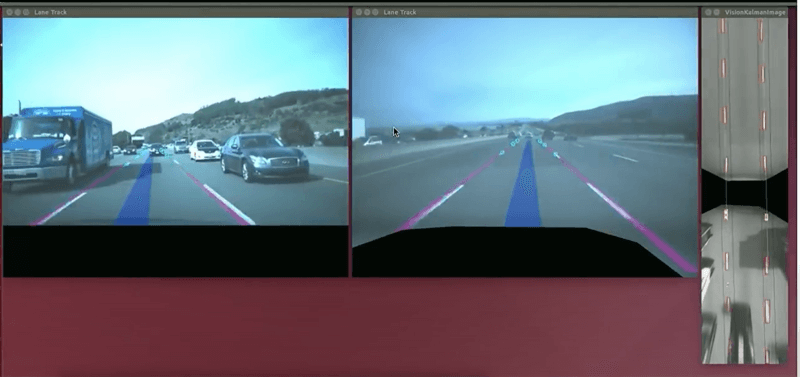

3D Obstacle Detection & Sensor Fusion

Cruise detects obstacles in 2D, and then transposes them into 3D space.

How? The 2D obstacles are fused with LiDAR and RADAR 3D features, and then projected thanks to the camera’s extrinsic parameters.

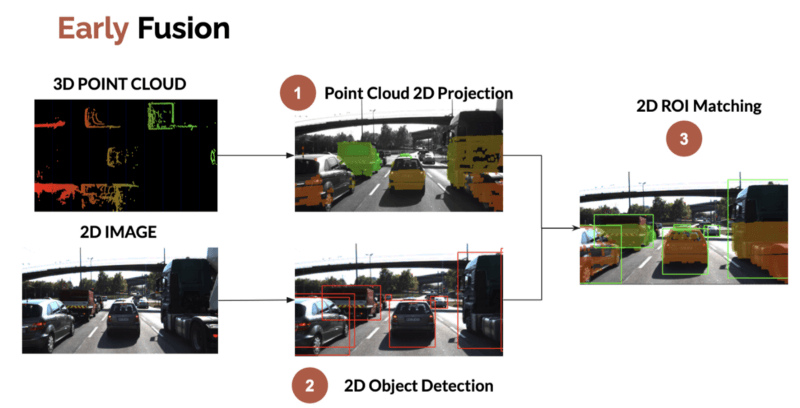

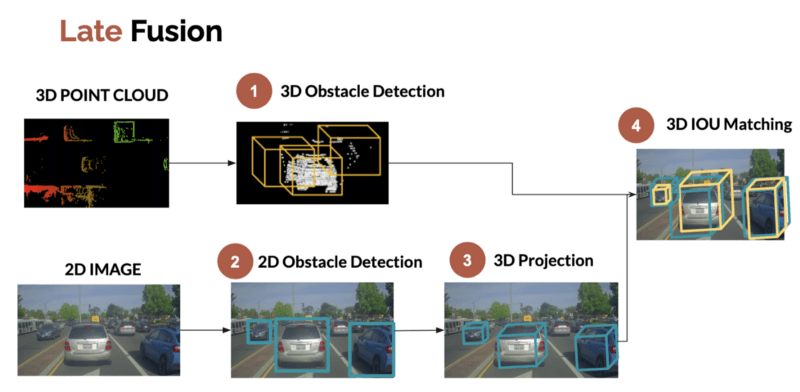

In my Visual Fusion Course, I describe two fusion processes called Early and Late Fusion.

Early fusion is about fusing the pixels and image features with the LiDAR point cloud before even running a detection algorithm. The fusion is the first operation made.

Late fusion is about fusing the independent results: a 2D bounding box coming from a camera with a 3D one coming from a LiDAR.

Cruise is doing late fusion: Obstacles are processed individually, and then fused.
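Late fusion can be sketched as a matching problem between two lists of detections. The example below is a simplified illustration, not Cruise's implementation: it assumes the LiDAR detections have already been projected into the image as 2D boxes, and it uses a greedy IoU match. The names `iou` and `late_fusion`, the 0.5 threshold, and all the box values are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes (u_min, v_min, u_max, v_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def late_fusion(camera_dets, lidar_dets, min_iou=0.5):
    """Greedily pair each camera detection with the best unused LiDAR detection.

    camera_dets: list of {"label": str, "box": 2D box from the image detector}
    lidar_dets:  list of {"box": projected 2D box, "position_3d": (x, y, z)}
    """
    fused, used = [], set()
    for cam in camera_dets:
        best_index, best_score = None, min_iou
        for index, lid in enumerate(lidar_dets):
            score = iou(cam["box"], lid["box"])
            if index not in used and score >= best_score:
                best_index, best_score = index, score
        if best_index is not None:
            used.add(best_index)
            # The camera brings the class, the LiDAR brings the 3D position.
            fused.append({"label": cam["label"],
                          "position_3d": lidar_dets[best_index]["position_3d"]})
    return fused


camera_dets = [{"label": "car", "box": (100.0, 100.0, 200.0, 200.0)}]
lidar_dets = [
    {"box": (400.0, 100.0, 450.0, 180.0), "position_3d": (30.0, 6.0, 0.0)},
    {"box": (110.0, 105.0, 205.0, 195.0), "position_3d": (12.0, -1.5, 0.0)},
]
fused = late_fusion(camera_dets, lidar_dets)
```

Each sensor runs its own detector first, and the fusion step only has to decide which results describe the same obstacle.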

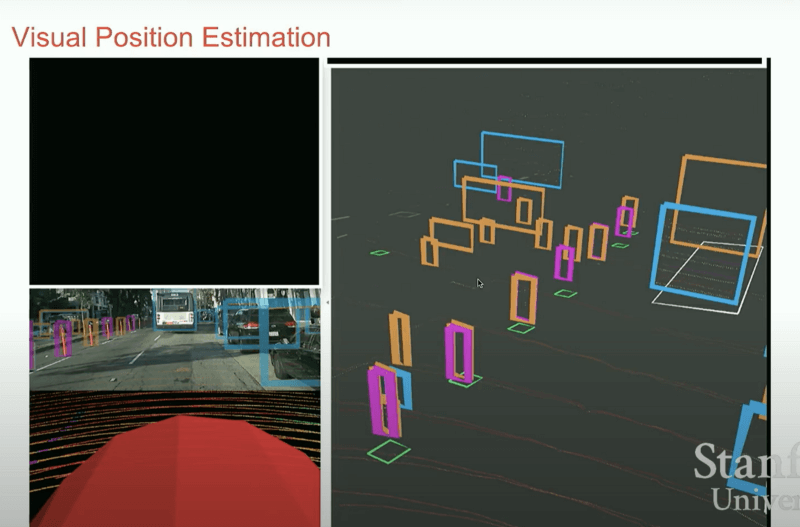

In the following image, you can see 2D obstacles (on the left) and their 3D projection (on the right).

Vehicle Light Detection — Double Parked Vehicles

Cruise drives mainly in San Francisco. When getting used to this city, they noticed something very common: double parked vehicles.

In San Francisco, double parked vehicles are everywhere. Whether it’s legal or not, it happens. A self-driving car has to adapt… or it will never drive over a minute.

(source: https://venturebeat.com/2019/06/27/heres-how-cruises-autonomous-cars-navigate-double-parked-cars/)

From the same source (VentureBeat) — In order to do this, Cruise’s cars must first identify them, which they accomplish by “looking” for a number of cues such as vehicles’ distance from road edges, the appearance of brake and hazard lights, and distance from the furthest intersection. They additionally use contextual cues like vehicle type (delivery trucks double-park frequently), construction activity, and the relative scarcity of nearby parking. […]

And later we can read that Recurrent Neural Networks (RNNs) are used to determine whether or not a car is double parked thanks to their long-term memories. We can’t determine if a car is double-parked using a single frame, we must use a sequence of images.

The Vehicle Light detection model allows Cruise to:

- confirm if a car is parked

- detect if a car is momentarily stopped (hazard lights)

- detect car intents with directionals

The planning system can then adapt: if a car is stopped but with no directionals, it can mean that it’s just paused for a few seconds. So we shouldn’t overtake.

If it’s stopped and has the hazard lights on, let’s overtake.
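The rule above, and the reason a single frame is not enough, can be sketched as follows. This is a deliberately simple stand-in for the RNN mentioned earlier: it counts on/off transitions over a window of frames to decide whether the hazard lights are blinking. The function names, the `min_toggles` threshold, and the per-frame flags are assumptions made for illustration.

```python
def hazard_lights_blinking(frames_lit, min_toggles=4):
    """Decide whether hazard lights are blinking over a sequence of frames.

    frames_lit holds one boolean per frame, True when both indicator lamps
    are lit. Blinking only shows up as repeated on/off transitions, which
    is why one frame alone cannot answer the question.
    """
    toggles = sum(1 for prev, cur in zip(frames_lit, frames_lit[1:]) if prev != cur)
    return toggles >= min_toggles


def should_overtake(is_stopped, frames_lit):
    """Overtake only a stopped vehicle whose hazard lights are blinking."""
    return is_stopped and hazard_lights_blinking(frames_lit)


blinking = [True, True, False, False, True, True, False, False, True, True]
lights_off = [False] * 10

overtake_hazards = should_overtake(True, blinking)     # stopped, hazards on
overtake_paused = should_overtake(True, lights_off)    # stopped, no lights
```

A learned sequence model replaces the hand-written toggle count, but the input is the same: a sequence of observations rather than one image.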

Those are the core elements of Perception.

Obstacle Prediction

Today, a Cruise vehicle can detect a lot, and it can even predict pedestrian and car intents. Every obstacle will have this green arrow representing the future positions.

Prediction is when we take the detected obstacles and project their state (position, velocity) a few seconds into the future…
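The simplest version of this projection is a constant-velocity model, which is also the prediction step of a basic Kalman Filter. The sketch below is only a baseline under that assumption; the function name, the 3-second horizon, and the example state are illustrative.

```python
def predict_positions(x, y, vx, vy, horizon_s=3.0, step_s=1.0):
    """Project an obstacle's position into the future at constant velocity.

    Returns one (x, y) position per time step, up to horizon_s seconds.
    """
    steps = int(round(horizon_s / step_s))
    return [(x + vx * k * step_s, y + vy * k * step_s) for k in range(1, steps + 1)]


# A pedestrian at the origin walking at 2 m/s along x and 1 m/s along y.
future = predict_positions(0.0, 0.0, 2.0, 1.0)
```

This works reasonably well over short horizons on straight roads and breaks down where obstacles change direction, such as at intersections.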

In my Tracking Pack, I use obstacle detection, Hungarian matching, and Kalman Filters to implement the SORT algorithm that detects, tracks, and predicts every obstacle’s position. Cruise is probably using similar techniques too, but when it comes to intersections, it’s purely a learning approach.

Cruise has a fleet of dozens of vehicles: they leverage it.

How? Every vehicle is independently seeing different situations, and can therefore learn from the other vehicles.

Vehicles drove thousands of hours in San Francisco… They learned the trajectories in specific situations. There are some edge cases, a lot of them, but it’s still a learning problem.

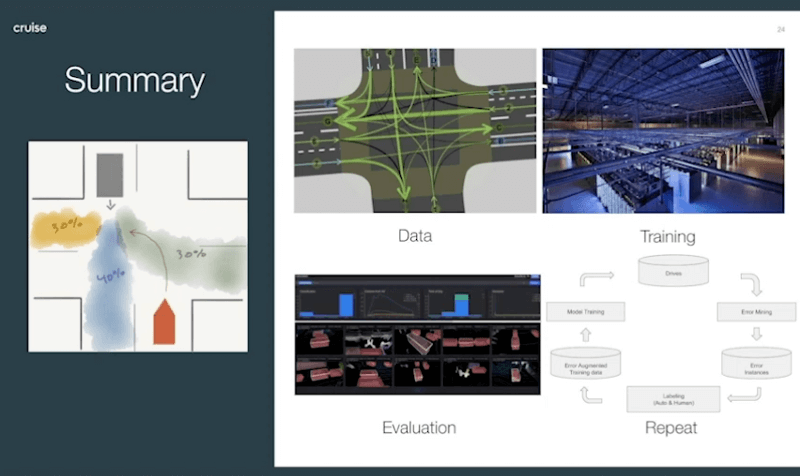

In the following image, we can see a simplified vision of their approach…

First, we can see that this is a classification problem.

The vehicle has a 30% chance of turning right, 30% left, and 40% chance of going straight.

Trajectories are computed, with likely (green) and unlikely (black) ones.

The model is evaluated, and there is a retraining process in a closed feedback loop.

Let’s see it in more detail...

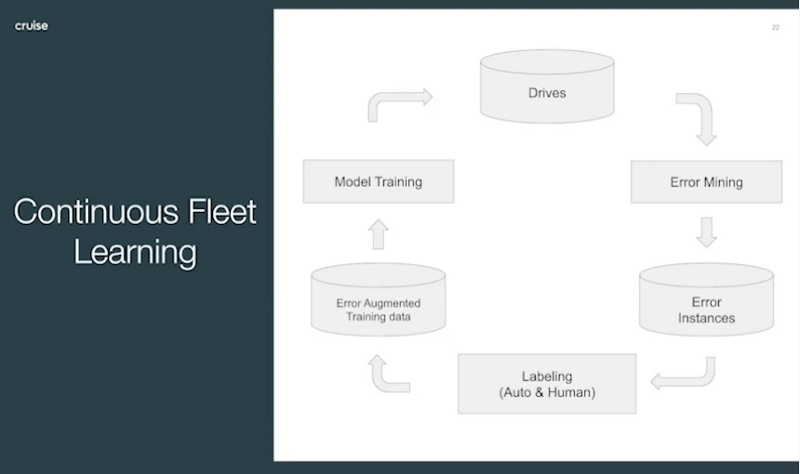

This is similar to an active learning problem, but they call it Continuous Fleet Learning.
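One way to picture the feedback loop: compare what the model predicted with what the vehicle ended up doing, and keep the badly predicted cases for retraining. The sketch below is an assumption about how such a selection could work, not Cruise's pipeline; the function names, the `max_loss` threshold, and the samples are hypothetical.

```python
import math


def cross_entropy(predicted, observed):
    """Loss of one prediction: minus the log of the probability that the
    model gave to the maneuver that actually happened."""
    return -math.log(predicted[observed])


def select_for_retraining(samples, max_loss=1.0):
    """Keep the samples the model predicted poorly."""
    return [s for s in samples
            if cross_entropy(s["predicted"], s["observed"]) > max_loss]


samples = [
    # The 30% / 40% / 30% case: the model was unsure and the car turned left.
    {"id": "intersection_a",
     "predicted": {"left": 0.3, "straight": 0.4, "right": 0.3},
     "observed": "left"},
    # A confident and correct prediction: nothing new to learn here.
    {"id": "intersection_b",
     "predicted": {"left": 0.05, "straight": 0.9, "right": 0.05},
     "observed": "straight"},
]
hard_cases = select_for_retraining(samples)
```

The observed maneuver acts as a free label: the fleet only has to wait a few seconds to find out what the other vehicle really did.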

Today, Cruise’s system is so much more powerful than what I just quoted.

Cruise has been able to drive for years in San Francisco…

They can do specific maneuvers like unprotected lefts, but they can also drive safely in streets full of children, climb hills, and they only need about 1 operator for every 10 vehicles.

Their system is impressive and very smooth but we don’t know much about their time spent driving outside of San Francisco.

It raises an important question about Company owned Fleet and Customer Owned Fleet.

Tesla does not control its fleet; its users do. They can be in the UK, in France, or in the US, and data from all of these countries is fed to Tesla’s system.

Cruise decides where its fleet operates, so it is never forced to discover new regions.

Control

A final word on the controllers used. The goal of the control command part is to generate a steering angle and an acceleration value to follow the computed trajectory.

Cruise uses a Model Predictive Control algorithm to do so. You can read more about this type of algorithm in my article Control Command in Self-Driving Cars.
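Here is a minimal sketch of the receding-horizon idea, not Cruise's controller. It assumes a kinematic bicycle model, a straight reference line at lateral offset `target_y`, and a brute-force search over a few constant commands instead of the numerical optimizer a real MPC would use. The wheelbase, time step, horizon, cost weights, and candidate commands are all illustrative assumptions.

```python
import math

WHEELBASE_M = 2.7     # assumed distance between front and rear axles
DT_S = 0.1            # simulation time step
HORIZON_STEPS = 10    # how far ahead each candidate command is simulated


def step(state, steering_rad, accel_mps2):
    """Advance a kinematic bicycle model by one time step."""
    x, y, yaw, v = state
    x += v * math.cos(yaw) * DT_S
    y += v * math.sin(yaw) * DT_S
    yaw += v / WHEELBASE_M * math.tan(steering_rad) * DT_S
    v += accel_mps2 * DT_S
    return (x, y, yaw, v)


def rollout_cost(state, steering_rad, accel_mps2, target_y, target_speed):
    """Simulate one candidate command and sum the tracking errors."""
    cost = 0.0
    for _ in range(HORIZON_STEPS):
        state = step(state, steering_rad, accel_mps2)
        cost += (state[1] - target_y) ** 2 + 0.1 * (state[3] - target_speed) ** 2
    return cost


def mpc_command(state, target_y, target_speed):
    """Return the (steering, acceleration) pair with the lowest predicted cost."""
    steering_options = [math.radians(d) for d in range(-20, 21, 5)]
    accel_options = [-2.0, -1.0, 0.0, 1.0, 2.0]
    return min(
        ((s, a) for s in steering_options for a in accel_options),
        key=lambda cmd: rollout_cost(state, cmd[0], cmd[1], target_y, target_speed),
    )


# State is (x, y, yaw, speed). The reference line is 1 m to the left.
command = mpc_command((0.0, 0.0, 0.0, 5.0), target_y=1.0, target_speed=5.0)
```

In a real loop, only this first command is applied, and the whole search is repeated at the next time step with the newly measured state.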

Conclusion

Building Self-Driving Cars is a dream, and not an easy one. With their system, Cruise proves that it can compete with the most advanced companies in the world by using state-of-the-art algorithms and continuously evolving their system.

I built Think Autonomous based on my field experience and with the simple objective to help you join the self-driving car industry. Whatever you decide, just keep that in mind: it’s possible, if you put your mind to it.

Learn more about Cruise—

Some images are from this video, specifically about prediction.

If you’d like to see Cruise in 2020, you can watch this great interview from Cruise’s founder Kyle Vogt.

Cruise built a ROS Visualizer called Webviz. It allows you to see RADAR output, LiDAR point clouds, and camera images.

Play with it here.

👉 It’s very similar to the work we do in my course ROBOTIC ARCHITECT that I highly recommend to get closer to real self-driving car building.

Cruise’s interns seem happy 😛 Watch their video here.

You can learn more about Cruise on their website.