The 6-Step Roadmap to Learn Sensor Fusion

Sensor Fusion is one of the most exciting topics in the robotics industry. It's about fusing data coming from multiple sensors to build a robust output. But how can we effectively learn sensor fusion?

Which skills should be prioritized? What is the quickest route to a job? And is there a list of selected courses I would recommend?

Before we begin, let's stop for a minute and think about the end goal...

Getting a Sensor Fusion Job

I've looked at lots of job offers in Sensor Fusion to prepare a relevant article, and here are the most common requirements:

- Experience with Multi-Sensor Fusion

- Hands-on experience with radar, lidar, camera, or ultrasonics and the sensor data

- Hands-on experience with Kalman Filters (Extended/Unscented) or any other type of Bayesian Filtering

- Solid Understanding of 3D Computer Vision — Projections, Intrinsics & Extrinsics

- Strong Python / C++ skills

- Deep Learning & Deployment on embedded platforms

If you want to learn how sensor fusion works, and how to become a sensor fusion engineer, you'll likely need to learn these 6 skills.

To help you acquire them, and assuming you can already code in Python or C++, I've put together my own roadmap, based on my experience, for building a strong Sensor Fusion profile.

We're first going to learn about the sensors, then get an overview of the sensor fusion algorithms, then we'll explore 3D projections, Kalman Filters, Deep Learning, and see how to build a good-looking project!

After we've been through these steps, we'll summarize and I'll share course recommendations.

So let's begin:

Step 1: Learn about the Sensors

One of my favourite movies is Mission: Impossible III. In this movie, Ethan Hunt (Tom Cruise) tries to find his wife and a nuclear weapon, both of which have been "taken" by the cunning Owen Davian (Philip Seymour Hoffman 🙌🏼).

And to solve this Impossible Mission (and lots of others), Ethan needs a team: there is his old friend Luther, the IT guy Benji, background operative Zhen, and transportation expert Declan. Each of them brings specific skills needed to solve the mission.

Similarly, what makes a self-driving car work is its ability to gather a team of sensors. Each sensor has its own role and advantage. For example, RADARs can naturally measure velocities and can work extremely well under bad weather conditions. And cameras are naturally good at showing us the scene and context.

If you want to learn Sensor Fusion, you'll first need to understand more about your sensors. Just like the IMF agents need to know each other very well, you need to know each sensor individually before you can fuse them.

So here are 3 things to understand about each sensor:

- Purpose: What are its main strengths and weaknesses, and why does it exist? When should you use it, and when not?

- Raw Data: What is the data coming out of the sensor?

- Output: What are the main outputs people usually get when processing these sensors?

For example, we need a LiDAR because it measures distances accurately. Its raw data is a point cloud, and the usual output after processing is a segmented point cloud or 3D bounding boxes (you'd need to go into more detail; this is merely an example).
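To give a feel for what "raw data" means here, below is a small sketch in Python with NumPy. It assumes a point cloud stored as one row per point with x, y, z (in meters) and an intensity value; the exact layout depends on your sensor and driver, and the numbers are made up for illustration.

```python
import numpy as np

# Hypothetical LiDAR frame: one row per point, columns are x, y, z (meters)
# and intensity. Real sensors return many thousands of points per frame.
point_cloud = np.array([
    [3.0, 4.0, 0.0, 0.8],
    [6.0, 8.0, 0.0, 0.5],
    [0.0, 0.0, 2.0, 0.1],
])

def point_ranges(points: np.ndarray) -> np.ndarray:
    """Euclidean distance from the sensor origin to each point."""
    return np.linalg.norm(points[:, :3], axis=1)

ranges = point_ranges(point_cloud)
print(ranges)
```

Doing this kind of small exploration for each sensor (what are the columns, what are the units, what is the coordinate frame) is a quick way to answer the "Raw Data" question above.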

Next:

Step 2: Understand the 9 Types of Sensor Fusion Algorithms

Shortly after the invention of photography in 1839, the desire to show overviews of cities and landscapes prompted photographers to create panoramas. The process involved placing two or more daguerreotype plates (the earliest practical photographs) side-by-side. And the result looked like this:

You might not realize it, but this process of "image stitching" is Sensor Fusion. In fact, it's a type of fusion we call "complementary fusion".

Understanding the range of possible fusion algorithms is the logical next step on your roadmap. There are tons of possibilities when doing sensor fusion, and it's crucial to start with the overview. There are 3 ways to classify sensor fusion algorithms, so let me briefly show them:

- Fusion By Abstraction: Low-Level Sensor Fusion, Mid-Level Sensor Fusion, High-Level Sensor Fusion

- Fusion By Centralization: Centralized Fusion, Decentralized Fusion, Distributed Fusion

- Fusion By Competition: Competitive, Complementary, Coordinative

If you want to learn about these types of sensor fusion, rather than jumping into Kalman Filter classes, start by understanding these 9. You can do that in this article on my blog.

Only once you have a solid understanding of the Sensors, and of the types of Fusion, should you go to the next steps.

Steps 3 to 5 are to be followed in no specific order:

Step 3: Master Projections & 3D

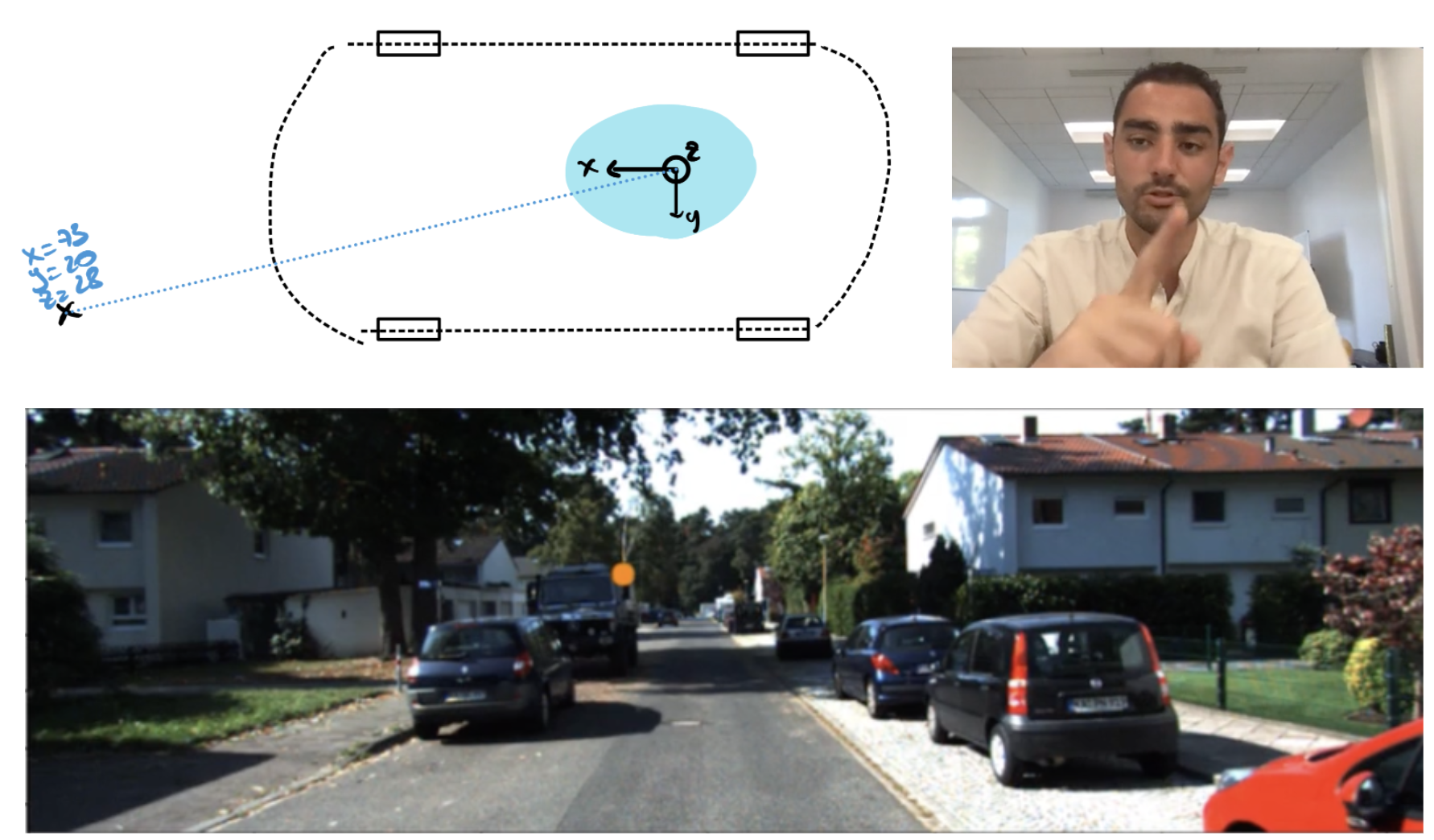

Back when I was working on autonomous shuttles, I was once tasked with a difficult mission: to combine 3D Bounding Boxes coming from a LiDAR with 2D Bounding Boxes coming from a camera.

And right at the beginning, I hit a blocking point: "How do I get 2D camera boxes and 3D LiDAR data on the same page?"

Knowing how 2D Object Detection works is nice. Knowing how 3D object detection works is even nicer, but navigating between 2D and 3D is the ultimate skill to have as a Sensor Fusion Engineer.

And for that, you'll need to learn about Projections.
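To make this concrete, here is a minimal sketch of a pinhole projection in Python with NumPy. It's an illustration under assumptions, not a production pipeline: the intrinsic matrix K, the extrinsics R and t, and the function name project_lidar_to_image are all made up for the example, and the identity extrinsics assume the points are already expressed in camera-style axes (z pointing forward).

```python
import numpy as np

def project_lidar_to_image(points_lidar, K, R, t):
    """Project Nx3 LiDAR points into pixel coordinates.

    K: 3x3 camera intrinsic matrix.
    R, t: extrinsics mapping LiDAR coordinates into the camera frame
          (X_cam = R @ X_lidar + t).
    Returns (pixels, depths) for the points in front of the camera only.
    """
    points_cam = points_lidar @ R.T + t        # LiDAR frame -> camera frame
    in_front = points_cam[:, 2] > 0            # drop points behind the camera
    points_cam = points_cam[in_front]
    homogeneous = points_cam @ K.T             # apply the intrinsics
    pixels = homogeneous[:, :2] / homogeneous[:, 2:3]  # divide by depth
    return pixels, points_cam[:, 2]

# Made-up calibration values, for illustration only.
K = np.array([[700.0, 0.0, 640.0],
              [0.0, 700.0, 360.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)       # assumption: LiDAR and camera axes are already aligned
t = np.zeros(3)     # assumption: both sensors share the same origin

points = np.array([[0.0, 0.0, 10.0],    # straight ahead, 10 m away
                   [1.0, 0.5, 10.0],    # slightly off-center
                   [0.0, 0.0, -5.0]])   # behind the camera, gets dropped
pixels, depths = project_lidar_to_image(points, K, R, t)
print(pixels)
print(depths)
```

With real sensors, R and t come from an extrinsic calibration and K from an intrinsic calibration; most of the difficulty is in getting those right, not in the few lines of matrix math.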

In my course Visual Fusion, which is one of the most popular courses on the Think Autonomous platform, one module is about projecting point clouds into camera images.

This is the kind of sensor fusion algorithm that is super frequent in the autonomous vehicle space, and that I recommend you try and build. It's usually called Early Fusion, because we're fusing raw data (point clouds and pixels).

Here's what it looks like:

When you're learning about these, you're also learning about all the geometry, intrinsic and extrinsic calibration, 3D-2D conversions (and vice-versa), and also about Bounding Box fusion.
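As an illustration of the Bounding Box fusion part, here is one possible sketch in Python: once the corners of a 3D LiDAR box have been projected into the image, you can enclose them in a 2D box and compare it with a camera detection using Intersection over Union (IoU). The function names and pixel values are hypothetical, and IoU matching is only one common way to associate boxes, not necessarily the one you'll use in every project.

```python
import numpy as np

def box_from_projected_corners(corners_px):
    """Axis-aligned 2D box (x1, y1, x2, y2) enclosing projected 3D box corners."""
    x1, y1 = corners_px.min(axis=0)
    x2, y2 = corners_px.max(axis=0)
    return np.array([x1, y1, x2, y2])

def iou(box_a, box_b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

# Made-up pixel coordinates of the 8 corners of a projected 3D LiDAR box.
corners_px = np.array([
    [100.0, 100.0], [200.0, 100.0], [200.0, 200.0], [100.0, 200.0],
    [120.0, 110.0], [180.0, 110.0], [180.0, 190.0], [120.0, 190.0],
])
lidar_box = box_from_projected_corners(corners_px)

# Made-up 2D detection from the camera.
camera_box = np.array([150.0, 100.0, 250.0, 200.0])

overlap = iou(lidar_box, camera_box)
print(overlap)
```

A typical next step would be to compute this score for every LiDAR/camera pair and keep the pairs above a chosen threshold, so that each fused object carries both the camera's class and the LiDAR's distance.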

If you are to work on Sensor Fusion, you cannot avoid the 3rd dimension, and this is something you can start doing in my Visual Fusion course: https://courses.thinkautonomous.ai/visual-fusion

Step 4: Dive in Kalman Filters

If you have a LiDAR telling you that a pedestrian is 12 meters ahead, and a RADAR telling you it's actually 13 meters, which sensor would you trust?

Would you average? Maybe.

Now, what if I told you that your LiDAR is more precise than your RADAR? Would you still average? Consider just the LiDAR? Introduce coefficients?

Because self-driving cars use different sensors, they have to deal with these conflicting outputs, and they almost always rely on Kalman Filters for this process.
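The "introduce coefficients" idea can be sketched in a few lines of Python: weight each sensor by how much you trust it, using the inverse of its noise variance. This is what the measurement update of a Kalman Filter reduces to in the simplest one-dimensional, static case. The variances below are assumptions chosen for the example, not real sensor specifications.

```python
def fuse_measurements(z1, var1, z2, var2):
    """Variance-weighted fusion of two measurements of the same quantity.

    The less noisy measurement (smaller variance) gets the larger weight.
    """
    w1 = var2 / (var1 + var2)
    w2 = var1 / (var1 + var2)
    fused = w1 * z1 + w2 * z2
    fused_var = (var1 * var2) / (var1 + var2)
    return fused, fused_var

# Assumed noise levels: LiDAR std of 0.2 m, RADAR std of 0.6 m.
lidar_distance, lidar_var = 12.0, 0.2 ** 2
radar_distance, radar_var = 13.0, 0.6 ** 2

fused, fused_var = fuse_measurements(
    lidar_distance, lidar_var, radar_distance, radar_var
)
print(fused, fused_var)
```

With these assumed variances, the fused distance lands at about 12.1 meters, much closer to the LiDAR than a plain average would be, and the fused variance is smaller than either sensor's own variance. That second point is the real argument for fusing instead of just picking the best sensor.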

Most late fusion algorithms (when we fuse the output of the algorithms) rely on Extended Kalman Filters or Unscented Kalman Filters. These are non-linear types of filters you should learn, but not before you've been through the vanilla Kalman Filter.

So, try to do a lot of linear filtering first, try to change examples, do some tracking, some time series predictions, etc...
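As a starting point for that kind of practice, here is a minimal sketch of a linear Kalman Filter in Python with NumPy, tracking position and velocity in one dimension from position-only measurements. The constant-velocity model, the noise matrices Q and R, the initial state, and the measurements are all assumptions made up for the example (the measurements are noise-free so the run is reproducible).

```python
import numpy as np

def kalman_predict(x, P, F, Q):
    """Propagate the state and its covariance through the motion model."""
    x = F @ x
    P = F @ P @ F.T + Q
    return x, P

def kalman_update(x, P, z, H, R):
    """Correct the prediction with a measurement z."""
    y = z - H @ x                          # innovation
    S = H @ P @ H.T + R                    # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)         # Kalman gain
    x = x + K @ y
    P = (np.eye(len(x)) - K @ H) @ P
    return x, P

dt = 1.0
F = np.array([[1.0, dt],       # position += velocity * dt
              [0.0, 1.0]])     # velocity stays constant
H = np.array([[1.0, 0.0]])     # we only measure position
Q = 0.01 * np.eye(2)           # assumed process noise
R = np.array([[0.25]])         # assumed measurement noise (std of 0.5 m)

x = np.array([0.0, 0.0])       # initial guess: at rest at the origin
P = 10.0 * np.eye(2)           # large initial uncertainty

# Positions of an object moving at 1 m/s, one measurement per second.
measurements = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
for z in measurements:
    x, P = kalman_predict(x, P, F, Q)
    x, P = kalman_update(x, P, np.array([z]), H, R)

print("position:", x[0], "velocity:", x[1])
```

Even though velocity is never measured, the filter's velocity estimate should approach 1 m/s after a few steps. Good exercises from here are adding noise to the measurements, changing Q and R, and watching how the estimates react.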

You can learn to do that first part here: https://courses.thinkautonomous.ai/kalman-filters

So far, we have a roadmap with these steps:

- Understand the main sensors, the elements of your Sensor Fusion team

- Understand the Sensor Fusion Algorithms, Types, and Techniques

- Understand 2D-3D Projections and Early Fusion

- Understand Kalman Filters and Late Fusion

The next step is to build a Sensor Fusion project. At this point, you've probably already built projects when learning about projections or Kalman Filters, but I still recommend you go through this step:

Step 5: Build your own sensor fusion project

For this step, you can go with recorded data, simulated data, or real sensors. Let's see what each option means:

1. Going with Recorded sensor Data

There are lots of places where you can find recorded data. For example, in my ROS Course, I share a bag containing a self-driving car recording with GPS, LiDAR, camera, and even RADAR. In Cruise's Webviz platform, you have access to a recording and can plug your algorithm into it and visualize the results (it's not working today on my end, not sure why).

In this case, the main thing to know is how to interface with either ROS or the platform you're using. I don't recommend loading txt files with LiDAR and RADAR data.

2. Going with simulated sensor data

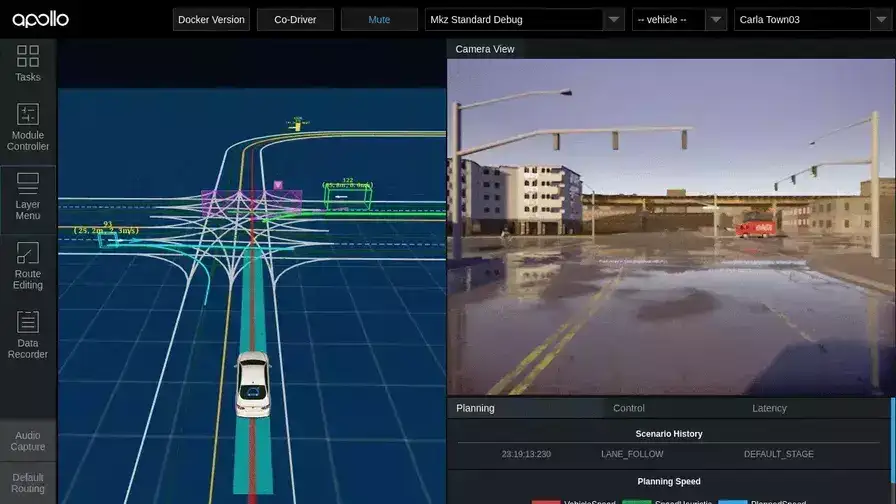

The second option is going with simulated data. For example, you can plug into the CARLA simulator, and start driving there. The simulator already emulates sensors, so you only need to connect to the output and build your algorithm there.

That said, I don't think I'd recommend CARLA. At least not until you've been through Option 1 first, and not unless you have a really good computer.

3. Buying your own sensors

The ultimate way to learn is to get hands-on experience. This is also what the job offers list. "Hands-On Experience" means you can't stay on the cloud forever. Purchasing a sensor such as a real LiDAR or a camera is part of the journey.

There are lots of sensors you can purchase at a low cost, like the RP LiDAR A1 or the OAK-D S2 camera. The output will have a much lower resolution, but it really complements your learning.

Finally, after you've built your project, you can explore the dark side of Sensor Fusion: Deep Learning.

Step 6: Explore Deep Sensor Fusion for Automotive perception systems

Today, many self-driving car companies use Deep Learning for Sensor Fusion tasks. Some use Transformer Networks. Others use 3D Convolutions. There are so many possibilities, but what matters is that you start reading about it and discovering ways to fuse data with Deep Learning.

If you want to learn more about it, I have an entire article about how Aurora uses Deep Learning for Sensor Fusion.

Alongside that, I recommend understanding how to use real-time algorithms, how to optimize an infrastructure, how to run a sensor fusion toolbox, and how to work on embedded devices.

Summary: Recommended Sensor Fusion Engineer Path

We've seen the 6 logical steps to learn about sensor fusion: learn about the sensors, learn about the fusion, learn 3D-2D projections, learn Kalman Filters, build a project, and explore Deep Learning.

How can you do that?

Here is a list of selected sensor fusion courses and articles for each of these:

- My course THE SELF-DRIVING CAR ENGINEER SYSTEM has an opening chapter on sensors, and it perfectly details all the sensors you need to learn. I'd recommend you start here anyway if you're planning a journey in self-driving cars.

- My Point Clouds Conqueror Course provides hands-on experience with LiDAR; it might be a good addition to the previous course.

- My article 9 Types of Sensor Fusion Algorithms will help you with the overview of the algorithms.

- My course LEARN VISUAL FUSION is my go-to to learn how to fuse a camera and a LiDAR.

- My course LEARN KALMAN FILTERS will give you a solid understanding of the linear Kalman Filter. For the advanced Kalman Filtering part, an extension to this course is coming.

- My article on Deep Sensor Fusion at Aurora should give you an introduction to Sensor Fusion with Deep Learning.

To go further, you can also try:

- The Sensor Fusion Nanodegree from Udacity; a great course — some important details are omitted, but it's the only place in the world where you'll learn about RADARs, and it does talk about advanced Kalman Filters (see my review here).

- The book Probabilistic Robotics by Sebastian Thrun, Wolfram Burgard, and Dieter Fox: it's really essential if you want to get to the expert level. I'm not linking to it, but there are PDF versions a Google search away.

- MATLAB's YouTube Channel on Sensor Fusion: MATLAB produces a lot of great educational content, highly recommended.

- My other posts on Sensor Fusion in this blog.

Alright, with these skills, I think you'll build a serious and interesting profile in Sensor Fusion.

Good luck,

Jeremy