Robot Mapping for Self-Driving Cars (3 Steps to create HD Maps)

Imagine you were asked to launch a self-driving car service in your neighborhood by next month. What would your first instinct be? Think about it. What does it look like? Is it a house in the mountains? Or are you on a busy street?

Do you know what my first instinct would be? It would be to take my car, and drive around this place that I know by heart. And then, I would take notes. Lots of them. I would look at the streets, the possible difficult areas, bridges, traffic signs and lights, places with no GPS signal, etc... and do a heavy "terrain analysis" for days.

In short... I would create a map! Mapping is the essential first step of an autonomous vehicle, because without a map you can't drive. It's very common with self-driving cars, but it's also a very important and common step in robotics too.

So how does robot mapping work? What are the main steps? Things we should know? Let me take you on the autonomous shuttle journey, and explain to you the core concepts.

In this post, I don't want to do an "overview" of all the mapping solutions and companies available, but rather show a "raw" approach to mapping. And this approach is going to happen in 3 steps:

- GPS Tour — We begin by taking a tour of a place, and using the GPS data, looking at Google Maps and looking at the area we'll drive in.

- Mapping — We convert our Google Map into a grid, a graph, or any more specific format that our algorithms will be able to work on.

- HD Mapping — We add elements, such as signs, traffic lights, speed limits, etc...

Step 1 — GPS Tour

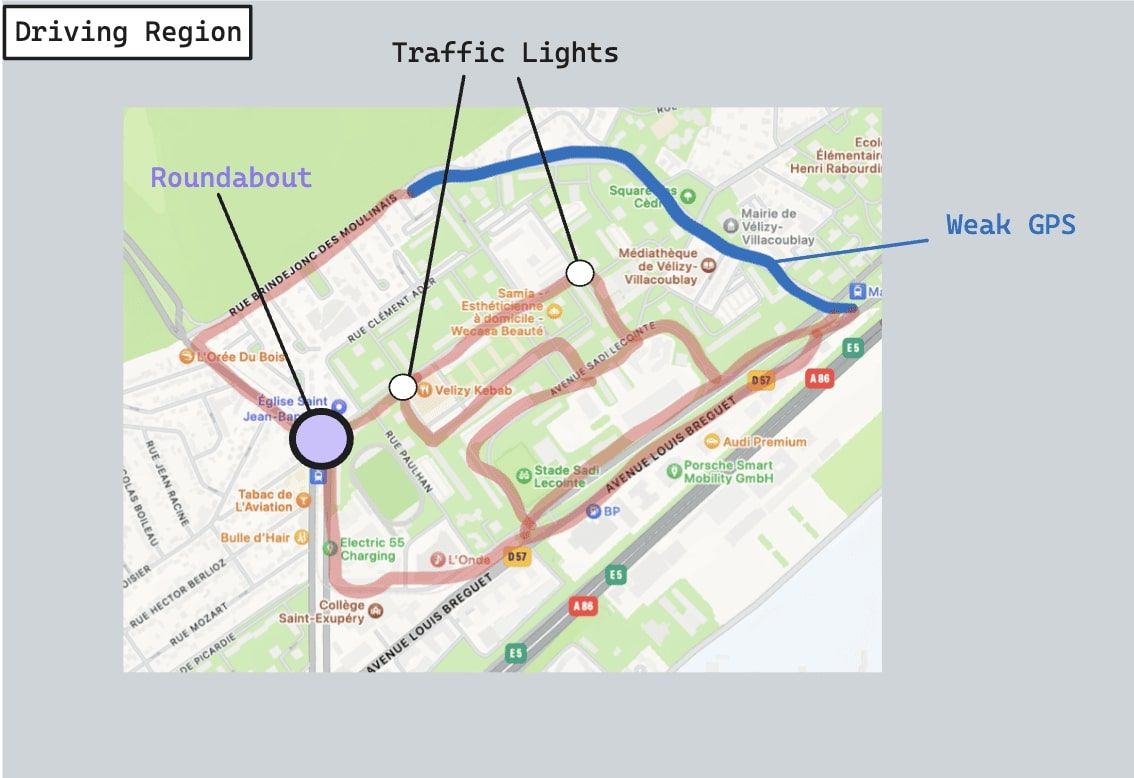

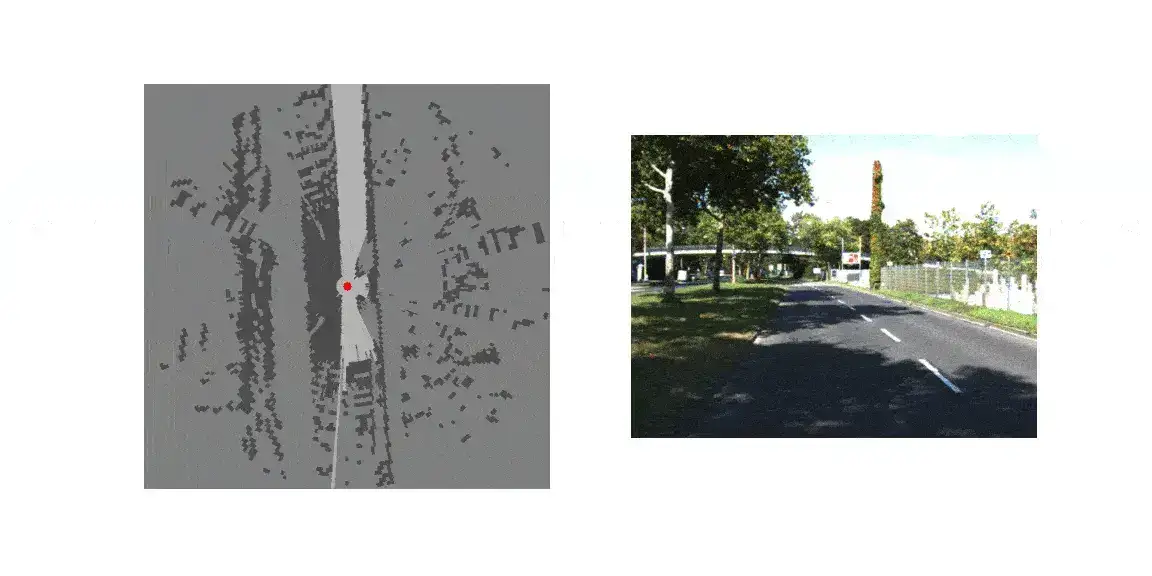

As you noticed, our first step is very high-level. We just want to take a look at Google Maps, do some laps, record some data, and wonder "What do we have here?"

Back when we launched in a new city, we began by driving a specific loop, and tried to "note" the main elements:

- What are the roads and streets you'll be taking?

- Are there any notable challenges? (traffic lights, roundabouts, lane merging, ...)

- Is it an open road with many vehicles and people or a closed road?

We may have to drive the loop several times, in different weather conditions, just to make sure that the GPS stays stable the entire time. We also want to make sure we haven't missed anything before adding all of it to our map.
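To make "is the GPS stable?" a bit more concrete, here is a minimal sketch of how a recorded lap could be screened for signal gaps and position jumps. The log format (a list of `(timestamp_s, lat_deg, lon_deg)` tuples), the function names, and the thresholds are all assumptions made for illustration; a real GPS log would also carry fix quality, HDOP, and so on.

```python
import math

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    r = 6371000.0  # mean Earth radius in meters
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))

def find_unstable_segments(fixes, max_gap_s=2.0, max_speed_mps=40.0):
    """Flag steps between consecutive fixes that look suspicious.

    fixes: list of (timestamp_s, lat_deg, lon_deg), sorted by time (assumed format).
    Returns a list of (index, reason) where reason is "gap" or "jump".
    """
    flagged = []
    for i in range(1, len(fixes)):
        t0, lat0, lon0 = fixes[i - 1]
        t1, lat1, lon1 = fixes[i]
        dt = t1 - t0
        if dt <= 0 or dt > max_gap_s:
            # No fix for too long (tunnel, urban canyon, ...) or bad timestamps
            flagged.append((i, "gap"))
            continue
        speed = haversine_m(lat0, lon0, lat1, lon1) / dt
        if speed > max_speed_mps:
            # The position moved faster than the car plausibly could
            flagged.append((i, "jump"))
    return flagged

# Hypothetical lap: one plausible step, one position jump, one signal gap
lap = [
    (0.0, 48.85800, 2.29400),
    (1.0, 48.85805, 2.29400),
    (2.0, 48.85900, 2.29400),
    (8.0, 48.85905, 2.29400),
]
print(find_unstable_segments(lap))
```

Running this over several laps (and several weather conditions) gives a rough list of places where GPS alone probably won't be enough.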

Once we have that map of the notable elements, we can start thinking about Perception and Planning. And notice how we don't start with Perception. Although the usual logic chart puts Perception first, in reality localization, and especially the Mapping step, is where it all starts. Why spend time on a lane line detection system if we'll drive on roads without lines?

Similarly, we should try to answer as many questions as we can: Do we need a traffic light detector? Is there a tunnel? Do we need ultra-wideband? Is GPS stable over the entire region? Will a single GPS be enough? Should we build a specific localization algorithm based on lane line detection? Do we need to detect obstacles?

All these questions will feed our Perception and Localization algorithms, and help us decide whether we need a given algorithm or not.

Note: If using a robot or a drone, this first process will be different, but you still need to think about the environment you'll drive in "on paper" before doing any kind of real algorithmic mapping. This is discussed further about two-thirds of the way through the article.

Once we know where we'll drive, we can move to step 2.

Step 2 — Mapping Format

The second step is mapping. By mapping, I mean building a real map that the self-driving car will read and use to drive on. But what type of map can we build? Here are a few that exist:

- Feature Maps

- Occupancy Maps

- Point Cloud Maps

- Vector Maps

- Raster Maps

- Other types of Maps

Feature Maps

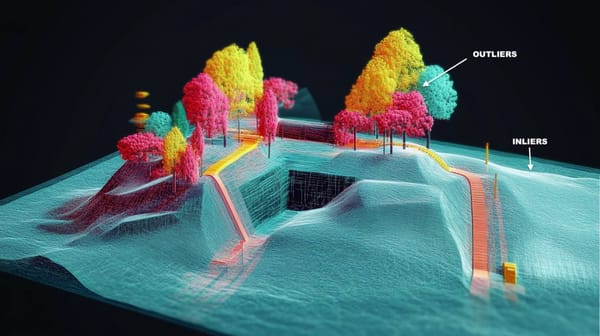

The first type happens when you detect features, like corners and edges, and convert that into a map. Features can be point clouds (if using a LiDAR), or visual features like corners and edges if using a camera. Using Sensor Fusion (the science of fusing sensor data), we could also fuse point clouds and images, or filter some elements.

Occupancy Maps

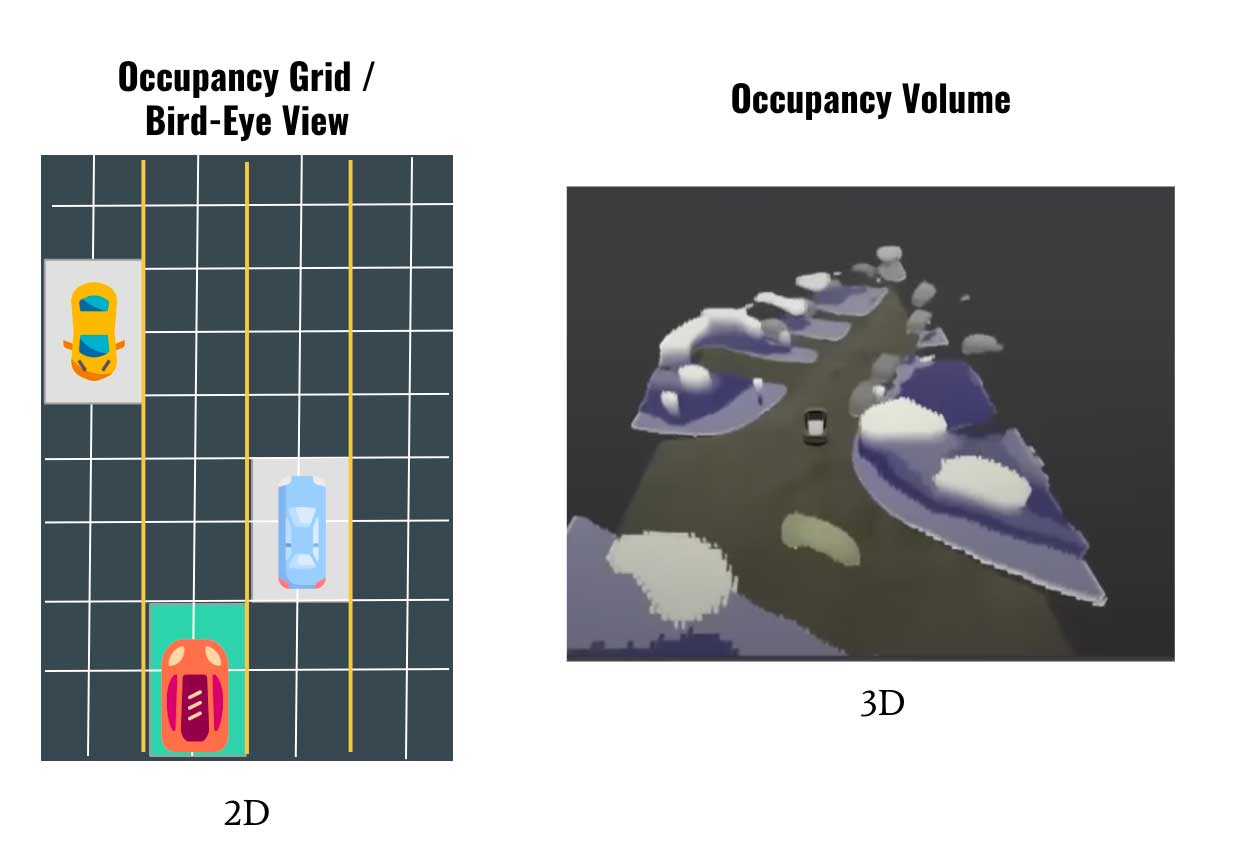

In occupancy-based mapping, we want to assign values like drivable/non-drivable to specific locations. The most popular algorithm is Occupancy Grid Mapping, in which we discretize the world into cells, and then assign a value to each cell (occupied or free).
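Here is a minimal sketch of that discretization, assuming we already have a list of obstacle positions in meters. It is the simplest binary version; real Occupancy Grid Mapping usually keeps a probability per cell and updates it from sensor readings. The function name and parameters are made up for this example.

```python
def build_occupancy_grid(obstacles_xy, width_m, height_m, cell_size_m):
    """Discretize a width_m x height_m area into cells: 1 = occupied, 0 = free."""
    cols = round(width_m / cell_size_m)
    rows = round(height_m / cell_size_m)
    grid = [[0] * cols for _ in range(rows)]
    for x, y in obstacles_xy:
        # Find which cell this obstacle falls into
        col = int(x // cell_size_m)
        row = int(y // cell_size_m)
        # Ignore obstacles outside the mapped area
        if 0 <= row < rows and 0 <= col < cols:
            grid[row][col] = 1
    return grid

# A 2 m x 2 m area with 0.5 m cells; the last obstacle is outside the map
obstacles = [(0.2, 0.2), (1.3, 0.7), (1.9, 1.9), (5.0, 5.0)]
grid = build_occupancy_grid(obstacles, width_m=2.0, height_m=2.0, cell_size_m=0.5)
for row in grid:
    print(row)
# [1, 0, 0, 0]
# [0, 0, 1, 0]
# [0, 0, 0, 0]
# [0, 0, 0, 1]
```

The cell size is the main design choice: smaller cells give a finer map, but memory grows quadratically in 2D.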

The 3D version of Occupancy Grid Mapping is what Tesla does with 3D Occupancy Networks, where rather than 2D cells, they use 3D voxels:

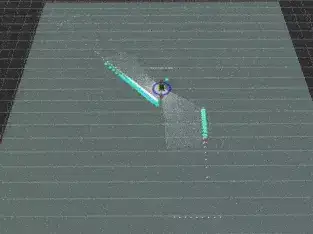

Point Cloud Maps

Another type of map can be built entirely using LiDAR. It's similar to converting visual features into a map, but this time, each of the points we detect is a feature. We then turn the features into a map.

Here's an example of building a point cloud map:

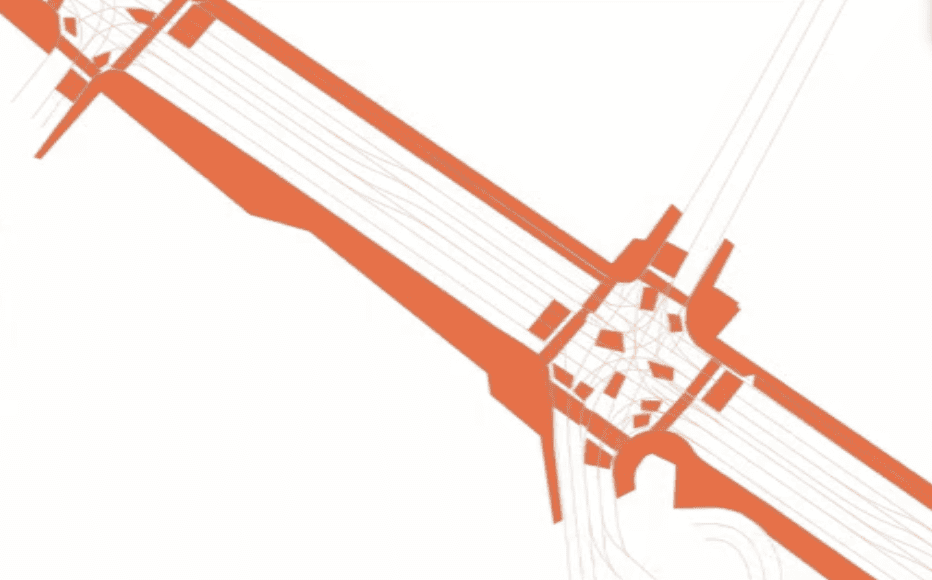
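Raw point cloud maps get huge very quickly, so a common trick is to group the points into 3D voxels and keep one point per voxel. The sketch below shows a simple voxel-grid downsampling in plain Python; the function name and the choice of the centroid as the representative point are assumptions for illustration, and libraries such as PCL or Open3D offer optimized equivalents.

```python
from collections import defaultdict

def voxel_downsample(points, voxel_size):
    """Group 3D points by voxel and return {voxel_index: centroid}."""
    buckets = defaultdict(list)
    for x, y, z in points:
        # Integer voxel index along each axis
        key = (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        buckets[key].append((x, y, z))
    centroids = {}
    for key, pts in buckets.items():
        n = len(pts)
        # Average each coordinate over the points in this voxel
        centroids[key] = tuple(sum(axis) / n for axis in zip(*pts))
    return centroids

# Three LiDAR points; the first two share the same 1 m voxel
cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.2, 0.0, 0.0)]
voxels = voxel_downsample(cloud, voxel_size=1.0)
print(len(cloud), "points ->", len(voxels), "voxels")
```

The same voxel indexing is what turns a 2D occupancy grid into a 3D one: instead of storing a centroid, you would store an occupied/free value per voxel.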

Vector Maps

Probably the most popular type of map in autonomous driving is a Vector Map. The key idea is that you are going to find "vectors", which are nothing but arrays of numbers. These numbers represent values for lane lines, static objects, road curves, etc...

In vector maps, we reduce the problem to finding polylines. What we often do is annotate these maps manually with dedicated software like QGIS.

The thing with these maps is that you can add points, lines, and polygons everywhere you like. Therefore, you can decide that between node 10 and node 20, we have to drive at x speed, and that at node 21, you have a traffic light, etc...
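To picture it, here is a toy vector map with nodes, one lane polyline, a speed limit, and a traffic light. The class and field names are invented for this sketch and don't follow any real format (Lanelet2, OpenDRIVE, etc.); the node numbers just mirror the example above.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    node_id: int
    x: float
    y: float
    tags: dict = field(default_factory=dict)  # e.g. {"type": "traffic_light"}

@dataclass
class Lane:
    node_ids: list          # ordered node ids forming the polyline
    speed_limit_kph: float

class VectorMap:
    def __init__(self):
        self.nodes = {}
        self.lanes = []

    def add_node(self, node_id, x, y, **tags):
        self.nodes[node_id] = Node(node_id, x, y, dict(tags))

    def add_lane(self, node_ids, speed_limit_kph):
        node_ids = list(node_ids)
        missing = [n for n in node_ids if n not in self.nodes]
        if missing:
            raise KeyError(f"unknown nodes: {missing}")
        self.lanes.append(Lane(node_ids, speed_limit_kph))

    def speed_limit_at(self, node_id):
        """Speed limit of the first lane containing this node, or None."""
        for lane in self.lanes:
            if node_id in lane.node_ids:
                return lane.speed_limit_kph
        return None

vmap = VectorMap()
for i in range(10, 22):
    vmap.add_node(i, x=(i - 10) * 5.0, y=0.0)   # one node every 5 m
vmap.nodes[21].tags["type"] = "traffic_light"   # node 21 is a traffic light
vmap.add_lane(range(10, 21), speed_limit_kph=30.0)  # nodes 10 to 20 at 30 km/h

print(vmap.speed_limit_at(15), vmap.speed_limit_at(21), vmap.nodes[21].tags)
```

Because everything is just points and polylines with attributes, the map stays small and easy to edit by hand, which is a big part of why this format is popular.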

Raster Maps & Other Maps

One other big type is called a raster map.

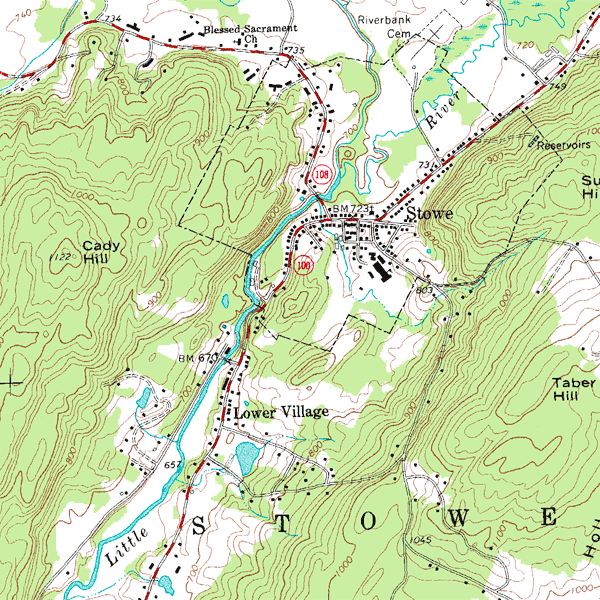

Raster maps are a type of digital map represented by a grid of pixels, or cells, where each pixel holds a value corresponding to a specific feature or measurement of the area being mapped. This format is often used to display continuous data such as elevation, land use, temperature, or color, and is characterized by its pixelated appearance at high zoom levels.

Example here:

They aren't (to my knowledge) the #1 choice in autonomous driving. So now that we know the types of maps, let's jump straight to the final piece: building an HD Map.

Step 3 — HD Mapping

This step isn't mandatory for all kinds of robots/cars, only for those that need the "HD". So what is the difference between an HD Map and... an SD Map? In short, the level of detail.

In an SD Map, we're going to get the rough information needed to drive: static obstacles, roads, and that's kinda it. In HD, we'll want to add as much information as we can, and this can go from the specific curvature of the road to the speed limit allowed at a specific position, etc...

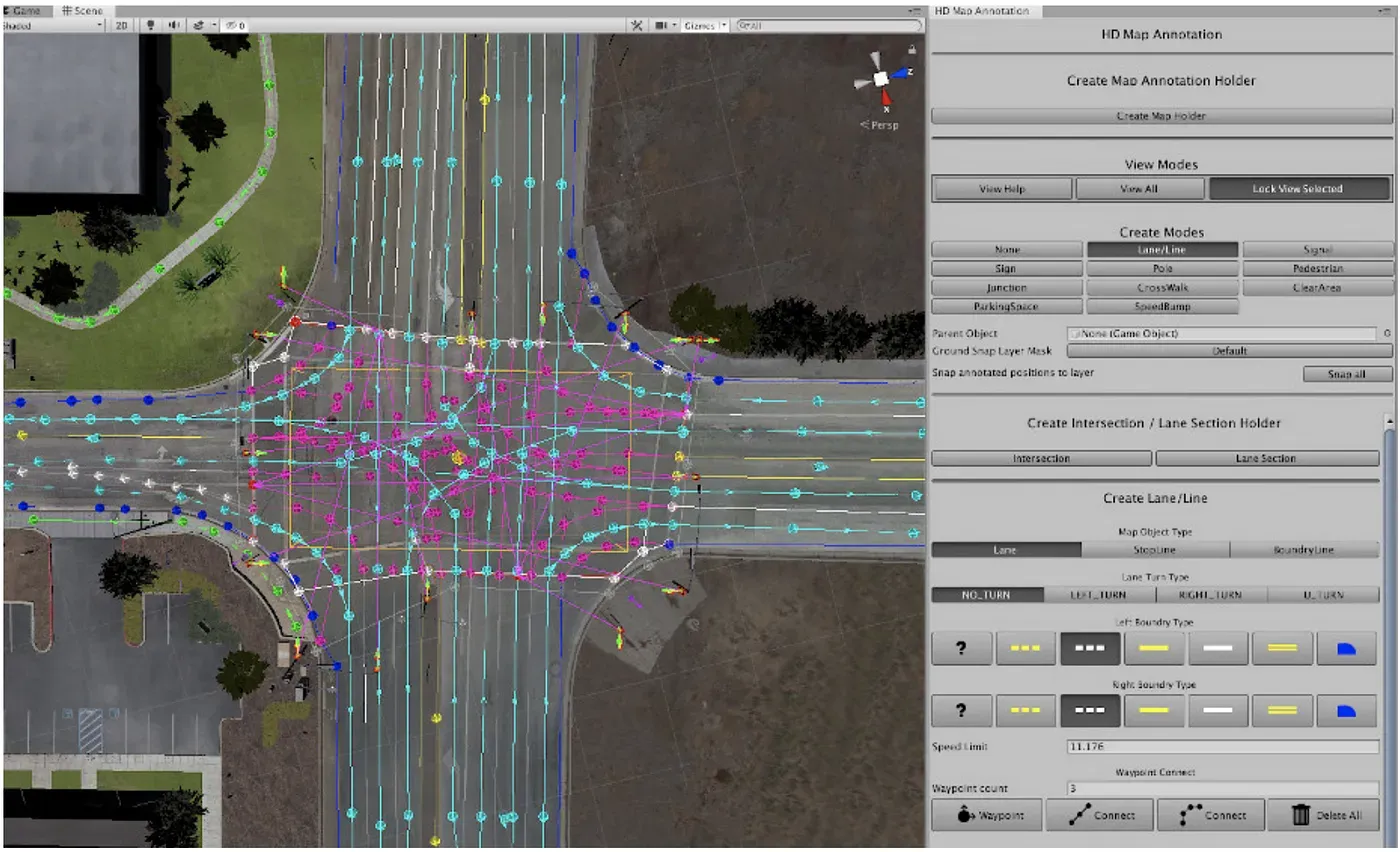
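As an example of the kind of detail an HD Map can carry, here is a sketch that estimates road curvature at each vertex of a lane polyline using the circle through three consecutive points (the Menger curvature). The function names are made up, and the test polyline is a synthetic arc; an actual HD Map tool may compute curvature differently, for instance from fitted splines.

```python
import math

def menger_curvature(p0, p1, p2):
    """Curvature (1/m) of the circle through three 2D points; 0.0 if degenerate."""
    (x0, y0), (x1, y1), (x2, y2) = p0, p1, p2
    # Twice the signed area of the triangle (p0, p1, p2)
    cross = (x1 - x0) * (y2 - y0) - (y1 - y0) * (x2 - x0)
    a = math.dist(p0, p1)
    b = math.dist(p1, p2)
    c = math.dist(p0, p2)
    if a == 0 or b == 0 or c == 0:
        return 0.0
    # curvature = 4 * area / (a * b * c), with area = |cross| / 2
    return 2.0 * abs(cross) / (a * b * c)

def polyline_curvatures(polyline):
    """Curvature at every interior vertex of a polyline."""
    return [
        menger_curvature(polyline[i - 1], polyline[i], polyline[i + 1])
        for i in range(1, len(polyline) - 1)
    ]

# Synthetic lane centerline: an arc of radius 50 m, so curvature should be 1/50
arc = [(50.0 * math.cos(math.radians(d)), 50.0 * math.sin(math.radians(d)))
       for d in range(0, 50, 10)]
print([round(k, 4) for k in polyline_curvatures(arc)])
```

A value like this could then be stored as an attribute on each node of the vector map, next to the speed limit.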

You may for example use additional tools for HD Map Annotation, such as LGSVL which gives rich information like traffic lanes, lane boundary lines, traffic signals, traffic signs, pedestrian walking routes, etc.

These annotations can then be exported into formats like Apollo 5.0 HD Map, Autoware Vector Map, Lanelet2, or OpenDRIVE 1.4, so users can use the map files in their own autonomous driving stacks. By the way, some other tools are Autoware, Apollo Open Platform, HERE Maps, Camera, etc...

In tools like Autoware, it looks like this:

So this is the final step: HD Mapping.

What about Kalman Filters?

We've talked a bit about mapping in this article, but when it comes to localization, the term "Kalman Filter" almost always comes up. A Kalman Filter is an algorithm (from the artificial intelligence field, but not Deep Learning) that can fuse data, cope with missing or uncertain data, and estimate a state continuously over time.

Kalman Filters, as well as many other probabilistic robotics algorithms (particle filters, SLAM, ...), are part of mapping... Yet, they can also come up a bit later in the localization step. For example, Extended Kalman Filters have been heavily used in mobile robots, and also in self-driving cars.
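To give a feel for the predict/update loop, here is a minimal 1D Kalman Filter that estimates a single static value (say, the position of a landmark along one axis) from noisy measurements. The variances and measurements are invented for the example, and a `None` measurement stands for a missing reading; a real localization filter would track a multi-dimensional state with a motion model.

```python
def kalman_1d(measurements, meas_var, process_var, x0=0.0, p0=1000.0):
    """Estimate a scalar state from noisy measurements.

    Returns a list of (estimate, variance) after each step.
    A measurement of None means "no data": we only predict.
    """
    x, p = x0, p0  # initial estimate and its (large) uncertainty
    history = []
    for z in measurements:
        # Predict: the state is assumed static, but uncertainty grows a little
        p = p + process_var
        if z is not None:
            # Update: blend prediction and measurement using the Kalman gain
            k = p / (p + meas_var)
            x = x + k * (z - x)
            p = (1.0 - k) * p
        history.append((x, p))
    return history

readings = [5.1, 4.9, None, 5.0, 5.2]  # hypothetical measurements in meters
history = kalman_1d(readings, meas_var=0.04, process_var=0.0001)
for step, (est, var) in enumerate(history):
    print(step, round(est, 3), round(var, 4))
```

Notice that the variance shrinks with every measurement and grows slightly on the step where the reading is missing; that is the "estimate a state continuously over time" part.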

Is Robot Mapping the same as Car Mapping?

Something to think about is that maps aren't the same when mapping on a robot or a car. If the purpose is different, then the map can be different.

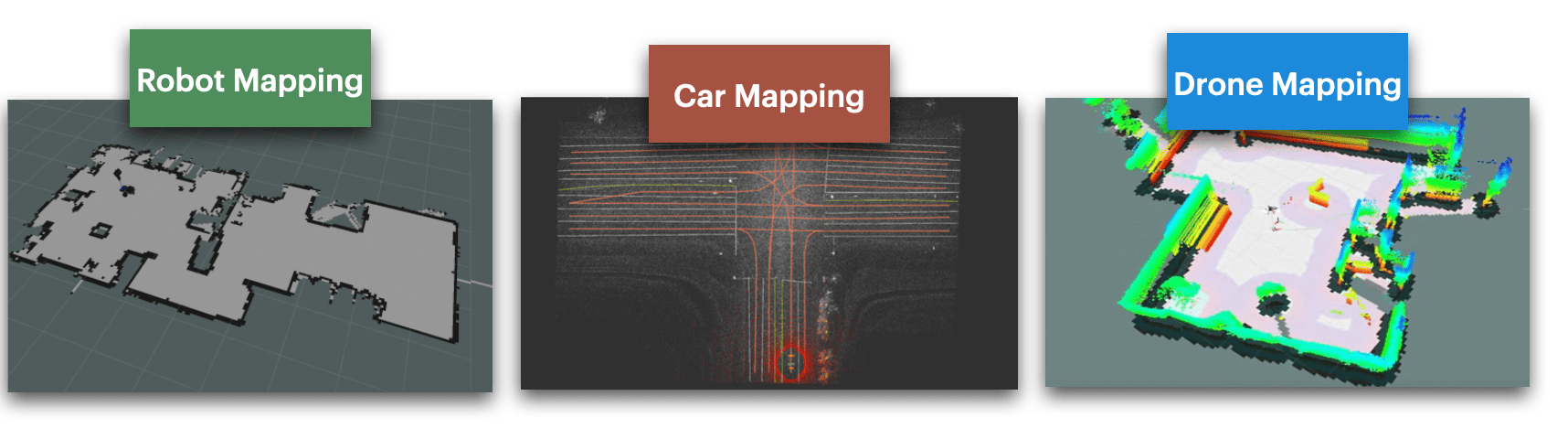

- We already saw that autonomous vehicles use HD Maps, where the main difference is the addition of lane lines as polylines, and other things, such as speed limits, speed bumps, traffic signs, etc... Now what would a robot or a drone need?

- A drone will require a different kind of map: a 3D Map. Since the drone moves in three dimensions, the map HAS to be built in 3D. Most drones today use 3D Reconstruction and Photogrammetry to recreate 3D scenes, and then turn them into an interpretable map. These maps can also include terrain or specific weather conditions.

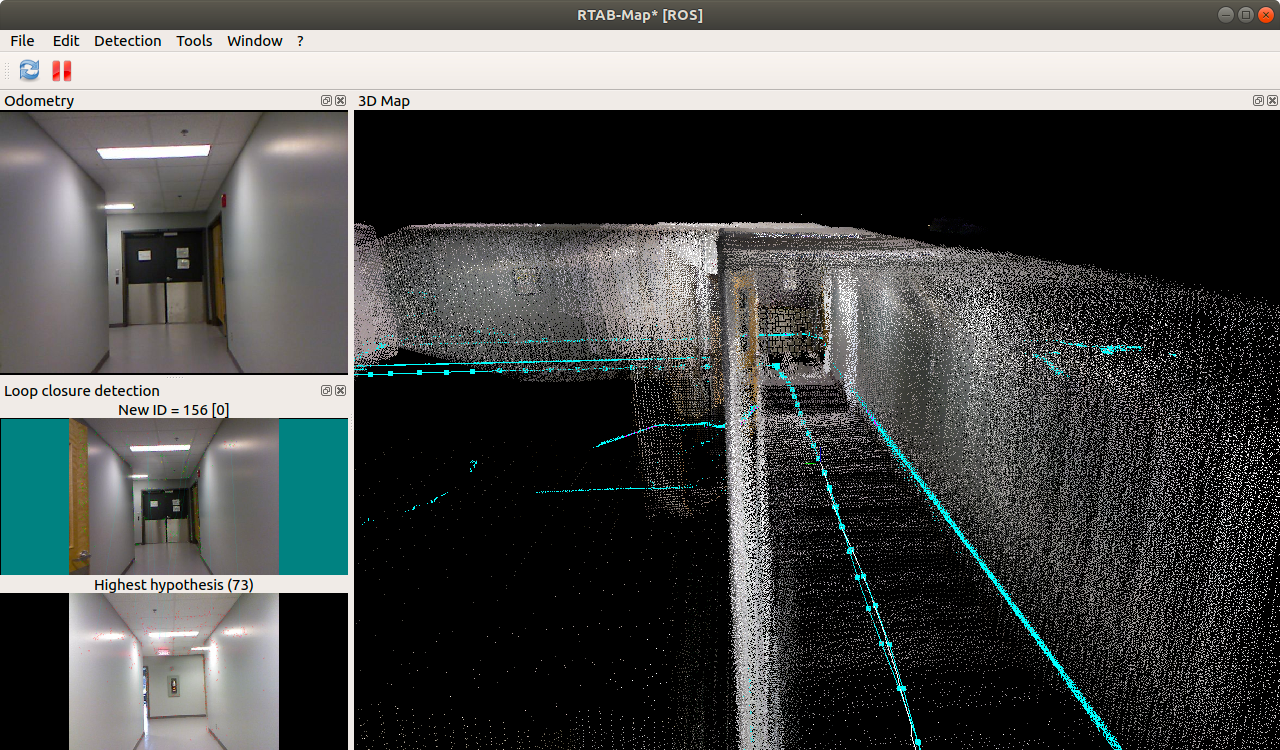

- A robot will usually be good with an SD map in 2D. Although there are exceptions, most robots use SLAM algorithms to create simple maps, and then use these maps to drive. Below are examples of the detail on these 3 types of maps.

Now, let's see some examples of mapping software and companies.

Example 1: Autoware on ROS

In this video, you can see the Autoware software doing some mapping with ROS. Autoware is open-source software that can build HD Maps using LiDAR Point Clouds and Lanelet2 topology.

Thousands of companies and mapping systems rely on it; it's likely the OpenCV of Maps. It also works with the SVL Simulator described above, which makes for a solid visualization and training tool.

Example 2: Mobileye's AV Maps

On the other hand, Mobileye is building AV Maps, which are HD Maps built from a fleet of customer vehicles. Many cars today are equipped with Mobileye technology, which provides cameras and ADAS systems to consumers and carmakers. Using the data collected by the cameras, Mobileye is creating maps that also get updated every time a user drives through an area.

Here's how it works:

- Data Collection & Transmitting: We detect objects using Computer Vision algorithms on the cameras and send that to a cloud server

- Aggregation & Alignment: We aggregate the information from every car

- Modeling & Semantics: We add as much information as possible (semantics, lane lines, ...) and compile a final map.

- Roadbook & Localization: We send that map back to the car that localizes in it.
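The aggregation step can be pictured with a toy example: several cars report the same landmark at slightly different positions, and the server merges them. The sketch below is NOT Mobileye's pipeline, just an assumed simplification where reports are bucketed by label and coarse grid cell, kept only if enough distinct cars agree, and averaged. All names and thresholds are hypothetical.

```python
from collections import defaultdict

def aggregate_landmarks(observations, cell_size_m=2.0, min_cars=2):
    """Merge per-car landmark reports into a consolidated list.

    observations: list of (car_id, label, x_m, y_m) in a shared map frame.
    Returns a list of (label, mean_x, mean_y, number_of_cars).
    """
    buckets = defaultdict(list)
    for car_id, label, x, y in observations:
        # Crude alignment: reports in the same grid cell are the same landmark
        key = (label, int(x // cell_size_m), int(y // cell_size_m))
        buckets[key].append((car_id, x, y))

    landmarks = []
    for key in sorted(buckets):
        reports = buckets[key]
        cars = {car_id for car_id, _, _ in reports}
        if len(cars) < min_cars:
            continue  # seen by too few cars: treat as unconfirmed
        n = len(reports)
        mean_x = sum(x for _, x, _ in reports) / n
        mean_y = sum(y for _, _, y in reports) / n
        landmarks.append((key[0], mean_x, mean_y, len(cars)))
    return landmarks

reports = [
    ("car_a", "traffic_light", 10.2, 5.1),
    ("car_b", "traffic_light", 10.6, 5.3),
    ("car_c", "traffic_light", 10.4, 5.2),
    ("car_a", "stop_sign", 40.0, 3.0),   # only one car saw this one
]
print(aggregate_landmarks(reports))
```

Grid bucketing breaks down when a landmark sits on a cell boundary, so a production system would use proper clustering and alignment; the point here is only that more cars means a more confident, more precise map.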

These AV maps have been built using their "Road Experience Management" mapping system. Mobileye now claims that, since it knows the driving decisions of all its users, it no longer needs to build lane lines. Where the fleet goes, the cars will go.

Unlike HD Maps, which are a manual process, this approach is:

- Scalable (done by the fleet)

- Automated (done all the time)

- Real-Time (if there is roadwork, blocked streets, etc...)

- Evolving (cars can detect new things, add new elements, etc...)

Ok, we covered quite a bit of information. Let's do a summary.

Summary

- In Robotics, a map is the representation of the environment that tells the robot where it is, and where to drive. Today, most self-driving cars use a map to drive, even a really basic one.

- There are 3 main steps when mapping: GPS Driving (observation), Mapping (creation), HD Mapping (customization).

- GPS driving is the idea that if you want to build a map of a region, you first need to drive in it, explore, and collect some information, such as the speed limits, forbidden roads, traffic lights, etc...

- There are several types of maps, from vector maps (a set of polylines, points, ...), to raster maps (2D images), to point cloud maps (LiDARs), to occupancy maps, to feature maps (processing visual information like features). Depending on the type of map, the algorithm will differ.

- Kalman Filters can be used when building maps (SLAM, ...), but often come in the localization step.

- HD Mapping can be done with mapping, but we usually use additional annotation tools like LGSVL to enrich information.

- A map can have many definitions, depending on the device it is used on. A drone map will be very different from an indoor robot map, or even an outdoor robot map, because it works in 3D, usually only with cameras.

Next Steps

- If you want to learn more about mapping, I invite you to check out Autoware, which is building an open source self-driving car stack, and has lots of documentation on maps and mobile robotics in general.

- To learn more about localization systems in autonomous driving you can read this article on Self-Driving Car Localization.

- The logical next step after Localization is Path Planning. I highly recommend reading my last article on High Level vs Low Level Motion Planning in Self-Driving cars.

But the bigger next step?